I was working with a customer who had made their journey to the cloud. As part of their old infrastructure, they had a server that received backup dumps from multiple servers, and it was becoming a single point of failure. The same server was also used to move data between environments, which sometimes involved placing files in a temporary area before moving them to a non-routed environment (Dev, Test, and UAT, to mention a few). Finally, the volume of data was large, so it had to move quickly, whether restoring to the original environment or copying to a different location.

They were looking for a more modern solution to address some of the current issues. The focus was to ensure that the data centralized on that server was duplicated elsewhere, and perhaps to replace the server altogether. Several options are available, such as Azure Backup and Azure Files.

After a few discussions and some proposed ideas, we settled on a solution that would be easy to use, secure, and reliable: Storage Accounts, with the AzCopy utility copying the data from the server to the storage area. Be aware that an updated version of AzCopy (V10.10.0) has just been released. It contains some new features and a slew of fixes for bugs that popped up in earlier versions.

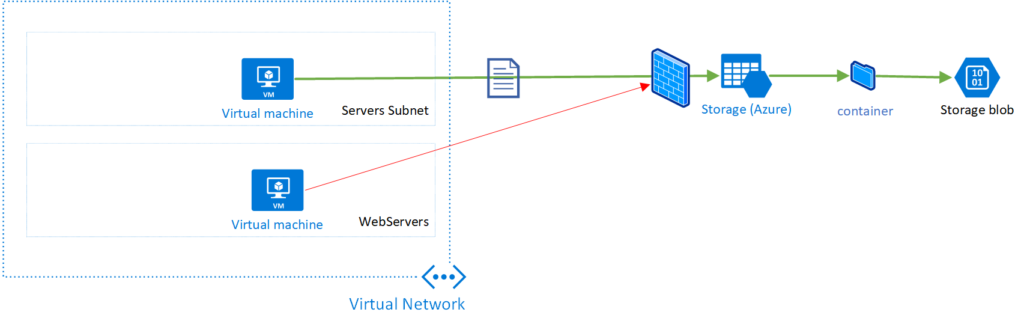

To improve the security of the overall solution, we will configure the firewall and virtual network feature at the Storage Account level so that only connections from a specific subnet can communicate with the Storage Account. On top of that, we are going to create a system-managed identity for the virtual machine and assign an RBAC (role-based access control) role to that VM only, so that it alone can write data to the Storage Account.

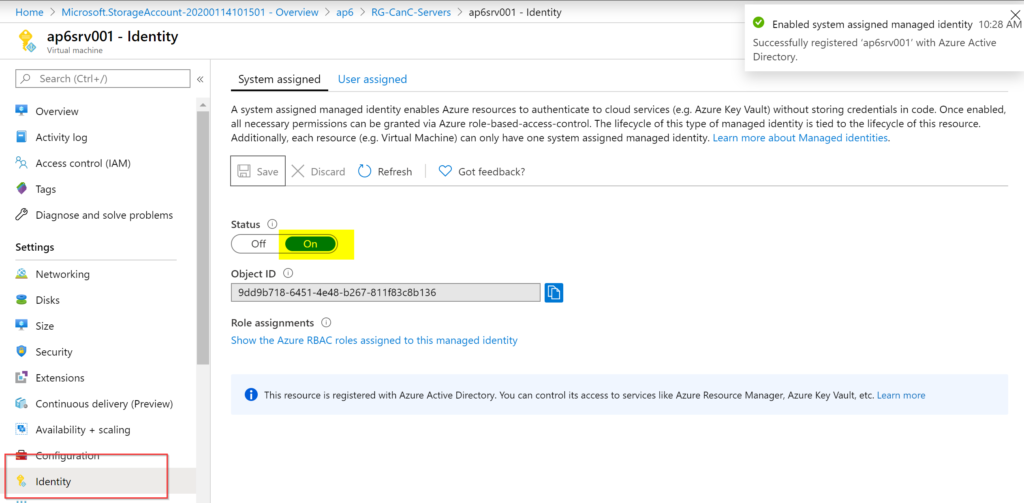

A system-managed identity is enabled at the VM level, and it creates an identity for the VM in Azure Active Directory. That identity is unique to the VM, and it will exist while the VM exists and cannot be passed around.

The process to copy data to other environments was simplified because the customer could use a solution like Storage Explorer to see the files and download the required files. Note: The virtual network/IPs for those environments must be allowed in the Storage firewall and virtual network area first.

The high-level design of the solution is depicted in the image below, where the green lines show allowed traffic and red lines show denied traffic (in case that VM tries to connect).

Configuring the virtual machine identity

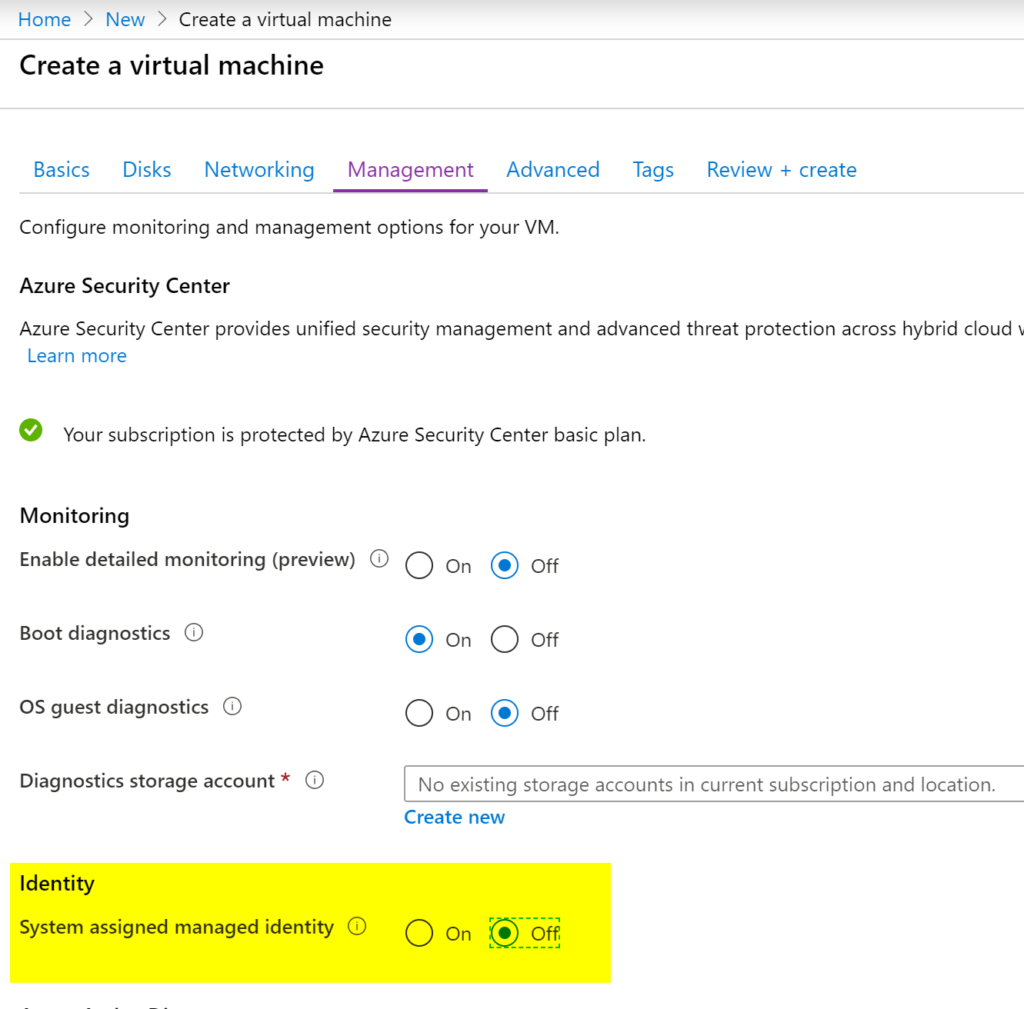

The first step in this article is to enable a system-managed identity on the VM that is going to copy data to our Storage Account. We can enable it while provisioning the VM, in the Management tab, as depicted in the image below.

If the VM was created without that option, the process to enable it is straightforward. In the desired VM blade properties, click on Identity, switch the Status to On and then Save. A dialogue box will ask to confirm the registration of the VM with Azure Active Directory. Click on Yes to confirm. The result should be similar to the image depicted below.
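For readers who prefer scripting over the portal, the same change can be sketched with the Azure CLI. This is a minimal sketch and not part of the original walkthrough: it assumes the Azure CLI (`az`) is installed and signed in, and the function name and its two arguments (resource group and VM name) are hypothetical placeholders.

```shell
# Hypothetical helper (not from the article).
# $1 = resource group, $2 = VM name
enable_vm_identity() {
  # Turn on the system-assigned managed identity for an existing VM.
  az vm identity assign --resource-group "$1" --name "$2" || return 1

  # Print the principal (object) ID that Azure Active Directory created for the VM.
  az vm show --resource-group "$1" --name "$2" \
    --query identity.principalId --output tsv
}
```

Calling `enable_vm_identity my-rg my-vm` (both names are examples) should leave the Identity blade showing the status as On.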

Creating a Storage Account

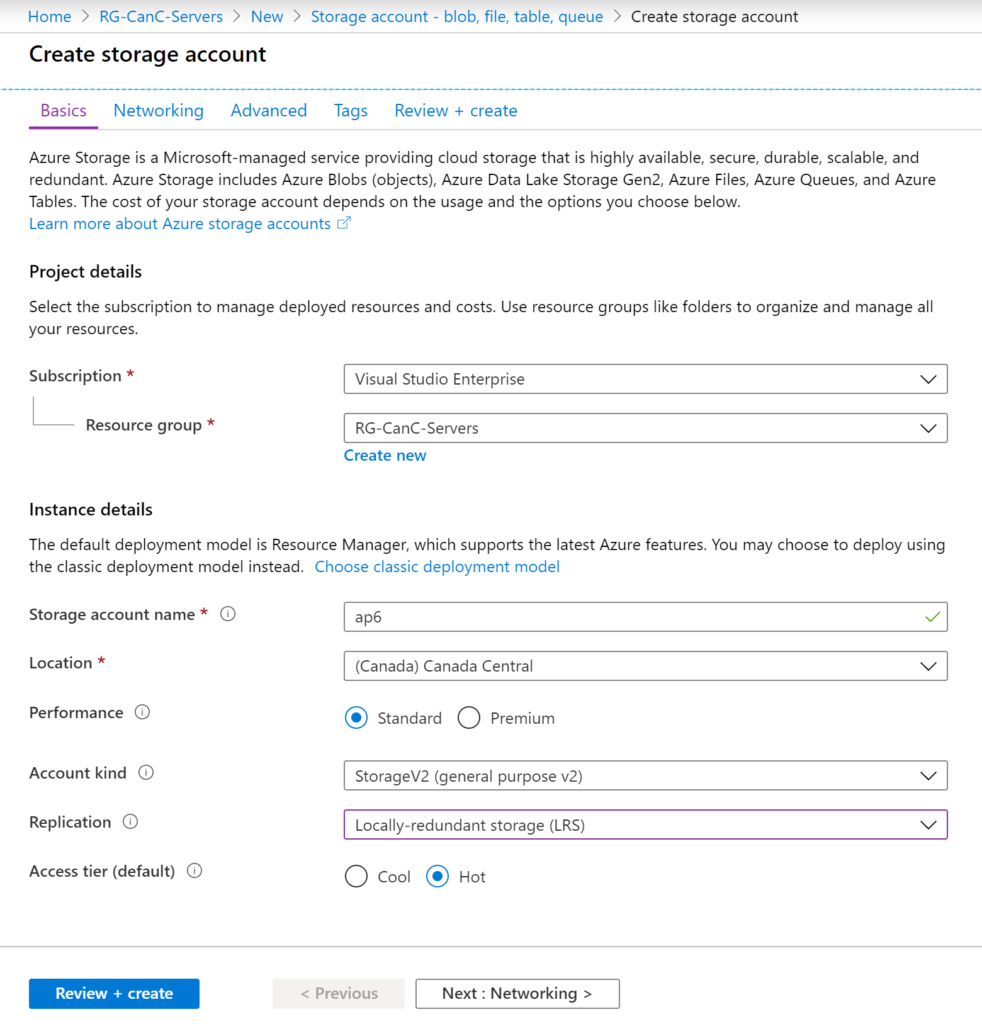

Our first step is to create a Storage Account that will serve as the temporary repository for our files. To create a new Storage Account using the Azure Portal, click on Create a Resource, located in the top left corner of the Azure Portal. Type in Storage Account and hit Enter. On the results page, click on Storage account – blob, file, table, queue, and in the new blade, click on Create.

In the Create Storage Account wizard, define the resource group, Storage Account name, and location based on your requirements. In this article, because we are going to use small files, we are going to use Standard performance, the StorageV2 type, locally redundant storage (LRS) as the replication method, and Hot as the access tier.
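The wizard choices above map onto a single Azure CLI call. The following is a sketch under assumptions: the function name and the three positional arguments are hypothetical, and only the SKU, kind, and tier values come from the settings described in this article.

```shell
# Hypothetical helper (not from the article).
# $1 = resource group, $2 = storage account name, $3 = Azure region
create_backup_storage_account() {
  # Standard performance + LRS replication, StorageV2 kind, Hot access tier.
  az storage account create \
    --resource-group "$1" \
    --name "$2" \
    --location "$3" \
    --sku Standard_LRS \
    --kind StorageV2 \
    --access-tier Hot
}
```

Swapping `Standard_LRS` for a premium SKU is one way to compare the performance options discussed next, at a different cost.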

The performance is a discussion point when deciding which Storage Account to use. You can always try both performance options and find the balance between performance and cost for your solution.

After creating the Storage Account, we will lock it down to be accessible only from the subnet where the VM that is going to upload the files is located.

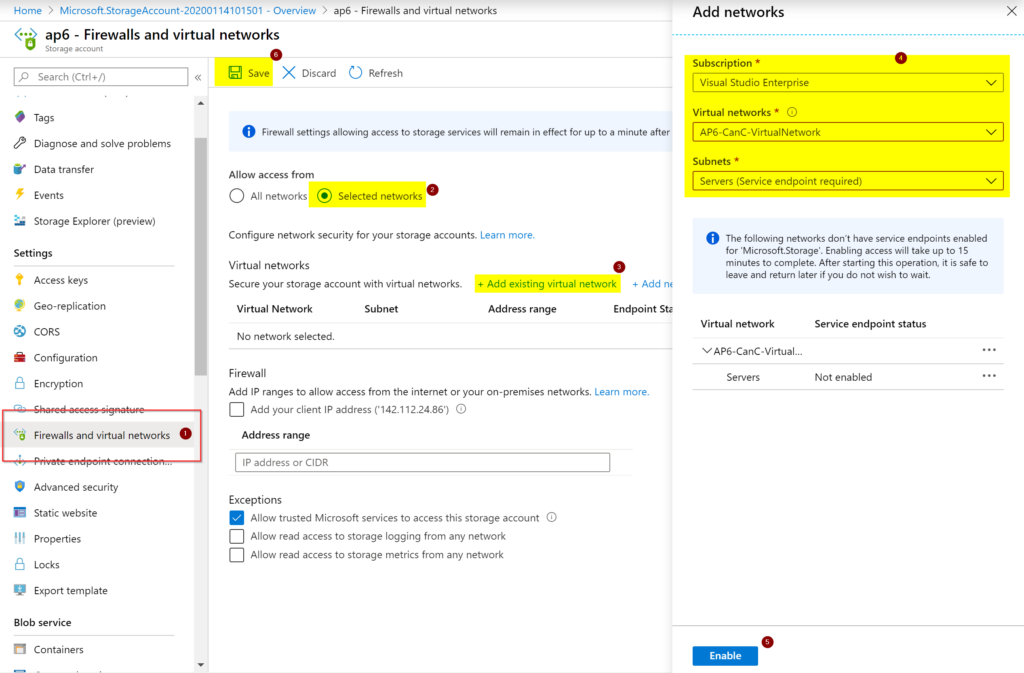

Click on the Storage Account, and then click on Firewall and virtual networks (Item 1). In the new blade, select Selected networks (Item 2) and click on Add existing virtual network (Item 3). A new blade will show up on the right side, where we need to define the virtual network and subnet (Item 4). Choose the subnet where the server that will use this Storage Account is located and click on Enable (Item 5).

To save all the changes made so far, click on Save (Item 6).
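The firewall steps above can also be approximated from the command line. This is a hedged sketch rather than the procedure used with the customer: the function name and arguments are hypothetical, and it assumes the virtual network lives in the same resource group as the Storage Account.

```shell
# Hypothetical helper (not from the article).
# $1 = resource group, $2 = storage account, $3 = virtual network, $4 = subnet
restrict_storage_to_subnet() {
  # Enable the Microsoft.Storage service endpoint on the subnet
  # (roughly what the Enable button does in the portal).
  az network vnet subnet update \
    --resource-group "$1" --vnet-name "$3" --name "$4" \
    --service-endpoints Microsoft.Storage || return 1

  # Allow traffic from that subnet.
  az storage account network-rule add \
    --resource-group "$1" --account-name "$2" \
    --vnet-name "$3" --subnet "$4" || return 1

  # Deny everything that is not explicitly allowed.
  az storage account update \
    --resource-group "$1" --name "$2" --default-action Deny
}
```

The allow rule is added before the default action is switched to Deny so that the VM's subnet is already allowed when the restriction takes effect.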

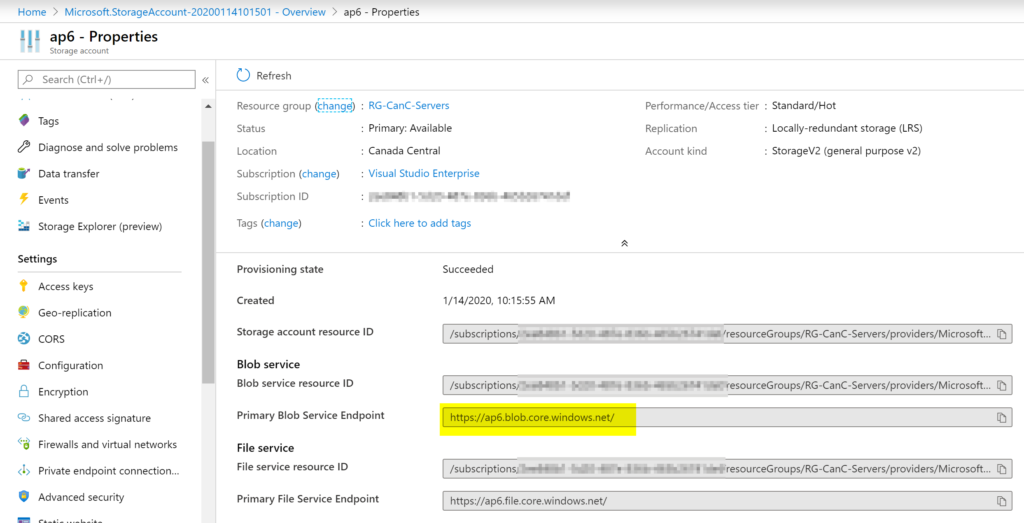

When copying the files, we will need the Primary Blob Service Endpoint for our current Storage Account. To obtain that information, click on Properties at the Storage Account level and copy the information as depicted in the image below.
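The endpoint can also be read with the Azure CLI instead of copying it from the portal. The sketch below assumes hypothetical function names and arguments; the value returned for `primaryEndpoints.blob` ends with a trailing slash, so a container name can be appended directly.

```shell
# Hypothetical helpers (not from the article).
# $1 = resource group, $2 = storage account
get_blob_endpoint() {
  az storage account show --resource-group "$1" --name "$2" \
    --query primaryEndpoints.blob --output tsv
}

# $1 = resource group, $2 = storage account, $3 = container name
container_url() {
  # The endpoint already ends in "/", so the container name is appended as-is.
  printf '%s%s\n' "$(get_blob_endpoint "$1" "$2")" "$3"
}
```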

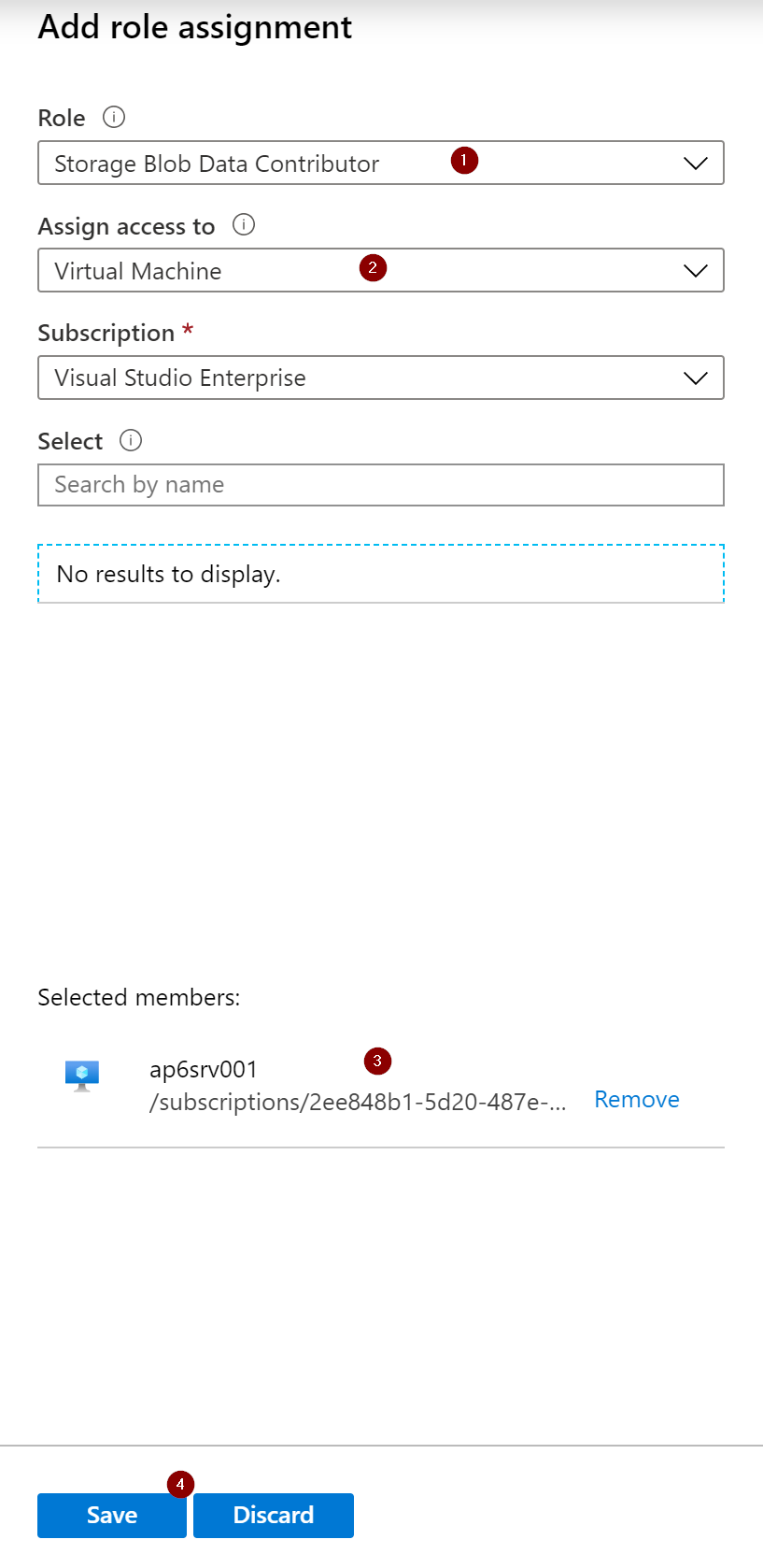

The final step is to create a role assignment at the Storage Account level for the virtual machine on which we have just enabled a managed identity. In the Storage Account blade properties, click on Access Control (IAM). In the new blade, click on +Add, then Add Role Assignment.

In the new blade, select the Storage Blob Data Contributor role (Item 1), select Virtual Machine (Item 2) in the Assign access to field (Item 3), select the desired VM from the list, and click on Save (Item 4).
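As a scripted counterpart to the role assignment above, the following sketch looks up the VM's principal ID and the Storage Account's resource ID, then assigns the role at that scope. The function name, its arguments, and the variable names are hypothetical; it assumes the managed identity is already enabled on the VM and that both resources share a resource group.

```shell
# Hypothetical helper (not from the article).
# $1 = resource group, $2 = VM name, $3 = storage account
grant_vm_blob_contributor() {
  # Object ID of the VM's system-assigned identity.
  vm_principal_id="$(az vm show --resource-group "$1" --name "$2" \
    --query identity.principalId --output tsv)" || return 1

  # Full resource ID of the Storage Account, used as the assignment scope.
  storage_scope="$(az storage account show --resource-group "$1" --name "$3" \
    --query id --output tsv)" || return 1

  az role assignment create \
    --assignee-object-id "$vm_principal_id" \
    --assignee-principal-type ServicePrincipal \
    --role "Storage Blob Data Contributor" \
    --scope "$storage_scope"
}
```

Role assignments can take a few minutes to propagate, so an immediate AzCopy attempt may still be denied.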

Copying data from the VM to the Storage Account

Once logged on to the virtual machine, copy the AzCopy utility to it. It is a single executable with no installation required; save it in a folder (C:\Windows if you want to use it from anywhere on your VM). AzCopy is available for download as a ZIP file.

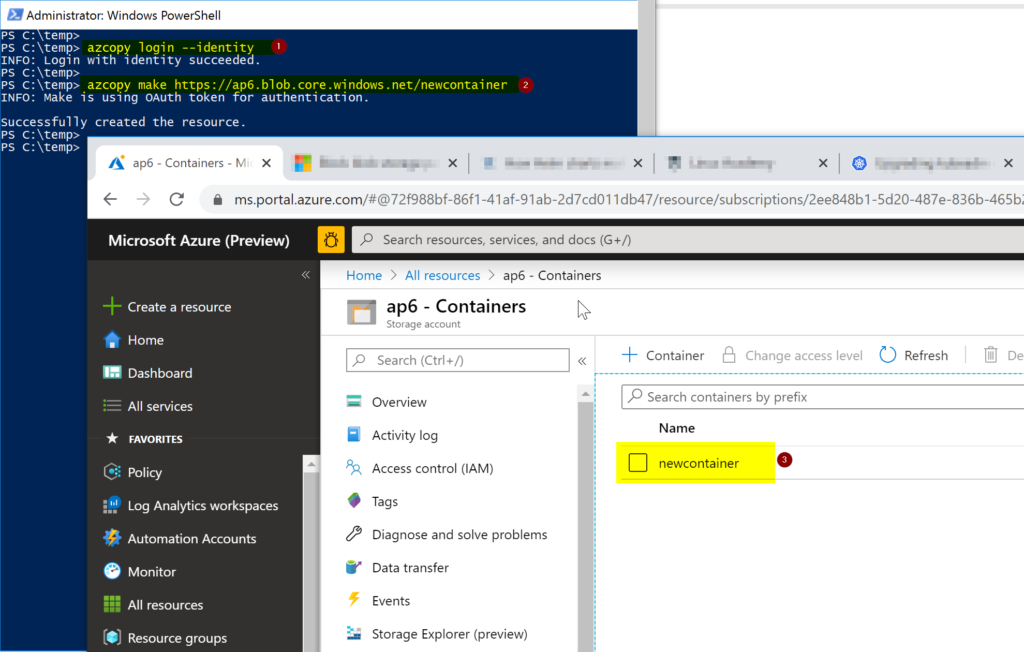

The first two commands that we are going to execute make sure that we can establish a session and then create a container in the Storage Account. The first one (Item 1) logs in using the VM-managed identity, and the second one (Item 2) creates a new container. The result of both actions can be seen at the Storage Account\Container level (Item 3).

AzCopy login --identity

AzCopy make https://ap6.blob.core.windows.net/newcontainer

To copy an entire folder from the current server to the Storage Account, use the syntax listed below. The AzCopy utility provides a great summary when the copy is complete.

AzCopy copy 'c:\temp' 'https://ap6.blob.core.windows.net/newcontainer' --recursive
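To confirm what landed in the container after a copy, the upload can be followed by a listing. This is a small sketch written as a POSIX shell function; the function name and arguments are hypothetical, it assumes an AzCopy session has already been established with the login command shown earlier, and on the Windows VM the same `azcopy` subcommands would be run from PowerShell or a batch file instead.

```shell
# Hypothetical helper (not from the article).
# $1 = local folder, $2 = container URL
copy_and_verify() {
  # Upload the folder and everything beneath it.
  azcopy copy "$1" "$2" --recursive || return 1

  # List the blobs now present in the container.
  azcopy list "$2"
}
```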

This article has been updated with new information.

Featured image: Designed by Macrovector / Freepik

Great article. We are currently using something similar to move files from a VM to a web-accessible directory.

Do you need to “authenticate” each time you run your script or is that token always available now?

Also, it’s worth noting the log files that pile up and should be part of regular maintenance.

Hi Warren,

You can authenticate and log off every time you run the script, if you prefer. Authentication here just means running the login with --identity (no password whatsoever).

You are right about the logs, good catch!
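Putting both points from this exchange together, a scheduled run could log in, copy, log out, and then tidy up AzCopy's own job files. This is only a sketch under assumptions: the function name and arguments are hypothetical, it is written for a POSIX shell (a Windows script would call the same `azcopy` subcommands), and it relies on `azcopy jobs clean`, which removes the log and plan files of jobs with the given status.

```shell
# Hypothetical helper (not from the article).
# $1 = local folder, $2 = container URL
run_backup_copy() {
  # Authenticate with the VM's managed identity (no password involved).
  azcopy login --identity || return 1

  # Copy, remembering the result so cleanup still runs on failure.
  azcopy copy "$1" "$2" --recursive
  copy_status=$?

  # End the session and remove log/plan files left by completed jobs.
  azcopy logout
  azcopy jobs clean --with-status=completed

  return "$copy_status"
}
```

Only completed jobs are cleaned here, so logs from failed runs stay available for troubleshooting.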