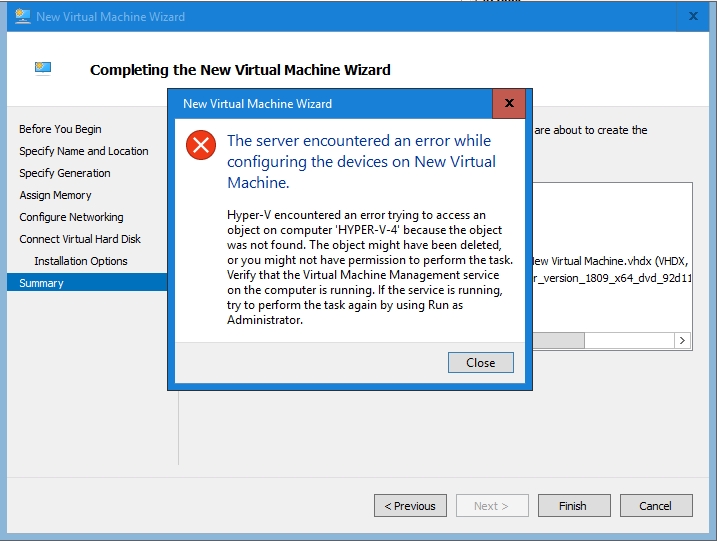

Recently, I tried to create a new virtual machine on a normally reliable Hyper-V server only to receive an error message. The error message, which you can see in the screenshot below, states that I might not have permission to create the virtual machine, or that the Virtual Machine Management Service might not be running.

I knew right away that the error message was misleading. I have the required permissions to create a VM, and I verified that the Virtual Machine Management Service was running. Furthermore, other virtual machines on the host seemed to be working normally.

After attempting the virtual machine creation process a few times, I noticed that the virtual machine was actually being created and that the failure was occurring at one of the later stages of the VM creation process. After a bit more trial and error, I found that the failure was occurring when Hyper-V would attempt to mount an ISO file into a virtual DVD drive. If I instead chose the option to install an operating system later on, then the virtual machine creation process would succeed.

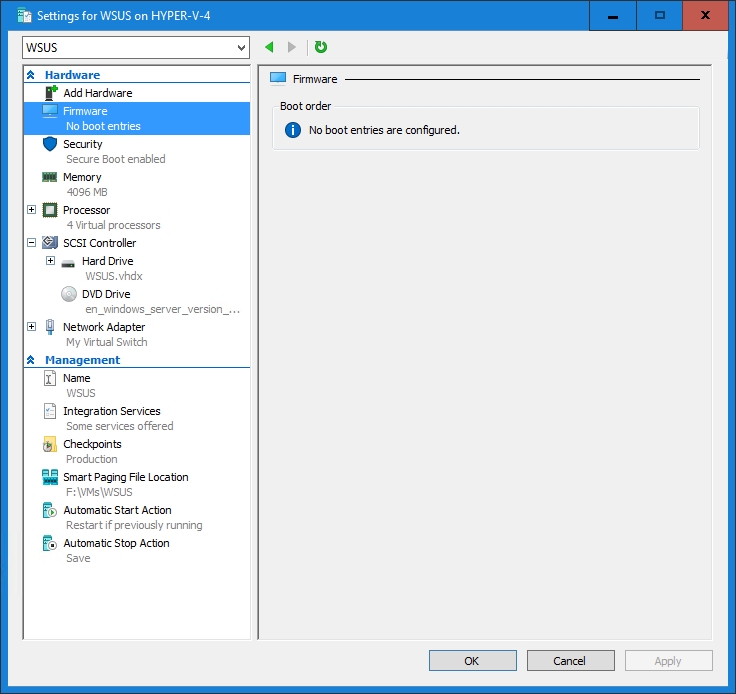

After creating the virtual machine, I went into the VM settings, added a virtual DVD drive, and mapped it to the ISO file containing the operating system that I wanted to install. Everything seemed fine until I tried booting the VM. The VM essentially ignored the virtual DVD drive and defaulted to a PXE boot. Assuming that the problem must be related to the VM’s firmware, I opened the VM’s Settings window within the Hyper-V Manager and went to the Firmware tab. I was greeted by a simple message saying "No boot entries are configured." You can see what the error looks like below.
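For reference, the same DVD drive attachment can be done from PowerShell rather than the Settings window. This is only a sketch: the ISO path is a hypothetical placeholder, and WSUS is the VM name used in this article.

```powershell
# Hypothetical ISO location; replace with the real path to your installation media.
$isoPath = "C:\ISO\WindowsServer.iso"

# Add a virtual DVD drive to the VM and map it to the ISO file.
Add-VMDvdDrive -VMName "WSUS" -Path $isoPath

# Confirm that the drive exists and points at the ISO.
Get-VMDvdDrive -VMName "WSUS" | Format-Table VMName, ControllerType, ControllerNumber, Path -AutoSize
```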

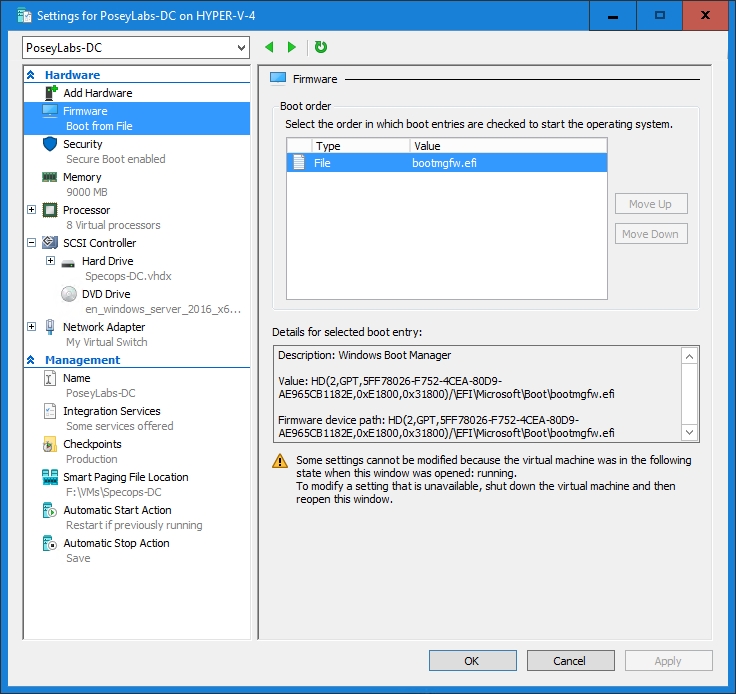

Normally, the Firmware tab lists the available boot entries and allows you to choose which one you want to use. Below, for example, is a screen capture taken from a properly functioning VM residing on the same host where the problems are occurring.

Time to fire up PowerShell

Since the Hyper-V Manager would not allow me to choose a boot device, I turned to PowerShell. The Get-VMFirmware cmdlet lists the boot order of a specified virtual machine. As you can see in the next figure, my problem VM (WSUS) is configured to boot from the network. In contrast, a functional VM (PoseyLabs-DC) is configured to boot from a file.
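As a rough sketch of that check, the boot entries can be read from the BootOrder property of the object that Get-VMFirmware returns. The VM name is the one from this article, and the selection of columns is just one reasonable choice.

```powershell
# "WSUS" is the problem VM in this article; substitute your own VM name.
$firmware = Get-VMFirmware -VMName "WSUS"

# Each boot entry reports a BootType such as Network, Drive, or File.
$firmware.BootOrder | Format-Table BootType, Description, FirmwarePath -AutoSize
```

Running the same two lines against a healthy VM makes the difference in boot types easy to compare side by side.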

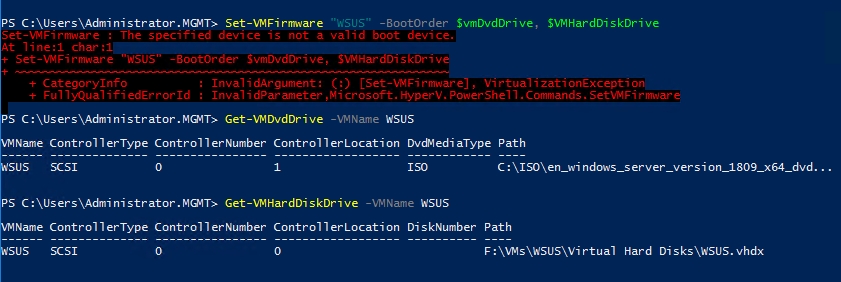

Thinking that I could use the Set-VMFirmware cmdlet to fix the problem, I entered the following command:

Set-VMFirmware "WSUS" -BootOrder $vmDvdDrive, $vmHardDiskDrive

In this case, WSUS is the name of my VM. The $vmDvdDrive variable holds the VM’s DVD drive, which I retrieved by using the Get-VMDvdDrive cmdlet. Similarly, the $vmHardDiskDrive variable holds the output of the Get-VMHardDiskDrive cmdlet.
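Put together, the full sequence looks something like the sketch below. It assumes the VM has exactly one DVD drive and one virtual hard disk, so that each variable holds a single object; the $vmName variable is introduced here only for illustration.

```powershell
# VM name used in this article.
$vmName = "WSUS"

# Capture the VM's DVD drive and hard disk drive objects.
# Assumption: the VM has one of each, so each variable holds a single object.
$vmDvdDrive      = Get-VMDvdDrive -VMName $vmName
$vmHardDiskDrive = Get-VMHardDiskDrive -VMName $vmName

# Ask the firmware to try the DVD drive first, then the hard disk.
Set-VMFirmware -VMName $vmName -BootOrder $vmDvdDrive, $vmHardDiskDrive
```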

At any rate, the command failed with a message indicating that my specified boot device was not valid. I actually ran the Get-VmDvdDrive and Get-VmHardDiskDrive cmdlets as a way of verifying the VM’s drives, and those commands were successful as shown below.

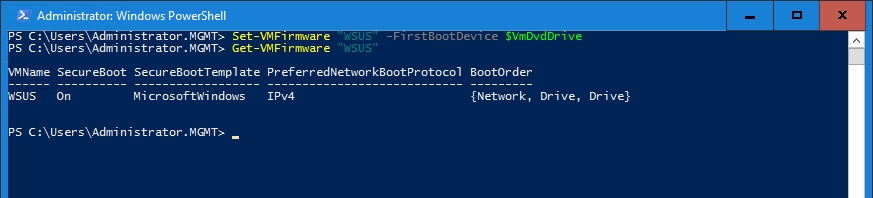

The next thing that I tried was a variation of the Set-VMFirmware command. Rather than specifying the boot order, I tried to specify the first boot device by using the FirstBootDevice parameter. Although this command did not return an error message, it ultimately did not have any effect on the VM, as shown below.
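A minimal sketch of that variation follows, again assuming a VM named WSUS with a single DVD drive. The last line re-reads the firmware so you can see whether the change took effect.

```powershell
# Retrieve the DVD drive and mark it as the first boot device.
$vmDvdDrive = Get-VMDvdDrive -VMName "WSUS"
Set-VMFirmware -VMName "WSUS" -FirstBootDevice $vmDvdDrive

# Re-read the boot order to check whether anything changed.
(Get-VMFirmware -VMName "WSUS").BootOrder | Format-Table BootType, Description -AutoSize
```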

At that point, I was kind of at a loss as to what the problem might be. I spent quite a bit of time browsing the server’s event logs looking for clues as to the source of the problem, but the event logs weren’t of any help. I also tried a few other tricks, such as restarting the Hyper-V Virtual Machine Management Service and tinkering with the BCDEdit utility. However, none of that helped me to solve the problem.

After a few frustrating hours of troubleshooting, I stumbled upon a message thread in which someone suggested that a fix that was designed to make Hyper-V manageable from Windows 10 breaks Generation 2 virtual machines. The proposed solution was to remove and reinstall the Hyper-V role. My problem did not completely match what was being described within the message thread, but I decided to try removing and reinstalling the Hyper-V role anyway.
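If you would rather remove and reinstall the role from PowerShell than from Server Manager, the sketch below shows one way to do it. It assumes a Windows Server host (a Windows 10 host manages Hyper-V as an optional feature instead) and a maintenance window, since each step reboots the server.

```powershell
# Step 1: remove the Hyper-V role. The server restarts to complete the removal.
Uninstall-WindowsFeature -Name Hyper-V -Restart

# Step 2: after the server is back up, reinstall the role along with
# the management tools. This triggers another restart.
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart
```

The two commands are run in separate sessions, because the restart after the first one ends the current session.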

One problem solved, another problem created

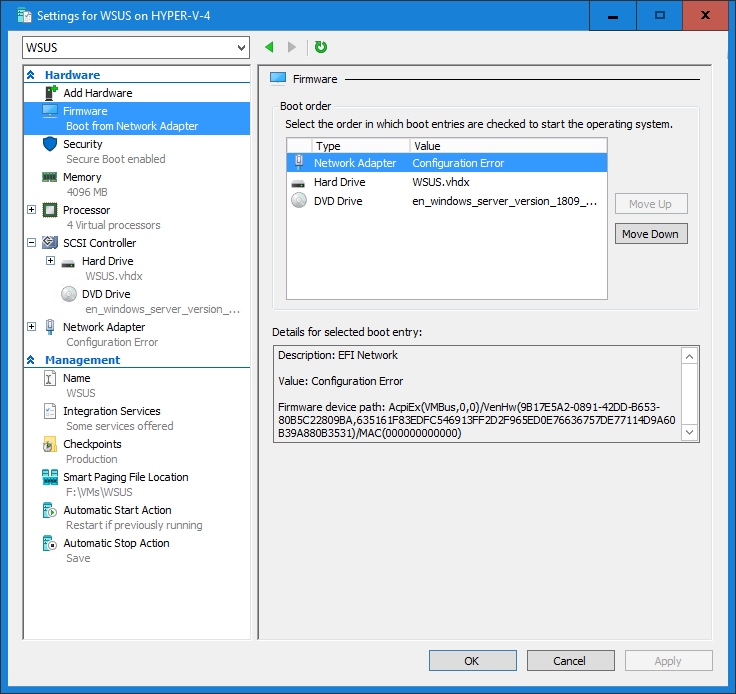

To make a long story short, removing and then reinstalling the Hyper-V role fixed one problem, but caused another. If you look at the screenshot below, you can see that following the reinstallation, the VM’s boot order became accessible. Incidentally, I did not recreate the virtual machine. Windows retained all of my virtual machines throughout the role removal and reinstallation.

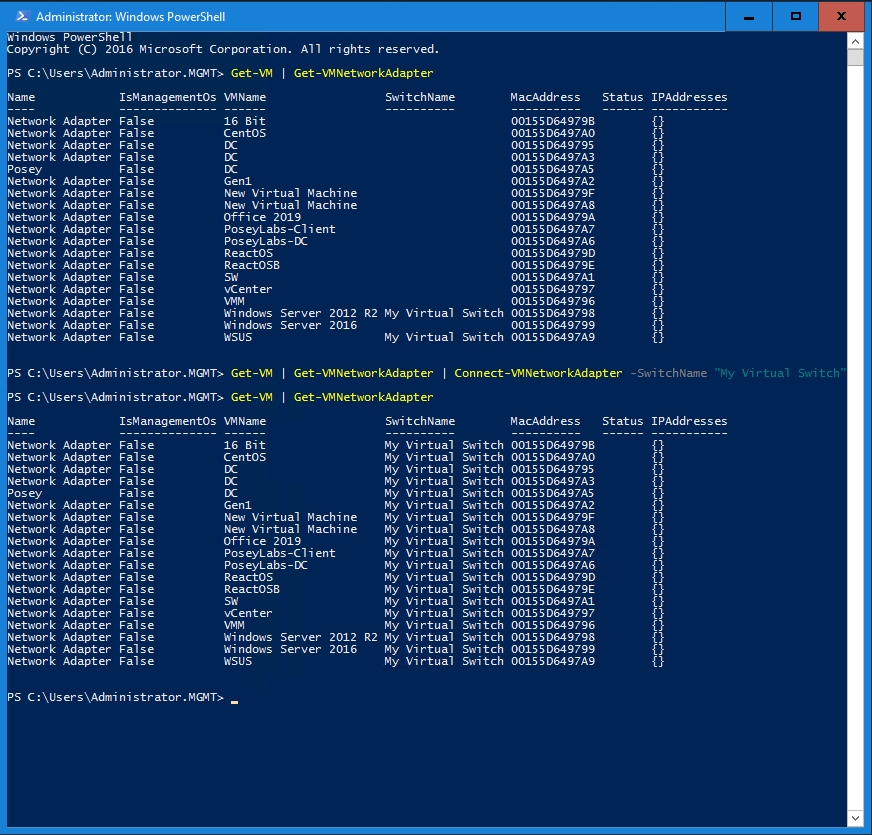

If you look closely at the above screenshot, you will notice that the Network Adapter is listed as having a configuration error. This happened because reinstalling the Hyper-V role broke my virtual switch. To fix the problem, I simply renamed the default Hyper-V virtual switch to match the name of the virtual switch that no longer existed after the role reinstallation. Unfortunately, I then had to reconnect all of my virtual machines to the newly renamed virtual switch. Since I only had about a dozen virtual machines, I thought about using the Hyper-V Manager for this task, but I decided to use PowerShell instead. Because my virtual switch is named My Virtual Switch, I used the following command:

Get-VM | Get-VMNetworkAdapter | Connect-VMNetworkAdapter -SwitchName "My Virtual Switch"

If you look at the screenshot above, you can see that all but two of my VMs (which I had already manually reconnected) had lost connectivity to the virtual switch. After running the command shown above, connectivity was restored.
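To check the result without relying on a screenshot, something like the following sketch lists every VM network adapter along with the switch it is attached to. An empty SwitchName column would indicate an adapter that is still disconnected.

```powershell
# List each VM's network adapters and the virtual switch they are connected to.
Get-VM |
    Get-VMNetworkAdapter |
    Sort-Object VMName |
    Format-Table VMName, Name, SwitchName, Status -AutoSize
```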

Why did Hyper-V fail? Who knows!

Ultimately, I have no idea why Hyper-V suddenly lost the ability to boot newly created VMs. I can only assume that perhaps there was a buggy update. In any case, reinstalling the Hyper-V role and reattaching the virtual switch fixed my problem.


it failed because your os in hyper v needs to load from ide and not scsi…. you can create scsi for data partitions

simply create an ide drive…. remove the scsi and point the ide drive to the vhd file

Thanks for the tip, but I have lots of Hyper-V VMs that boot from SCSI drives without any problem. I think that using an IDE drive was a requirement at one time, but that requirement no longer exists. Thanks again.

Just enjoying this same issue. Appreciate the thorough documentation of your troubleshooting steps. Unbelievable that reinstalling the role is still the best solution eight months later.

@Dan O. I hate to hear that you were having problems, but I am also glad to know that it wasn’t just me who had this particular issue. I was beginning to wonder if it was a one off situation.

Have been running (type 2) Hyper-V VMs under Win10 for years, but am unable to create/start new generation 2 Linux VMs — still trying to troubleshoot, thank you for the resource.

> Windows 10 Pro Version 21H1

[ Debian 10.9 Generation 2 VM ]

Some Linux builds will only work with Generation 1 VMs. Gen 2 support for Linux has improved tremendously in recent years, but only for specific Linux releases. I hope that helps.