One of the first concepts that computer science students are introduced to is Moore’s Law. Ironically, though, Moore’s Law has become somewhat distorted over the years. When it was introduced back in the 1960s, Moore’s Law stated that the number of transistors that can be placed on an integrated circuit (often paraphrased as the number that fit into one square inch) will double every year. Moore himself revised the period to two years in 1975, and many sources quote 18 months.

I have to admit that I seriously thought about not writing this article because there is so much ambiguity surrounding Moore’s Law. I have heard quite a few different variations of the law, with the most common being that available computing power (as opposed to the number of transistors that can fit into one square inch) will double every 18 months. I have also seen sources that indicate that Moore’s Law stopped working years ago, while others claim that Moore’s Law is still working to this day.
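To see how much these variations actually differ, the compounding can be worked out directly. The following is a minimal sketch, not a model of real chip history; the function name `growth_factor` and the 10-year horizon are assumptions chosen for illustration.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Return how many times a quantity multiplies over `years`
    if it doubles once every `doubling_months` months."""
    doublings = (years * 12) / doubling_months
    return 2 ** doublings


if __name__ == "__main__":
    # Compare the doubling periods commonly attributed to Moore's Law.
    for months in (12, 18, 24):
        factor = growth_factor(10, months)
        print(f"doubling every {months} months: x{factor:,.0f} after 10 years")
```

Over a single decade, a 12-month doubling period gives roughly a thousandfold increase, while a 24-month period gives only 32-fold, which is why the choice of definition matters so much.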

Moore’s Law and a limit to limitless

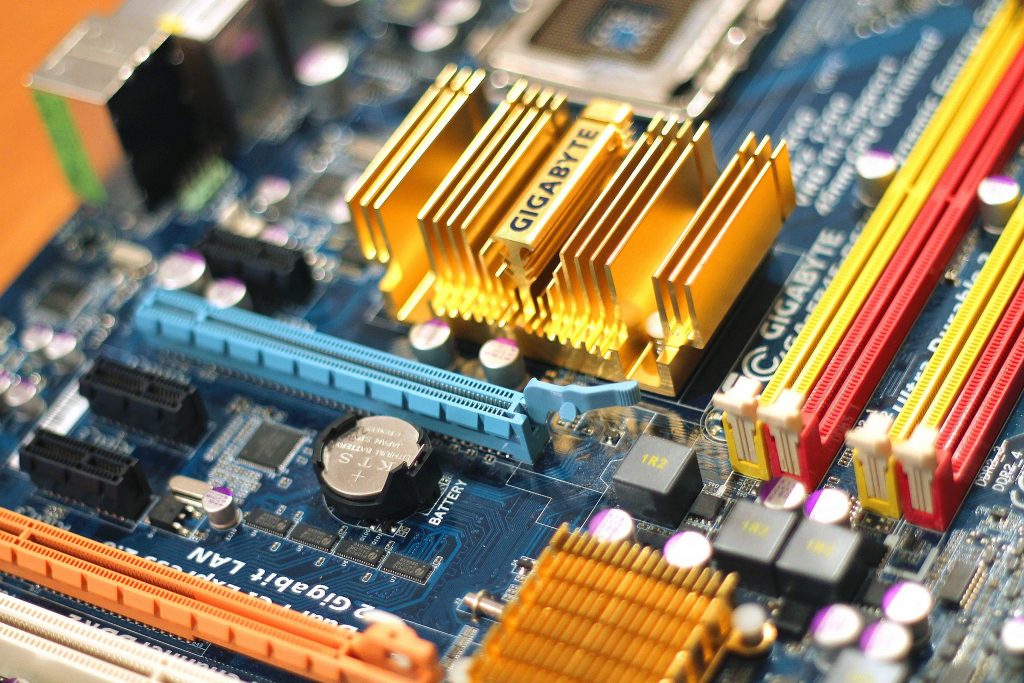

Regardless of how you define Moore’s Law, there are limits to the amount of computing power that current technology can produce. Chipmakers are already producing CPUs with 14nm transistors. At that scale, a transistor feature is only a few dozen atoms across (roughly 67). Further reducing the size introduces problems stemming from quantum tunneling, but there are plans to eventually create processors that use 5nm transistors.

The reason I mention this is that even current-generation CPUs feature transistors that are fewer than 100 atoms across. Think about that for a second. Cells in the human body range between 5,000 and 100,000 nanometers in size. A 14nm transistor is truly minuscule.
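The scale comparison above is simple arithmetic, sketched below. The atomic diameter of 0.21 nm is an assumption picked because it reproduces the "roughly 67" figure; published values for silicon vary with how the atom's size is defined. The function names are hypothetical.

```python
# Assumption: an effective atomic diameter of about 0.21 nm.
# This value is chosen to match the article's "roughly 67" figure.
ATOM_DIAMETER_NM = 0.21


def atoms_across(feature_nm: float, atom_diameter_nm: float = ATOM_DIAMETER_NM) -> int:
    """Estimate how many atoms fit side by side across a feature."""
    return round(feature_nm / atom_diameter_nm)


def cell_to_feature_ratio(cell_nm: float, feature_nm: float) -> float:
    """Return how many times larger a cell is than a transistor feature."""
    return cell_nm / feature_nm


if __name__ == "__main__":
    print("14nm feature:", atoms_across(14), "atoms across")
    print("5nm feature:", atoms_across(5), "atoms across")
    # Cell sizes quoted in the text: 5,000 to 100,000 nanometers.
    print("smallest cell vs 14nm:", round(cell_to_feature_ratio(5_000, 14)), "times larger")
    print("largest cell vs 14nm:", round(cell_to_feature_ratio(100_000, 14)), "times larger")
```

Under the same assumption, a 5nm feature would be only about two dozen atoms across, which gives a sense of how little room is left.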

There will eventually come a day when an atom’s size becomes the limiting factor (assuming that there is a way to overcome the challenges of quantum tunneling that must be dealt with first).

For those who prefer the much broader definition of Moore’s Law, which states that available computing power, not transistor density, doubles every 18 months, the challenge becomes how to get that computing power. One option is to create physically larger and larger CPUs, thereby increasing the transistor count. However, that approach presents challenges related to the ability to create chips of that size with the required level of precision. There would also likely be issues with heat dissipation. Even the speed at which electrical signals travel across the chip could one day become a limiting factor.
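The signal-speed limit can be made concrete with a rough upper bound: nothing travels faster than light, so the distance light covers in one clock cycle caps how far a signal can go in that cycle. This is a back-of-the-envelope sketch; the `velocity_fraction` parameter and the 0.5 value used below are illustrative assumptions, not measured figures for any real chip.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def distance_per_cycle_cm(clock_hz: float, velocity_fraction: float = 1.0) -> float:
    """Return how far a signal travels in one clock cycle, in centimetres.

    velocity_fraction is the signal speed as a fraction of the speed of
    light (1.0 is the physical upper bound; real wires are slower).
    """
    metres = SPEED_OF_LIGHT_M_PER_S * velocity_fraction / clock_hz
    return metres * 100


if __name__ == "__main__":
    for ghz in (1, 3, 5):
        best_case = distance_per_cycle_cm(ghz * 1e9)
        # Assumption: signals propagate at half the speed of light.
        slower = distance_per_cycle_cm(ghz * 1e9, velocity_fraction=0.5)
        print(f"{ghz} GHz: at most {best_case:.1f} cm per cycle, {slower:.1f} cm at 0.5c")
```

At 3 GHz the best case is about 10 cm per cycle, so a much larger chip running at a high clock speed could not get a signal from one edge to the other within a single cycle.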

So long, silicon?

So, what would it take for Moore’s Law to continue to work well into the distant future? The solution that I hear discussed most often is building CPUs from something other than silicon. Gallium arsenide, for example, has occasionally been pitched as an alternative to silicon.

In my opinion, the use of exotic materials will probably be an important stepping stone in the quest to produce ever more powerful CPUs, but I doubt that it will be the basis for a solution that keeps Moore’s Law working indefinitely. Any substance, regardless of what it is, has certain molecular properties, and those properties define the substance’s limitations. Overcoming these limits will ultimately be a matter of coming up with new microprocessor architectures that are radically different from those used today.

Because I do not work for a chip manufacturer, I have no way of knowing what concepts those companies are exploring for futuristic microprocessors, but I do have a few ideas of my own.

One possibility is to base logic circuits on something other than the transistor. I have no idea what might replace the transistor (aside from quantum bits), but there are a couple of reasons why I think that the chip manufacturers need to look beyond the transistor.

First, the transistor is nearing its scalability limits. As previously mentioned, there are major technical challenges associated with creating transistors that are smaller than 14nm.

Transistors are showing their age

The second reason I think it’s time to look for a different technology is that the transistor is ancient technology. Bell Laboratories invented the transistor way back in 1947. That was more than 70 years ago! Of course, I realize that the microscopic transistors used in modern integrated circuits are vastly different from the transistor that Bell Laboratories created so long ago, but modern transistors still work in essentially the same way as their decades-old ancestor.

Incidentally, the computer predates the transistor. Early computing devices were based on the use of vacuum tubes. I’m certainly not suggesting that we go back to using vacuum tubes, but rather I am making the point that there is no universal law mandating that computers be based on the use of transistors.

Another way in which computers of the future might get a boost in processing power is by utilizing harmonic waves. This idea is a little bit difficult to explain, but it is something that I have been working on for a long time.

The best real-world analogy to the harmonic wave concept is music. If you forget about sharps and flats, there are only seven musical notes (A, B, C, D, E, F, and G). Even so, there are vast differences in how various musical artists sound. Iron Maiden, for example, sounds absolutely nothing like Britney Spears, even though both are limited to using the same seven notes.

Additionally, musical notes can be grouped into octaves. There are high Cs and low Cs, for example. The note sounds different depending on which octave it is played in.

Now, imagine that those notes are not actually notes, but rather data. I envision a chip that can perform rapid frequency shifts (similar to switching octaves or switching instruments) on the various data streams, but with the frequency itself acting as part of the data.
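One way to picture "frequency acting as part of the data" is a toy encoder in which a symbol is carried by which note is played and by which octave it is played in. The sketch below is only an illustration of the musical analogy, not the chip design described here. The names `BASE_HZ`, `encode`, and `decode` are hypothetical, and the base frequencies are the standard equal-temperament pitches for the octave starting at middle C.

```python
import math

# Standard equal-temperament frequencies (Hz) for the seven natural notes,
# starting at middle C. Using these as "symbols" is an illustrative assumption.
BASE_HZ = {
    "C": 261.63, "D": 293.66, "E": 329.63, "F": 349.23,
    "G": 392.00, "A": 440.00, "B": 493.88,
}


def encode(note: str, octave_shift: int) -> float:
    """Map a (note, octave shift) pair to one frequency.

    Each octave shift doubles (or halves) the frequency, so the
    frequency alone carries both pieces of information.
    """
    return BASE_HZ[note] * (2 ** octave_shift)


def decode(freq_hz: float) -> tuple[str, int]:
    """Recover the (note, octave shift) pair from a frequency."""
    # How many whole doublings separate this frequency from the base octave.
    octave_shift = math.floor(math.log2(freq_hz / BASE_HZ["C"]))
    # Fold the frequency back into the base octave, then pick the nearest note.
    base = freq_hz / (2 ** octave_shift)
    note = min(BASE_HZ, key=lambda n: abs(BASE_HZ[n] - base))
    return note, octave_shift


if __name__ == "__main__":
    message = [("C", 0), ("A", 1), ("G", -1), ("B", 3)]
    signal = [encode(note, shift) for note, shift in message]
    print("frequencies:", signal)
    print("decoded:", [decode(f) for f in signal])
```

With seven notes and a handful of octaves, each transmitted frequency stands for one of several dozen distinct values rather than a single on or off state, which is the intuition behind treating the frequency itself as data.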

It’s very possible that this concept will end up being better suited for use in storage or broadband transmission rather than the CPU. Still, it’s a new way of thinking about data processing.

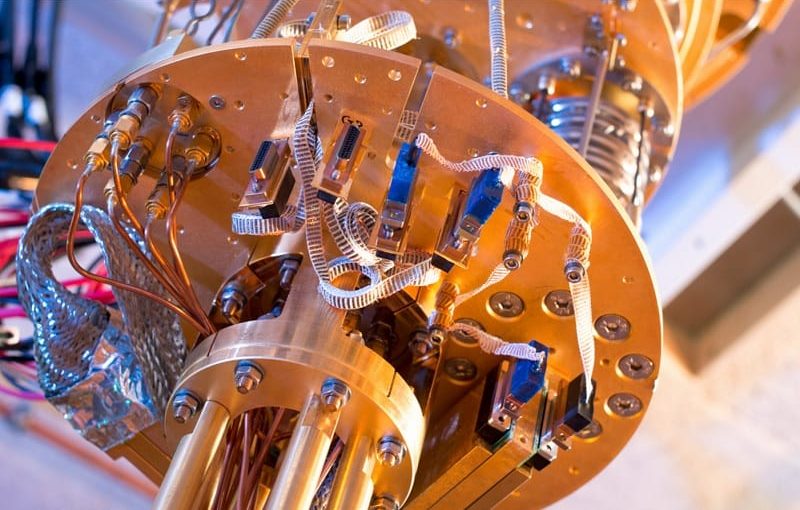

Quantum computing is the future

Ultimately, I think that the quantum computer will probably replace traditional silicon models, at least in situations that demand the greatest amount of processing power available. Of course, quantum computing poses its own challenges. Current generation quantum computers have to maintain a cryogenic state, and they cannot run conventional software.

Even so, I don’t think that we have yet reached the limit of what can be done with more conventional CPUs. MIT Technology Review recently published an article explaining that a team at MIT had successfully created a chip that was based on the use of carbon nanotubes. The wall of each tube is just a single carbon atom thick.

According to the story, carbon nanotube transistors outperform their silicon counterparts and are up to 10 times more energy efficient. Perhaps most importantly, however, the chip that was created by MIT can run conventional software.

In spite of all of their advantages, carbon nanotube-based processors are not ready for general use. The manufacturing process creates two different types of nanotubes. One type is perfect for use in digital electronics, while the second type undermines the circuit’s performance. The next challenge will be for researchers to figure out a way to separate the two types so that a chip can be created using only the desirable type of nanotube.

Featured image: Pixabay