Artificial intelligence is the most alien intelligence humanity has ever encountered. It’s also a great way to discern how humans learn and reason. In many ways, though, poisoning an AI works just like misleading a biological intelligence: the wrong information is fed to the machine, which internalizes it and comes to the wrong conclusions.

At this time, AI poisoning poses three cybersecurity issues:

- Teaching AI to trust external parties

- Misrepresenting crucial data to the AI

- Guiding AI to wrong practices

This is a nightmare for experts who focus on cybersecurity’s technical components. Yet, those experienced in holistic cybersecurity will recognize similarities between this problem and problems with employee training.

For example, attackers often rely on an employee’s unawareness to infiltrate a company. Untrained employees are frequent targets of phishing scams, and those scams often work. The same is true of AI poisoning. Let’s take a more detailed look at these cybersecurity issues.

Teaching AI to Trust External Parties

In this case, the attacker won’t hack the machine in the traditional sense. They also won’t use brute force or code manipulation. Instead, the attacker will purposefully train the AI incorrectly, feeding it information they can exploit once the AI starts reasoning.

Over time, they’ll add incorrectly labeled information to the pool of open-source data that the AI draws on for machine learning. In turn, the AI will gradually learn to trust that specific external party when it shouldn’t, clearing the way for the attacker’s future requests. Imagine the dangerous exploits that can follow!

Misrepresenting Crucial Data

Mislabeled information is a big issue for machine learning, even without malicious elements. Yet, if someone is deliberately trying to influence the process, the impact on the end product can be severe.

Just as an attacker can make an AI trust where it shouldn’t, they can also provoke other responses from it. For instance, they can feed it a skewed pool of information on a subject to trick the AI into thinking that something is more common than it is.

On a more visible scale, this has become apparent with AI chatbots becoming racist once exposed to the internet. Similarly, an attacker can train an AI to believe untrustworthy people, allow access where it shouldn’t, disclose private information, or even avoid reporting wrongdoing.

Guiding AI to Wrong Practices

This is the hardest hack, but it’s the most impactful on businesses in particular. For example, assume a company is using AI to check whether someone is eligible for a loan. In that case, an attacker can slowly give the AI incorrect parameters. In turn, that can result in high-risk loans being approved, or in a particular group being wrongly excluded or included.

On the outside, the result looks like a reasonable conclusion because the AI will match it with other parameters. That said, it’ll over- and under-represent crucial data to rationalize the practice. Unless someone is willing to take a deep dive into the code, they won’t find the mistake.
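To make the loan example concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the credit scores are invented, and the "model" is just a midpoint threshold between the average score of repaid and defaulted loans, which is far simpler than any real lending system. It only shows how a handful of falsely labeled records can shift a learned parameter.

```python
from statistics import mean

def learn_threshold(records):
    """Learn an approval cutoff: the midpoint between the average score
    of repaid loans and the average score of defaulted loans."""
    repaid = [score for score, outcome in records if outcome == "repaid"]
    defaulted = [score for score, outcome in records if outcome == "defaulted"]
    return (mean(repaid) + mean(defaulted)) / 2

def approve(score, threshold):
    """Approve an applicant whose score reaches the learned cutoff."""
    return score >= threshold

# Hypothetical training history: (credit score, outcome)
clean_records = [
    (700, "repaid"), (720, "repaid"), (740, "repaid"), (760, "repaid"),
    (500, "defaulted"), (520, "defaulted"), (540, "defaulted"), (560, "defaulted"),
]

# Poisoned records: low scores falsely labeled as repaid
poisoned_records = clean_records + [(450, "repaid")] * 4

clean_threshold = learn_threshold(clean_records)
poisoned_threshold = learn_threshold(poisoned_records)

applicant_score = 580
print(clean_threshold, approve(applicant_score, clean_threshold))
print(poisoned_threshold, approve(applicant_score, poisoned_threshold))
```

In this toy setup the same applicant is rejected by the model trained on clean data and approved by the poisoned one, even though nothing in the decision code changed.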

The problems of AI poisoning are plentiful, especially for those who plan on relying on it heavily. Solving these issues will take time and training, both for the people working on the AI and the machine itself. The solutions to AI poisoning are stunningly similar to how we train children. Let’s see why.

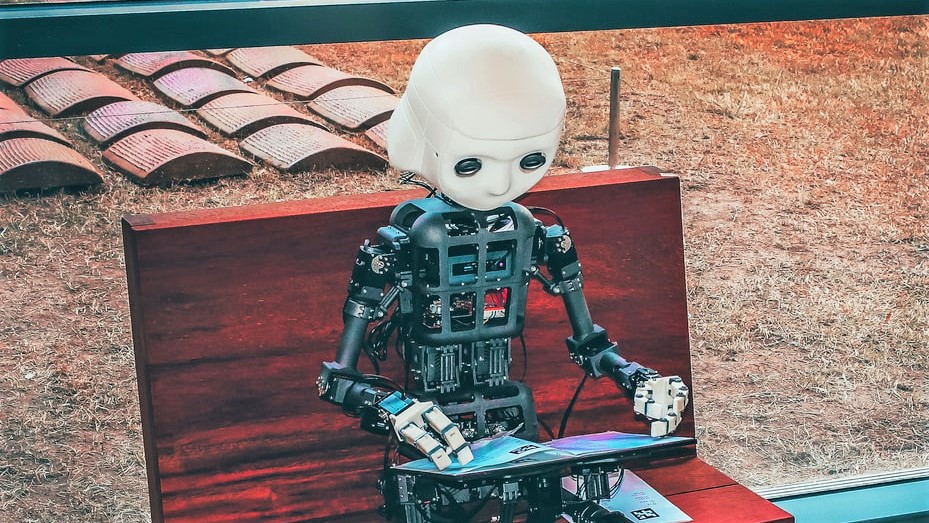

AI Is a Super-Intelligent Toddler

Intelligence (human or artificial) often assumes that other entities operate with the same information and have the same capacities. That assumption is an ingrained flaw. It causes plenty of conflict among people, and it applies just as much when training AI.

We often forget that AI is completely alien to this world. On one hand, it’s super intelligent: it can process huge amounts of data in an instant. On the other hand, an average toddler has experienced far more of the world than any AI, which learns within a much narrower context.

Even with all that experience on the human side, the issue for most companies isn’t digital or technical cybersecurity. Instead, it’s employee behavior that threatens enterprise data. Humans often have lapses in judgment, which can trigger an attack on the company.

While you can often remedy these human lapses with certain measures, it’s a bit different with AI. AI doesn’t forget or automatically place data into hierarchies. Instead, it remembers everything exactly as it was given. It also won’t discriminate between different data or discard anomalies unless you tell it to.

Think of it this way. AI poisoning would be like a bad uncle teaching your toddler how to swear. The kid doesn’t know the context, so they’ll say those words because they seem fun. Yet, the consequences will be dire when your kid blurts out a swear word in public.

For cybersecurity, AI poisoning is a huge issue, because you’re basically giving a two-year-old control over your security analytics. If it was taught that certain data is not malware, and that it should not only allow that data but hide it, you can only hope that you find out before the public does.

AI Poisoning is More Psychology than Computing

To understand the issue behind AI poisoning, we need to understand machine learning and the issue with the data it has. Unlike humans, who learn through senses such as vision, smell, and touch, an AI is “fed” pictures from which it should discern what an object is.

Now, onto the issues. First, an image is just a mix of pixels with different values, so it offers very little information compared to a human experience. When you see a dog, you take in the size, shape, movement, smell, and sound together with the color and features. Then, you’ll “file” all of that under the same category.

Now, imagine everyone tells you that dogs and cats are the same. At some point, you’d internalize that information and simply go with it. That’s how we have different languages.

Not to mention that (most) humans can’t easily read digital data strings and recognize them as true or false. That means they can’t always sort through lines of code to differentiate between right and wrong information.

Yet, the AI gets so little information from each source and doesn’t disregard anomalies the same way as humans. That means we can make a huge impact with just a bit of mislabeled data! We can make the AI come to the wrong conclusion once asked.
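A toy sketch in Python shows how little mislabeled data it can take. The assumptions here are loud ones: the two-number "features" and the labels are invented, and the classifier is a bare 1-nearest-neighbour lookup chosen only because it is easy to read, not because real security tools work this way.

```python
from math import dist

def nearest_label(training, point):
    """Return the label of the training sample closest to `point`
    (a 1-nearest-neighbour classifier)."""
    _, label = min(training, key=lambda sample: dist(sample[0], point))
    return label

# Hypothetical training samples: (features, label)
clean = [
    ((1.0, 1.0), "benign"), ((1.2, 0.8), "benign"), ((0.9, 1.1), "benign"),
    ((5.0, 5.0), "malware"), ((5.2, 4.9), "malware"), ((4.8, 5.1), "malware"),
]

# One mislabeled sample planted next to where the attacker's file will land
poison = [((5.1, 5.0), "benign")]

payload = (5.1, 5.05)  # hypothetical features of the attacker's file

before = nearest_label(clean, payload)
after = nearest_label(clean + poison, payload)
print(before, after)
```

With the clean data the payload sits closest to a malware sample. After a single planted record, its closest neighbour is the mislabeled one, so the verdict flips.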

When a model relies on open-source data, a hacker can discreetly insert wrong information that the human brain won’t detect as malicious. Humans would disregard that info, but AI will internalize it. That, in turn, opens loopholes in software or even in face recognition.

Companies Are Not Ready for This

By 2028, predictions point to the global AI cybersecurity market reaching $35 billion. Companies and governments are using AI for everything, from loan processing to facial recognition. Yet, they aren’t ready to “educate” the AI they’re using.

Even in huge corporations, the examples they can give to cybersecurity and malware AI will be severely limited. These examples will also need to rely on open-source catalogs. Why is this bad? It’s like letting your child receive their education exclusively from the internet. Not a good idea.

To ensure that the AI will come to the right conclusion, you need to be certain that the received data is clean and correct. Some solutions, such as the one proposed by OpenAI, pass all data through filters to curate it before it reaches the AI.
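As a rough illustration of what such a filter could look like, the Python sketch below drops any sample whose label disagrees with the majority label of its nearest neighbours. This is an assumed, generic sanitization heuristic and not a description of any vendor’s actual pipeline; the function name `filter_suspicious`, the data, and the choice of `k=3` are all hypothetical.

```python
from collections import Counter
from math import dist

def filter_suspicious(samples, k=3):
    """Keep only samples whose label matches the majority label
    of their k nearest neighbours."""
    kept = []
    for i, (features, label) in enumerate(samples):
        others = [s for j, s in enumerate(samples) if j != i]
        neighbours = sorted(others, key=lambda s: dist(s[0], features))[:k]
        majority, _ = Counter(lbl for _, lbl in neighbours).most_common(1)[0]
        if majority == label:
            kept.append((features, label))
    return kept

# Hypothetical incoming data: (features, label)
incoming = [
    ((1.0, 1.0), "benign"), ((1.2, 0.8), "benign"), ((0.9, 1.1), "benign"),
    ((5.0, 5.0), "malware"), ((5.2, 4.9), "malware"), ((4.8, 5.1), "malware"),
    ((5.1, 5.0), "benign"),  # mislabeled sample planted by an attacker
]

curated = filter_suspicious(incoming)
print(len(incoming), len(curated))
```

A filter like this catches an isolated bad label, but it would miss a coordinated attack that plants enough mislabeled samples to become the local majority, which is one reason curation alone is not a complete defense.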

This kind of AI cybersecurity will need a place in all future cybersecurity strategies. That will include constantly teaching and updating the AI to recognize new threats and how to react to them.

Still, as we know from parental filters, workarounds always exist. The only benefit is that AI isn’t naturally curious like people, so you can also hardcode it to allow or disallow certain information. However, that’ll make the machine learning process more costly and time-consuming.
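Hardcoding can be as simple as checking fixed rules before the learned verdict is consulted. The Python sketch below assumes a made-up setup in which files are identified by a hash string and the model returns "allow" or "block"; the list contents and the `decide` function are hypothetical.

```python
# Hypothetical hardcoded lists; real entries would be actual file hashes
DENY_HASHES = {"hash-of-known-malware"}
ALLOW_HASHES = {"hash-of-internal-tool"}

def decide(file_hash, model_verdict):
    """Hardcoded rules take precedence; the model's learned verdict
    is used only when no rule applies."""
    if file_hash in DENY_HASHES:
        return "block"
    if file_hash in ALLOW_HASHES:
        return "allow"
    return model_verdict

# Even if a poisoned model says "allow", the hardcoded rule still blocks
print(decide("hash-of-known-malware", "allow"))
print(decide("hash-of-unknown-file", "allow"))
```

The trade-off is the one described above: every rule has to be written and maintained by people, which adds cost and time on top of the machine learning process.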

The Future of AI Education

It might sound ridiculous from the current perspective, but we need to find a way to deeply educate AI connected to a wider system.

Preparing the AI in an enclosed system before releasing it to the world wide web is just as important as educating humans. Yet, we need to be more careful with AI. AI will have more access, will work much faster, and won’t really be able to mature and change the same way humans do.

Not to mention that we aren’t even sure if we ever want AI to mature! Many companies and enthusiasts believe that AI is a profitable resource that’ll benefit humanity. Yet, some of us have seen one too many sci-fi movies and know that this leads to The Singularity.

Some also argue that the tipping point is already here, but if that’s true, the discussion won’t be about cybersecurity anymore. Instead, it’ll shift to human rights, and these discussions seem closer than we’d like to imagine.