Security is constantly evolving, especially as technology changes so quickly. It is often cited as one of the main reasons companies don’t fully adopt the cloud, even though many cloud services can provide more security than traditional servers.

Of course, Amazon Web Services can be a very secure or a very dangerous environment, depending on the measures you take. While AWS implements many new security measures, it is still quite possible to hack someone’s account.

So, there are some AWS security best practices that you should follow to help make sure that your account remains secure.

Whose responsibility is it?

Should you or Amazon bear the primary responsibility for your AWS account’s security? Well, as with almost any technology, both you and the vendor should do everything in your power to stay secure.

AWS released a white paper (PDF) regarding security best practices, and in it they discuss their “shared responsibility model.”

The name is self-explanatory, but they essentially outline the fact that AWS does its part by providing “a global secure infrastructure and foundation compute, storage, networking and database services, as well as higher level services.” They also offer additional services and features related to security that customers can take advantage of.

Yet some responsibility also lies with the customer, who needs to follow AWS security best practices. As the white paper puts it, “AWS customers are responsible for protecting the confidentiality, integrity, and availability of their data in the cloud, and for meeting specific business requirements for information protection.”

AWS security best practices

- Use CloudTrail and tighten the configurations

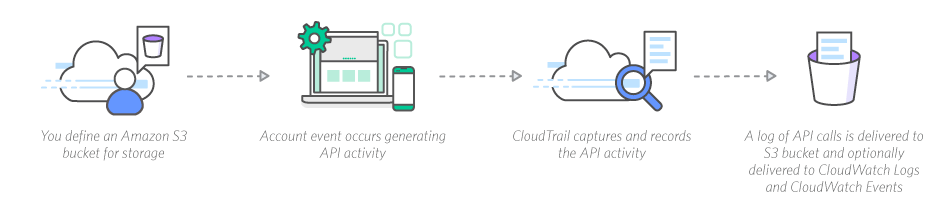

CloudTrail is a great tool offered by AWS to track user activity and API usage. It lets you log, continuously monitor, and retain events so you can see exactly which API calls are being made across your AWS infrastructure.

The log files are stored in an S3 bucket, and you can easily see if there are any unusual API calls, including those made through the AWS Management Console, AWS SDKs, command line tools, and other AWS services.

However, just running CloudTrail with its default configuration isn’t enough. An attacker who gains access to your account can delete the log files. To protect yourself, you can change a few things.

The first step is to require multifactor authentication to delete anything from the S3 bucket that stores your logs (S3 calls this feature MFA Delete). This way, attackers cannot simply delete your logs. You can also choose to encrypt all the log files, both in flight and at rest.

You can also turn on CloudTrail log file validation so you can tell whether a log file has been changed, and enable access logging on your CloudTrail S3 bucket so you have a record of everyone who requests access, making it easier to identify anything unusual. Lastly, make sure CloudTrail is tracking every AWS region so there aren’t any blind spots.
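To make those settings concrete, here is a minimal sketch of a check you could run against a trail's configuration. It assumes the trail is described by a dict shaped like one entry of the `trailList` returned by CloudTrail's DescribeTrails API; the function name `audit_trail` and the example trail are hypothetical, and the check covers only the three settings named above, not the S3 bucket configuration.

```python
from typing import Any, Dict, List


def audit_trail(trail: Dict[str, Any]) -> List[str]:
    """Return a list of hardening gaps for one CloudTrail trail description."""
    findings = []
    if not trail.get("IsMultiRegionTrail", False):
        findings.append("trail does not cover all regions")
    if not trail.get("LogFileValidationEnabled", False):
        findings.append("log file validation is off")
    if not trail.get("KmsKeyId"):
        findings.append("log files are not encrypted with a KMS key")
    return findings


# Hypothetical trail description, used only for illustration.
example_trail = {
    "Name": "example-trail",
    "S3BucketName": "example-cloudtrail-logs",
    "IsMultiRegionTrail": True,
    "LogFileValidationEnabled": False,
}

for finding in audit_trail(example_trail):
    print(finding)
```

In practice you would feed this the output of a real DescribeTrails call; keeping the check separate from the API call makes it easy to test offline.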

This monitoring should also be paired with using the root account as little as possible. Then, you can set up an alarm that fires any time someone attempts to log in as root.
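As a rough illustration of what such an alarm looks for, the sketch below filters CloudTrail records for console sign-ins by the root user. It assumes each record is a dict with the `eventName` and `userIdentity.type` fields that CloudTrail writes for sign-in events; the function names and the sample records are made up for the example, and a real setup would more likely use a metric filter and alarm than a script.

```python
from typing import Any, Dict, List


def is_root_console_login(record: Dict[str, Any]) -> bool:
    """Return True if a CloudTrail record is a console sign-in attempt by root."""
    identity = record.get("userIdentity") or {}
    return record.get("eventName") == "ConsoleLogin" and identity.get("type") == "Root"


def root_login_attempts(records: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Filter a batch of CloudTrail records down to root console sign-ins."""
    return [record for record in records if is_root_console_login(record)]


# Hypothetical, heavily trimmed records used only for illustration.
sample_records = [
    {"eventName": "ConsoleLogin", "userIdentity": {"type": "Root"},
     "sourceIPAddress": "203.0.113.10"},
    {"eventName": "ConsoleLogin", "userIdentity": {"type": "IAMUser"},
     "sourceIPAddress": "198.51.100.7"},
    {"eventName": "ListBuckets", "userIdentity": {"type": "Root"},
     "sourceIPAddress": "203.0.113.10"},
]

for record in root_login_attempts(sample_records):
    print("root console login attempt from", record["sourceIPAddress"])
```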

The tools provided by Amazon greatly assist in implementing AWS security best practices, yet you should also consider investing in application-monitoring tools that are able to analyze all of your data, including the log event streams. These security analytics can help you spot suspicious behavior before it’s too late.

- Take advantage of Identity and Access Management and multifactor authentication

This one might seem too obvious to mention, but it’s important and often overlooked when thinking about AWS security best practices. Any account that has a console password should have time-based multifactor authentication (MFA) enabled.

You should also use AWS’s Identity and Access Management (IAM) in a way that’s best for your security. IAM gives you greater control by allowing you to manage access to all services and resources for your users or groups. There are a number of best practices that are outlined by AWS.

For example, when using IAM, you should attach policies to groups or roles, not to individual users. This helps prevent a user from accidentally gaining permissions or privileges you don’t want them to have.

Using IAM, you can grant only the minimal access privileges each user needs, and you can provision access to a resource through roles rather than handing out a set of credentials. Both of these practices will help protect your resources immensely.

As mentioned before, make sure each IAM user has multifactor authentication activated to keep out malicious attempts to gain access to your account. To check this, simply open the IAM console and select “Users.” Each user with MFA enabled will have a checkmark in the “MFA Device” column.

You can also choose to use IAM to deny access to a user’s AWS account until they have successfully completed the MFA setup.
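One common way to express that rule is a policy statement that denies everything except MFA setup whenever the request was not authenticated with MFA. The sketch below builds such a policy document as a Python dict. The `aws:MultiFactorAuthPresent` condition key and the listed IAM actions are real, but the exact list of exempted actions is an assumption you would tune, and the function name and `Sid` are hypothetical.

```python
import json
from typing import Any, Dict


def build_require_mfa_policy() -> Dict[str, Any]:
    """Build a policy that blocks everything but MFA setup until MFA is used."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptMfaSetupWithoutMfa",
                "Effect": "Deny",
                # Actions a user still needs in order to enroll an MFA device.
                "NotAction": [
                    "iam:CreateVirtualMFADevice",
                    "iam:EnableMFADevice",
                    "iam:GetUser",
                    "iam:ListMFADevices",
                    "iam:ListVirtualMFADevices",
                    "iam:ResyncMFADevice",
                    "sts:GetSessionToken",
                ],
                "Resource": "*",
                # Applies when the request was made without MFA.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


print(json.dumps(build_require_mfa_policy(), indent=2))
```

Note that a deny statement grants nothing by itself, so users would still need separate allow statements for the MFA setup actions and for their normal work.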

Lastly, make sure you enforce a strong password policy, require passwords to be changed after a certain number of days, and regularly rotate IAM access keys.
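For the key rotation part, a small sketch like the following can flag keys that are overdue. It assumes each key is a dict with the `AccessKeyId`, `Status`, and `CreateDate` fields that IAM's ListAccessKeys API returns; the 90-day window is an assumption rather than an AWS default, and the key IDs are placeholders.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List

MAX_KEY_AGE_DAYS = 90  # assumed rotation window, pick what suits your policy


def keys_due_for_rotation(
    keys: List[Dict[str, Any]],
    now: datetime,
    max_age_days: int = MAX_KEY_AGE_DAYS,
) -> List[str]:
    """Return the IDs of active access keys older than the rotation window."""
    cutoff = now - timedelta(days=max_age_days)
    return [
        key["AccessKeyId"]
        for key in keys
        if key["Status"] == "Active" and key["CreateDate"] < cutoff
    ]


# Hypothetical key metadata, used only for illustration.
example_keys = [
    {"AccessKeyId": "EXAMPLE-OLD-KEY", "Status": "Active",
     "CreateDate": datetime(2023, 1, 15, tzinfo=timezone.utc)},
    {"AccessKeyId": "EXAMPLE-NEW-KEY", "Status": "Active",
     "CreateDate": datetime(2023, 12, 1, tzinfo=timezone.utc)},
    {"AccessKeyId": "EXAMPLE-DISABLED-KEY", "Status": "Inactive",
     "CreateDate": datetime(2022, 6, 1, tzinfo=timezone.utc)},
]

fixed_now = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(keys_due_for_rotation(example_keys, fixed_now))
```

Passing `now` in explicitly keeps the check deterministic, which makes it easier to test.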

- Know your databases

With Amazon, you have the option of using a number of different databases, such as Amazon RDS, DynamoDB, or ElastiCache. For storage, you can also use Amazon S3 or Elastic Block Store (EBS).

How can you make sure your data stays secure in a database or data store? AWS security best practices regarding databases should focus on encryption. If you use EBS or Amazon Relational Database Service (RDS), make sure to encrypt your data if it isn’t already encrypted at the storage level.
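A quick way to find gaps is to scan your resource descriptions for the encryption flags. The sketch below assumes RDS instances are dicts with `DBInstanceIdentifier` and `StorageEncrypted` (as in DescribeDBInstances output) and EBS volumes are dicts with `VolumeId` and `Encrypted` (as in DescribeVolumes output); the function name and sample data are hypothetical.

```python
from typing import Any, Dict, List


def unencrypted_resources(
    db_instances: List[Dict[str, Any]],
    volumes: List[Dict[str, Any]],
) -> List[str]:
    """List RDS instances and EBS volumes that are not encrypted at rest."""
    findings = []
    for db in db_instances:
        if not db.get("StorageEncrypted", False):
            findings.append("rds:" + db["DBInstanceIdentifier"])
    for volume in volumes:
        if not volume.get("Encrypted", False):
            findings.append("ebs:" + volume["VolumeId"])
    return findings


# Hypothetical descriptions, used only for illustration.
example_dbs = [
    {"DBInstanceIdentifier": "orders-db", "StorageEncrypted": True},
    {"DBInstanceIdentifier": "legacy-db", "StorageEncrypted": False},
]
example_volumes = [
    {"VolumeId": "vol-example1", "Encrypted": False},
    {"VolumeId": "vol-example2", "Encrypted": True},
]

print(unencrypted_resources(example_dbs, example_volumes))
```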

If using Redshift, you should turn on its “audit logging in order to support auditing and post-incident forensic investigations for a given database.” Also, lower your risk of a man-in-the-middle attack in Redshift by enabling the require_ssl parameter in all of your clusters.

Also, restrict network access to your RDS instances rather than leaving them open to anyone. This helps protect you against things like brute force or DoS attacks.
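One thing worth checking here is whether a security group opens a database port to the whole internet. The sketch below assumes ingress rules shaped like the `IpPermissions` entries returned by EC2's DescribeSecurityGroups API; the set of database ports, the function name, and the sample rules are assumptions for illustration, and only IPv4 ranges are inspected.

```python
from typing import Any, Dict, List

# Assumed default ports: MySQL, PostgreSQL, SQL Server, Oracle.
DB_PORTS = {3306, 5432, 1433, 1521}


def exposed_database_ports(ip_permissions: List[Dict[str, Any]]) -> List[int]:
    """Return database ports that the rules open to 0.0.0.0/0."""
    exposed = set()
    for rule in ip_permissions:
        ranges = rule.get("IpRanges", [])
        if not any(r.get("CidrIp") == "0.0.0.0/0" for r in ranges):
            continue
        from_port = rule.get("FromPort")
        to_port = rule.get("ToPort")
        if from_port is None or to_port is None:
            # Rules that allow all traffic carry no port range.
            exposed.update(DB_PORTS)
        else:
            exposed.update(p for p in DB_PORTS if from_port <= p <= to_port)
    return sorted(exposed)


# Hypothetical rules, used only for illustration.
example_rules = [
    {"IpProtocol": "tcp", "FromPort": 3306, "ToPort": 3306,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
     "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
]

print(exposed_database_ports(example_rules))
```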

- Make sure your virtual private clouds are secure

Your virtual infrastructure should be protected. One way to do this, suggested by DZone, is to modify the default VPC configuration. You would modify it by “splitting each availability zone into a public subnet and a private subnet.”
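To picture what that split looks like, here is a small planning sketch that carves a VPC address range into one public and one private subnet per availability zone. It only does the address arithmetic with Python's standard `ipaddress` module and creates nothing in AWS; the CIDR block, zone names, and /24 subnet size are assumptions chosen for the example.

```python
import ipaddress
from typing import Dict, List


def plan_subnets(
    vpc_cidr: str,
    zones: List[str],
    new_prefix: int = 24,
) -> Dict[str, Dict[str, str]]:
    """Assign one public and one private subnet block to each zone."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = {}
    for zone in zones:
        # Take the next two free blocks for this zone.
        plan[zone] = {"public": str(next(blocks)), "private": str(next(blocks))}
    return plan


# Hypothetical VPC range and zones, used only for illustration.
plan = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])
for zone, subnets in plan.items():
    print(zone, subnets)
```

Resources that must be reachable from the internet would go in the public subnets, while databases and internal services would sit in the private ones.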

There are numerous ways to do this, but the simplest option is to create NAT gateways directly in your VPC. AWS can automatically update the route tables and generate one VPC NAT gateway per subnet. AWS will also automatically allocate and assign Elastic IPs for each new gateway.

If you don’t want AWS automatically doing this for you, you can also create your own NAT instances.

Anything else?

As AWS offers so many different possibilities, it also has a plethora of security best practices. The practices outlined above are extremely useful and necessary for general users. However, if you’d like information on more specific practices you can use to make your security top-of-the-line, check out AWS’s own white paper on the subject.

Photo credit: Pixabay