Introduction

In a simplified view, the tasks a computer performs are broken down into mathematical operations, which are carried out by the computer’s CPU. For this reason, the CPU needs to be a workhorse that handles all types of mathematical operations well, so that every task gets reasonable performance. Even in CPUs with multiple cores, the cores are still “generic” and not optimized for particular complex operations.

Most of this work is performed within the Arithmetic Logic Unit (ALU). The ALU is what allows the computer to add, subtract, and perform basic logical operations such as AND and OR. These operations are performed using logic gates, and by combining logic gates in interesting and complex ways the CPU is able to accomplish complex calculations. However, because the CPU does not know beforehand which operations will be sent to it, it has to focus on providing good building blocks that allow for virtually any type of calculation.

Figure 1: A full adder diagram. This is what allows an ALU to add values together.
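To make the idea of combining gates concrete, here is a minimal sketch of a full adder built only from XOR, AND and OR, chained into a ripple-carry adder. The function names and the 8-bit default width are illustrative assumptions; a real ALU does this in hardware, and usually with faster carry schemes.

```python
def full_adder(a, b, carry_in):
    """Add three single bits using only XOR, AND and OR gates."""
    partial = a ^ b                          # XOR gate
    sum_bit = partial ^ carry_in             # XOR gate
    carry_out = (a & b) | (partial & carry_in)  # two AND gates, one OR gate
    return sum_bit, carry_out


def ripple_carry_add(x, y, width=8):
    """Add two unsigned integers by chaining `width` full adders.

    Returns (result, final_carry); the result wraps around at 2**width.
    """
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1
        b = (y >> i) & 1
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result, carry


print(ripple_carry_add(5, 3))    # (8, 0)
print(ripple_carry_add(255, 1))  # (0, 1): 8-bit overflow, carry set
```

Each bit position needs its own full adder, and each adder waits for the carry from the one before it, which is one reason general-purpose building blocks are flexible but not always fast.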

But can we do better? What if we took certain tasks with complex mathematical operations and, instead of sending those operations to the CPU, sent them to specialized hardware built specifically to complete them quickly and efficiently? This is hardware acceleration. While hardware acceleration can be done with specialized processors like a GPU, it can also be accomplished with field-programmable gate arrays (FPGAs) for more specialized scenarios.

The Graphical Example

One of the most common examples of hardware acceleration is the graphics processing unit, or GPU. Modern computers perform a tremendous number of graphical tasks. From video conferencing to gaming to the animations built into the OS, great performance depends on great graphics processing capabilities.

The GPU is designed specifically for the mathematical operations that advanced graphics capabilities require. One such capability, available in most graphics cards, is anti-aliasing. In this context, anti-aliasing smooths out jagged edges so that an image looks more natural. Although correct, this explanation doesn’t really do anti-aliasing justice. Within the realm of digital signal processing, anti-aliasing is a well-known and often-used technique. So even though the GPU was designed for graphics processing, we could in principle use it for other operations that require anti-aliasing.
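The signal processing view of anti-aliasing can be sketched in a few lines: before reducing the sample rate, filter out the detail that the lower rate cannot represent. The example below uses a box (averaging) filter, which is the simplest possible low-pass filter and only an assumption for illustration; it is not what any particular GPU implements, and the function names are made up for this sketch.

```python
def decimate_naive(samples, factor):
    """Keep every `factor`-th sample with no filtering (prone to aliasing)."""
    return samples[::factor]


def decimate_antialiased(samples, factor):
    """Average each block of `factor` samples before keeping one value.

    The averaging acts as a crude low-pass (anti-aliasing) filter.
    """
    out = []
    for start in range(0, len(samples) - factor + 1, factor):
        window = samples[start:start + factor]
        out.append(sum(window) / factor)
    return out


# A signal that alternates as fast as the sample rate allows.
signal = [0.0, 1.0] * 4

print(decimate_naive(signal, 2))        # [0.0, 0.0, 0.0, 0.0]
print(decimate_antialiased(signal, 2))  # [0.5, 0.5, 0.5, 0.5]
```

The naive version reports a flat signal at 0.0, which misrepresents the input. The filtered version reports the true average level of 0.5, which is the same effect that softens jagged edges in an image.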

Ideally, our GPU supports a standard API like OpenGL or Direct3D. Direct3D is a proprietary Microsoft API for Windows operating systems (and the Xbox), while OpenGL is an open, cross-language, cross-platform API. Both APIs expose the two-dimensional and three-dimensional mathematical operations of GPUs and let software developers use these advanced capabilities easily. If the GPU supports one of them, a programmer could use that API to send anti-aliasing operations to the GPU. This would speed up not only our graphics processing but also our digital signal processing.

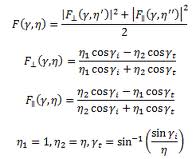

Another example of complex mathematical operations that a GPU is optimized for is the Fresnel equations, which describe how light reflects off different surfaces. GPUs can process these equations quickly and efficiently. Many modern operating systems, like OS X and Windows 7, use reflections throughout the OS. On computers that have a GPU, these calculations are hardware accelerated, which provides better performance and frees the CPU to do other calculations.

Figure 2: Fresnel equations. Significantly more complex than addition.
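For a sense of the arithmetic involved, here is a minimal sketch of the Fresnel reflectance for unpolarized light at a boundary between two transparent materials. The function name and the refractive indices in the usage lines are illustrative assumptions; real renderers typically evaluate this (or a cheaper approximation of it) per pixel on the GPU.

```python
import math


def fresnel_reflectance(n1, n2, theta_i):
    """Fraction of unpolarized light reflected at an n1 -> n2 boundary.

    theta_i is the angle of incidence in radians, measured from the normal.
    """
    # Snell's law gives the sine of the transmission angle.
    sin_t = n1 / n2 * math.sin(theta_i)
    if abs(sin_t) >= 1.0:
        return 1.0  # total internal reflection
    cos_i = math.cos(theta_i)
    cos_t = math.sqrt(1.0 - sin_t * sin_t)
    # Reflectance for s- and p-polarized light.
    r_s = ((n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)) ** 2
    r_p = ((n1 * cos_t - n2 * cos_i) / (n1 * cos_t + n2 * cos_i)) ** 2
    return 0.5 * (r_s + r_p)


# Air (n = 1.0) to glass (n = 1.5, an assumed typical value).
head_on = fresnel_reflectance(1.0, 1.5, 0.0)
grazing = fresnel_reflectance(1.0, 1.5, math.radians(85))
print(round(head_on, 4), grazing > head_on)
```

Even this small function needs several trigonometric calls, a square root and a handful of divisions for a single ray, which hints at why doing it for every pixel benefits from dedicated hardware.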

Regular Expressions

One area of hardware acceleration of particular interest in the networking context is the evaluation of regular expressions. Spam detection uses regular expressions quite extensively. A spam detection engine will run many rules (possibly thousands) against each incoming mail message to determine whether it is spam or legitimate, and most of these rules contain regular expressions.

A regular expression is a way to match strings of text. It is written in a special syntax that a regular expression engine understands, with symbols to match wildcard characters, the beginning of a line, the end of a line, and so on. In the context of spam filtering, if you wanted to write a rule where all mail from any Gmail account is considered spam, you could write something like .*@gmail\.com, where the period matches any character and the asterisk allows that match to repeat any number of times. The backslash before the second period makes it match a literal dot; left unescaped, it would match any character. Alternatively, if you wanted to match any address that had a letter a through h immediately before the @ symbol, you could write something like .*[a-h]@gmail\.com, where the square brackets match any single character listed inside them, in this case a through h inclusive.
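The two patterns can be tried out with Python's standard `re` module. This is only a sketch: the helper name `is_match` and the sample addresses are made up for illustration, and a real spam filter would use its own rule syntax.

```python
import re

# Any Gmail address: ".*" is any run of characters, "\." is a literal dot.
any_gmail = re.compile(r".*@gmail\.com")

# Gmail addresses whose last character before "@" is a through h.
a_to_h = re.compile(r".*[a-h]@gmail\.com")


def is_match(pattern, address):
    """Return True if the whole address matches the pattern."""
    return pattern.fullmatch(address) is not None


print(is_match(any_gmail, "someone@gmail.com"))    # True
print(is_match(any_gmail, "someone@example.com"))  # False
print(is_match(a_to_h, "alice@gmail.com"))         # True  ("e" precedes "@")
print(is_match(a_to_h, "larry@gmail.com"))         # False ("y" precedes "@")

# Without the backslash, "." also matches characters other than a dot.
print(re.fullmatch(r".*@gmail.com", "x@gmailXcom") is not None)  # True
```

The last line shows why the dot needs escaping: the unescaped pattern accepts a string that is not a Gmail address at all.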

Regular expressions are known to be quite computationally expensive. With spam detection running many regular expressions over every single mail message received, the computational cost is immense. Hardware acceleration can significantly speed up the evaluation of these regular expressions and free the CPU to do other things.
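To see where the cost comes from, consider a toy rule engine. Everything in it is hypothetical: the rule names, patterns, scores and threshold are invented for this sketch and are not taken from any real spam filter. The point is only that every message is scanned once per rule.

```python
import re

# Hypothetical rules: (name, compiled pattern, score).
RULES = [
    ("free_money", re.compile(r"free\s+money", re.IGNORECASE), 2.5),
    ("all_caps_subject", re.compile(r"^Subject: [A-Z !]{10,}$", re.MULTILINE), 1.5),
    ("ip_address_link", re.compile(r"https?://\d+\.\d+\.\d+\.\d+"), 2.0),
]
THRESHOLD = 4.0  # assumed cut-off for this sketch


def score_message(text):
    """Run every rule against the message; return (total score, rule names hit)."""
    total = 0.0
    hits = []
    for name, pattern, score in RULES:
        if pattern.search(text):
            total += score
            hits.append(name)
    return total, hits


def is_spam(text):
    total, _ = score_message(text)
    return total >= THRESHOLD


spam = "Subject: WIN BIG NOW!!!\n\nGet free money at http://192.168.0.1/claim"
ham = "Subject: Lunch tomorrow\n\nAre you free at noon?"
print(is_spam(spam), is_spam(ham))  # True False
```

With three rules the loop is trivial, but the work grows as rules times messages, so thousands of rules applied to a busy mail server add up quickly. That multiplication is the workload a regular expression accelerator would take off the CPU.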

Compression

Another area of hardware acceleration of particular interest in an enterprise network context is data compression. For an enterprise network, faster compression via hardware acceleration can be beneficial in a couple of scenarios.

First, let’s say you have a distributed network that transfers large amounts of data over long distances; perhaps you have one office in New York and another in Los Angeles. Transferring a lot of data may take a long time, or it may be quite expensive. Compression allows you to send less data, which is then decompressed at the receiving end to recover the original. This reduces the amount of data sent, but compressing large amounts of data is itself time-consuming. Specialized hardware designed to compress data would save a significant amount of time. With the proper hardware setup and applications, this could all happen transparently to the user.
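The round trip described above can be sketched in software with Python's standard `zlib` module. The payload here is made-up, highly repetitive data, so the ratio it achieves is not representative of real traffic, and a hardware accelerator would replace the `compress` and `decompress` calls rather than use this library.

```python
import zlib

# Made-up, highly repetitive payload standing in for data sent between offices.
payload = b"quarterly report row\n" * 5000

# Sender side: compress before transmitting (6 is a mid-range compression level).
compressed = zlib.compress(payload, 6)

# Receiver side: decompress to recover the original bytes exactly.
restored = zlib.decompress(compressed)

print(len(payload), len(compressed), restored == payload)
```

The compression call is the CPU-heavy step, and it is the part that dedicated compression hardware is meant to offload.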

Second, if you’re running an enterprise network, you should be backing up much of the data on it. Backups can take up a significant amount of space, and while storage is relatively inexpensive, it’s always a good idea to use it efficiently. Compression allows you to store more data in the same amount of storage space. Hardware acceleration for frequent compression and backups will provide significantly better performance.

Hardware acceleration seems to be becoming more and more popular, much as multi-core processors are. My opinion is that as the number of cores in a computer moves into the dozens, we’ll see certain cores optimized for specialized complex mathematical operations like those I’ve described above. With cores like this present, the OS would recognize these complex operations and send them to those cores rather than to the “generic” cores. This would significantly increase performance (above and beyond the performance you’ll see with dozens of cores!). It would also add some interesting options when purchasing computers, as there could be models with specialized cores for graphics editing, for gaming, for programming, and so on. Ideally, a user would be able to choose which optimized cores go into a computer so as to customize it to their specific needs.