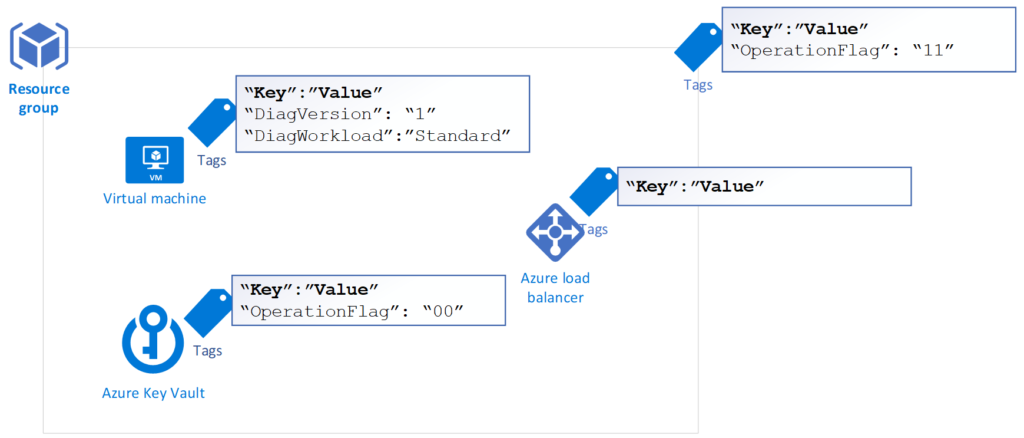

In a previous article, we went over the architecture of a runbook that we created to assign diagnostic settings to resources in Microsoft Azure. This Azure infrastructure solution is summarized in the screenshot below: tags at the resource group level force the diagnostic setting on all resources within it, but if a resource carries the same tags with different values, the resource-level values override the parent setting. Our focus in the previous article was on the functionality and the architecture of the solution. (You can find the original script here.) In this article, we will cover some key points of the script responsible for executing the planned solution.

Supporting files

The idea behind this script came from a script that I created in 2016 to assign mailbox profiles in Exchange Server 2016. The goal was to create a script that does not require all the cmdlets to be within the script. Instead, the script would read an answer file and use that information against the mailbox/resources.

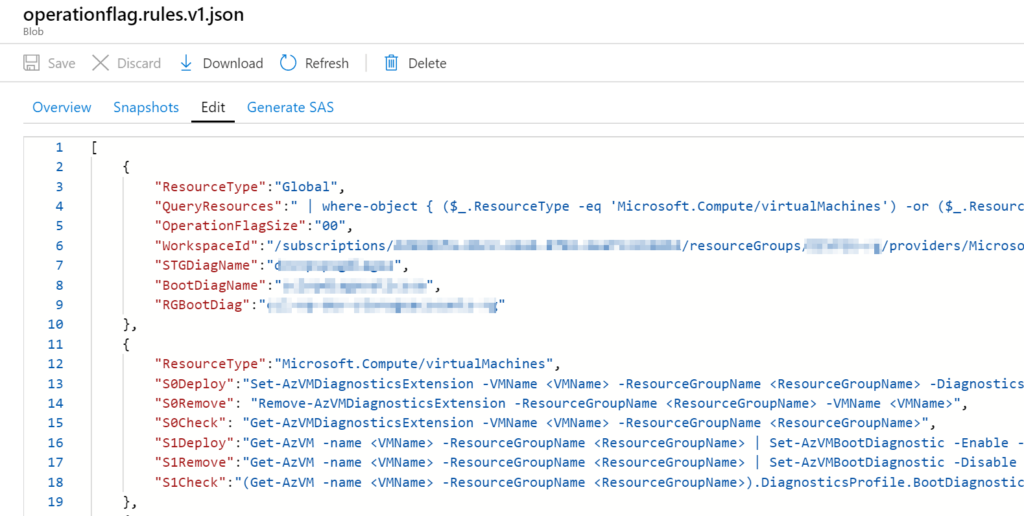

In this new cloud version of the script, we use a JSON file, kept in a Storage Account, to hold all the cmdlets and global settings that may be required at execution time. The JSON file carries its version in its name, and the first version is called operationflag.rules.v1.json.

All global variables are defined in the first {} of the JSON. For now, this area holds QueryResources (the query used to find the resource types covered by this file) and any other information that is shared across resources.

For any given resource, we start by defining the ResourceType. The first one is Microsoft.Compute/virtualMachines (aka VMs). Then we have commands for every bit that applies to that resource, and each of them has the prefix S<Integer>, where Integer is the bit position. Our script has to check for the feature and add or remove it based on the bit defined on the resource group or resource. Thus, we need to have all possible actions documented in our JSON file.

For example, the first bit for VMs is responsible for managing diagnostic settings, hence the Set-AzVMDiagnosticsExtension cmdlet. When the script wants to check if that feature is enabled, it uses S0Check. If the feature has to be activated, S0Deploy is used, and likewise S0Remove when the feature has to be removed.
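To make the naming scheme concrete, here is a minimal sketch of how a rules file of this shape could be read and queried. It is written in Python purely for illustration (the original script is PowerShell), the JSON layout is inferred from the description above, and the command strings, the BootDiagName value, and the find_command helper are hypothetical stand-ins rather than the contents of the real operationflag.rules.v1.json.

```python
import json

# Hypothetical excerpt: the first object is the global block, the following
# objects describe one resource type each. Command values are stand-ins.
RULES_JSON = """
[
  {
    "QueryResources": "query-that-selects-the-resources",
    "BootDiagName": "bootdiagstore"
  },
  {
    "ResourceType": "Microsoft.Compute/virtualMachines",
    "S0Check": "check-command-for-bit-0",
    "S0Deploy": "deploy-command-for-bit-0",
    "S0Remove": "remove-command-for-bit-0"
  }
]
"""


def find_command(rules, resource_type, bit, action):
    """Return the command stored under S<bit><action>, or None if absent."""
    key = f"S{bit}{action}"
    for entry in rules[1:]:  # rules[0] is the global block
        if entry.get("ResourceType") == resource_type:
            return entry.get(key)
    return None


rules = json.loads(RULES_JSON)
check_command = find_command(rules, "Microsoft.Compute/virtualMachines", 0, "Check")
```

With this layout, supporting a new feature bit means adding three more keys (for example S1Check, S1Deploy, and S1Remove) to the JSON file rather than changing the lookup code.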

The script was created to be dynamic, so it can be applied to any number of VMs. We use <String> placeholders that are replaced at execution time. For example, if we need to specify a boot diagnostic Storage Account name, we use the item from the Global settings and reference it as <BootDiagName>.

A function in the script finds those special placeholders (<>) and replaces them with the execution-time resource values and global variables.
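The replacement step can be sketched roughly as follows. This is a Python illustration, not the PowerShell function from the script: the fill_placeholders name, the Some-Cmdlet template, and the <VMName> placeholder are assumptions made for the example, while <BootDiagName> is the placeholder mentioned above.

```python
import re


def fill_placeholders(template, values):
    """Replace each <Name> with values[Name]; unknown names are left as-is."""
    def substitute(match):
        name = match.group(1)
        # Fall back to the original "<Name>" text when no value is known.
        return str(values.get(name, match.group(0)))

    return re.sub(r"<(\w+)>", substitute, template)


# Hypothetical command template and execution-time values.
template = "Some-Cmdlet -Name <VMName> -StorageAccountName <BootDiagName>"
values = {"VMName": "vm01", "BootDiagName": "bootdiagstore"}
filled = fill_placeholders(template, values)
# filled == "Some-Cmdlet -Name vm01 -StorageAccountName bootdiagstore"
```

Leaving unknown placeholders untouched is a design choice of this sketch: an unresolved <Name> stays visible in the final command, which makes a missing value easier to spot than an empty string would.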

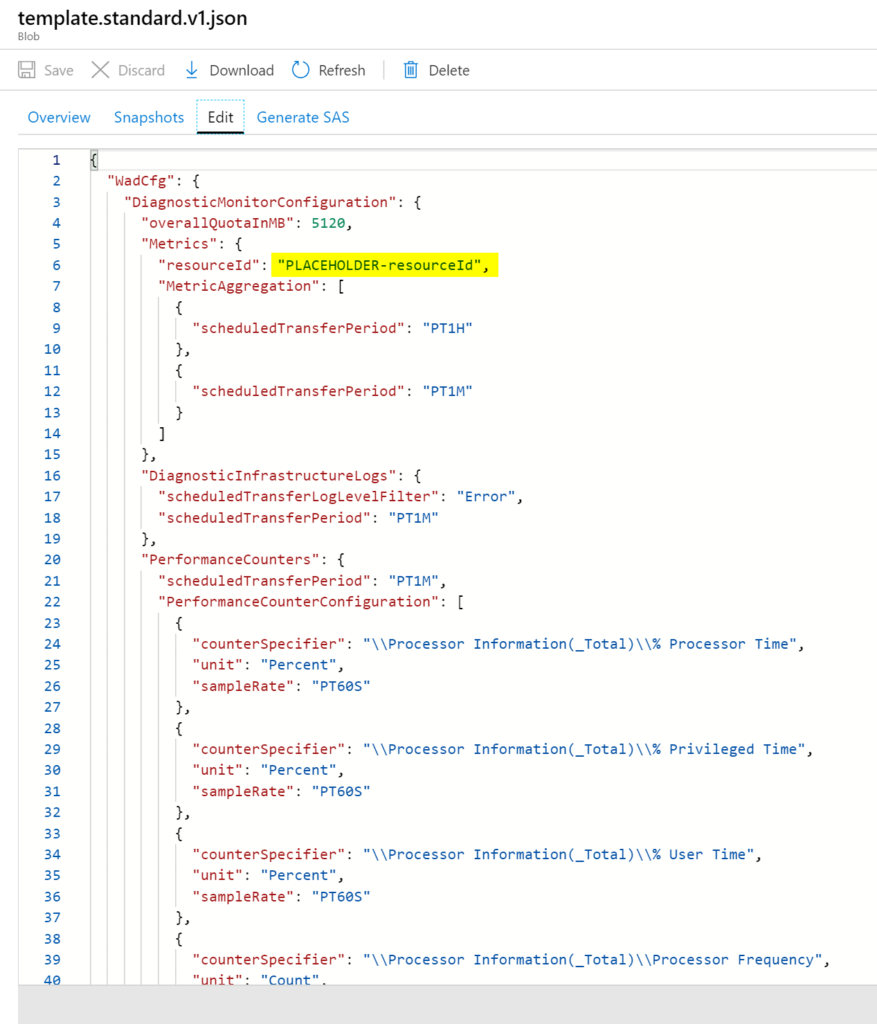

The second file is used only for virtual machines. It is a JSON file that configures the diagnostic settings in a VM. There is a catch here: the file must be unique for every single VM that we want to enable, because it requires the ResourceID of the given VM within the configuration file.

We overcome this issue by using the PLACEHOLDER-resourceId string in the configuration file. At execution time, the script replaces that string with the actual ResourceId and then enables diagnostic settings accordingly.
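As a rough illustration of that substitution, the sketch below renders a per-VM configuration from a shared template. It is written in Python rather than the script's PowerShell, the one-key template is a heavily trimmed, hypothetical stand-in for the real diagnostics configuration file, and the subscription ID and VM name are made up.

```python
import json

# Hypothetical, trimmed stand-in for the shared VM diagnostics template.
CONFIG_TEMPLATE = '{"resourceId": "PLACEHOLDER-resourceId"}'


def render_vm_config(template_text, resource_id):
    """Return a per-VM copy of the template with the real resource ID."""
    return template_text.replace("PLACEHOLDER-resourceId", resource_id)


# Made-up resource ID used only for this example.
resource_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/RG-MSLab/providers/Microsoft.Compute/virtualMachines/vm01"
)
rendered = render_vm_config(CONFIG_TEMPLATE, resource_id)
config = json.loads(rendered)  # still valid JSON after the substitution
```

Because the template itself is never modified, the same downloaded file can be rendered once per VM during a single run.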

Understanding the functions in the script

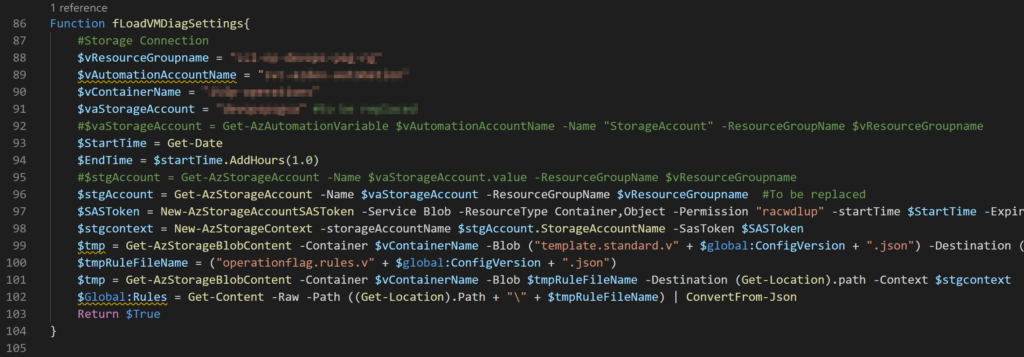

The first function that is worth mentioning is fLoadVMDiagSettings. This function will connect to a Storage Account and a specific container to download the JSON files (rules and VM diagnostics) to the local machine that is running the script. All the rules are loaded into the $Global:Rules variable.

We can see the Global Rules in action in the first lines of the script, where we fetch all resources of the types defined by the QueryResources item in the JSON file, as listed in the code below.

```powershell
$Resources = Invoke-Expression ("Get-AzResource -ResourceGroupName RG-MSLab " + $Rules[0].QueryResources)
```

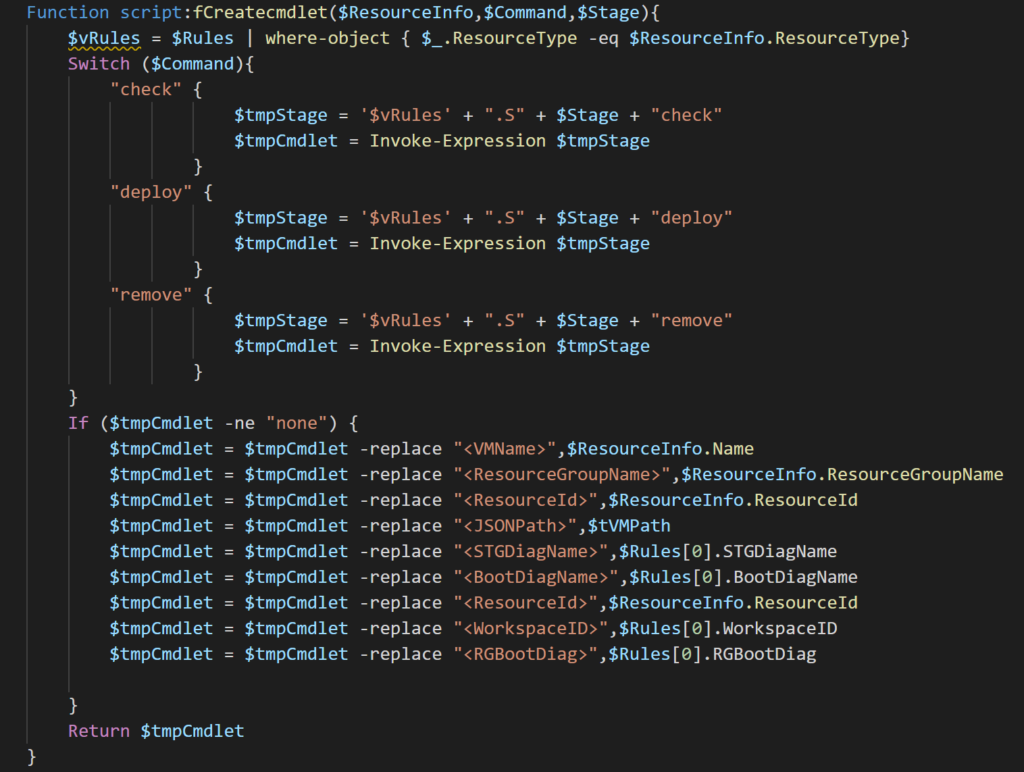

The function fCreatecmdlet builds all the cmdlets that are executed at runtime. It receives the action (check, deploy, or remove), the information about the resource in question, and the bit position within the OperationFlag.

The first block of the script concatenates several pieces of information to retrieve the cmdlet that we need to execute, but keep in mind that the cmdlet comes from the JSON file with <ExpressionName> placeholders. We solve that by replacing those placeholders with the actual values. Most of them come from the JSON files (anything written as $Rules[0].something comes from the Global section of our JSON file).

Note: If you introduce new placeholders, this function must be updated accordingly.

The function fTagAssessment is responsible for creating the tags when they do not exist, retrieving the tags from the resource group (if required), and performing some validation. It returns the current OperationFlag of any given resource.
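The precedence rule that this function relies on (a value on the resource overrides the value inherited from its resource group) can be sketched as below. This Python snippet covers only the precedence part, not tag creation or validation, and the effective_flag helper and the tag name OperationFlag are assumptions made for the example.

```python
def effective_flag(resource_group_tags, resource_tags, tag_name="OperationFlag"):
    """Return the resource's own tag value if present, else the group's value."""
    if tag_name in resource_tags:
        return resource_tags[tag_name]
    return resource_group_tags.get(tag_name)


# Hypothetical tag sets: vm01 overrides the group value, vm02 inherits it.
group_tags = {"OperationFlag": "10"}
vm01_flag = effective_flag(group_tags, {"OperationFlag": "01"})
vm02_flag = effective_flag(group_tags, {})
# vm01_flag == "01", vm02_flag == "10"
```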

The function fQuickCheck is critical for the performance of the script. It evaluates whether the current OperationFlag is being enforced at the resource level. If a feature must be removed, it stamps a 6; if the feature requires deployment, it stamps a 1; and if the bit does not apply, it stamps an x.
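A minimal sketch of that stamping logic is shown below, in Python rather than PowerShell. It assumes that the desired OperationFlag is a string with one character per bit ("1" for enabled, "0" for disabled, read left to right) and that the current state of each feature is already known as a boolean. Both the representation and the quick_check helper are assumptions for illustration, not the script's actual data format.

```python
def quick_check(desired_flag, actual_state):
    """Stamp each bit: '1' = deploy, '6' = remove, 'x' = nothing to do."""
    stamped = []
    for wanted, enabled in zip(desired_flag, actual_state):
        if wanted == "1" and not enabled:
            stamped.append("1")  # feature wanted but missing: deploy it
        elif wanted == "0" and enabled:
            stamped.append("6")  # feature present but not wanted: remove it
        else:
            stamped.append("x")  # already in the desired state
    return "".join(stamped)


# Bit 0 is wanted but missing, bit 1 is unwanted but present, bit 2 matches.
result = quick_check("101", [False, True, True])
# result == "16x"
```

The performance benefit comes from the x stamps: any bit that is already in the desired state can be skipped entirely by the later steps.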

The function Phase1 is responsible for consuming the current OperationFlag. The OperationFlag used by this function is the result of comparing the configuration at the Resource Group/Resource with the actual value configured at the resource level. (By the way, I agree the name is horrible! The reason for the name is that, initially, the script was planned to run one function for each bit in the OperationFlag.)
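One way to picture how such a compared flag could be consumed is the sketch below. It is a Python illustration under the assumption that the flag is a string of stamps (1 for deploy, 6 for remove, x for no action) read left to right, with the position giving the bit number; the plan_actions helper is hypothetical, and the output is simply the list of S<Integer> keys to look up in the rules file.

```python
# Map each stamp to the action suffix used in the rules file keys.
ACTION_BY_STAMP = {"1": "Deploy", "6": "Remove"}


def plan_actions(stamped_flag):
    """Translate a stamped flag into the rule keys that need to run."""
    plan = []
    for bit, stamp in enumerate(stamped_flag):
        action = ACTION_BY_STAMP.get(stamp)
        if action is not None:  # 'x' and any unknown stamp are skipped
            plan.append(f"S{bit}{action}")
    return plan


keys_to_run = plan_actions("16x")
# keys_to_run == ["S0Deploy", "S1Remove"]
```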

The logic behind this Azure infrastructure code

The reason for explaining the logic behind the script is to help cloud administrators who want to reuse the code from this article and add new capabilities. The goal is to touch the code as little as possible and rely on the JSON files to provide the cmdlets required at execution time. The same logic can be used in several areas, and OperationFlag is just one idea of how to combine code, JSON, and Storage Accounts to create a dynamic environment. If you are as excited as I am about Azure DevOps, you could trigger a storage copy of the file after it is committed to a repo.
