In this day and age of fake news, photoshopped pictures, and tech support scam calls, one can’t help but wonder sometimes what and who can actually be trusted. Throughout our history, certain things have been considered trustworthy. In a criminal trial, for example, it is relatively common for photographs, video footage, and documents to be presented as evidence. Sadly, recent advancements in artificial intelligence mean that we now have to be far more skeptical about the authenticity of documents and multimedia content. As AI gets better and is used by more people, AI fakes may become a huge problem.

The idea that a picture can be photoshopped is certainly nothing new. After all, Photoshop was first released in 1990, and we have all had plenty of time to get used to the idea that photographs can be digitally altered. Sometimes this is done for comedic effect, as with a particularly infamous picture of Hitler taking a selfie with his iPhone. In other cases, though, Photoshop is used in an attempt to deceive people, as was the case with these famous fakes. Thankfully, it’s usually pretty easy to spot a photoshopped image. Even so, Photoshop is sophisticated enough that in the right hands it can create some convincing images.

This raises a critically important point. Photoshop is only as good as the person who is using it. A person who is lacking in artistic talent and technical know-how is not going to be able to create a convincing fake image. The same cannot be said, however, for AI. A properly trained AI engine can synthetically create fake images that look completely real.

A perfect example of AI fakes

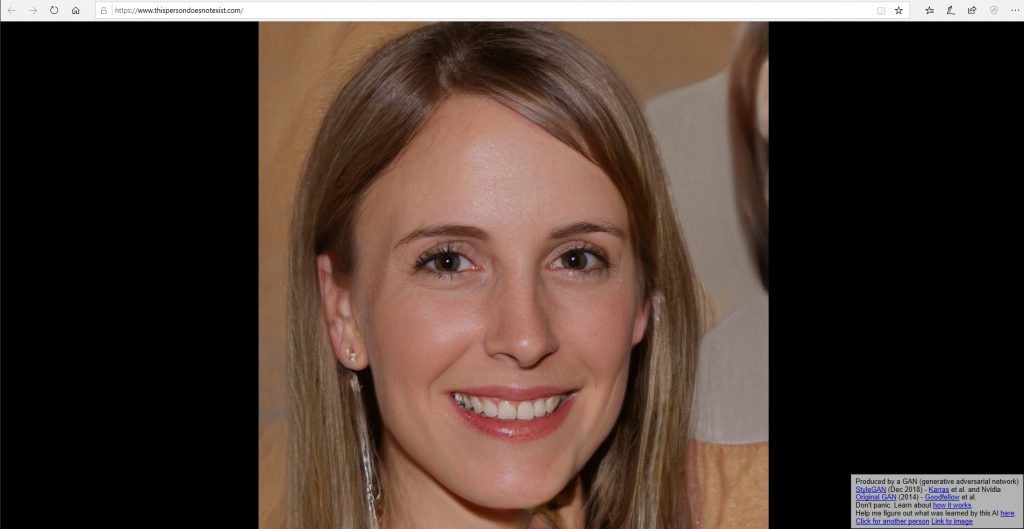

The best example that I have seen of this is a website called This Person Does Not Exist. The website, an example of which you can see in the accompanying screenshot, uses AI to generate portraits of people who do not actually exist. Every time that you refresh the browser, the AI engine displays another fake portrait.

This person does not actually exist. The photo was created by an AI engine. It is a great example of AI fakes that look real.

The thing that I found so interesting about This Person Does Not Exist is that the AI engine is actually producing more than just a human face. If you take the time to look at a few different portraits, you will notice that each one has a unique background. In addition, the AI engine is smart enough to put in other details such as jewelry, glasses, varying clothing and hairstyles, different poses, and, on occasion, even minor defects. For example, the AI engine will occasionally generate a portrait of someone who has a facial scar, acne, a minor dental problem, or a Band-Aid. Some of the computer-generated portraits look like professional models, while others look like drug addicts. There are images of elderly people and children. Some of the fake people look happy, while others look sad.

While it absolutely blows my mind that an AI engine is able to produce such realistic and yet widely varied photographic fakes, I can’t help but wonder about the implications of the technology. Photoshop got people in the habit of questioning whether a photograph is real, but the technology used by This Person Does Not Exist could eventually lead people to take a “fake until proven real” stance toward photos.

As impressive as This Person Does Not Exist may be, it is not the only example of computers being used to generate realistic photographs. A few years ago, Nvidia set out to determine whether the Apollo moon landings were real. The company created a photorealistic digital model of the Apollo 11 landing site and used ray tracing to try to replicate some of the more iconic photographs, in order to determine whether those photographs had been faked. Spoiler alert: The moon landings were real.

What about video?

Although various forms of photographic fakery have existed for what seems like forever, video has historically been considered more trustworthy. Sure, Hollywood can fake almost anything, but for the average person it is much easier to fake a photograph than to fake a video. Thanks to AI and other technologies, however, video is becoming far less trustworthy as well.

The degree to which videos could be faked was brought to light in early 2018 when Pornhub received national media attention for its announcement that it was banning deep fakes. The term deep fakes (at least as Pornhub uses it) refers to the practice of using an AI engine to replace a porn star’s face with the face of a celebrity.

Although the porn industry is known for pioneering the use of new technology, deep fakes are by no means limited to porn. Back in 2018, BuzzFeed released a video of Barack Obama saying some things that I am guessing he would probably never say in real life. The video looks authentic, but the person who created the video was not able to accurately imitate Obama’s voice. Therefore, anyone who watches the video will immediately recognize that it is a fake.

However, AI might have a solution for that as well. A company called Lyrebird claims to be able to take a sample of your voice and then use AI to create a text-to-speech engine that sounds like you. The company created voice avatars of both Trump and Obama. The sound quality isn’t quite good enough to fool anyone, but I can only imagine what might be possible in the next couple of years.

AI-based forgeries and AI fakes are not limited solely to pictures, video, and audio. AI can even be used to generate documents that are written in an author’s own unique style.

As an author, I personally find that technology to be absolutely terrifying (nobody wants to be replaced by a machine). Fortunately, the project’s creators also find it to be a little bit scary. In fact, the group that created the technology has refused to release it to the world out of concern for how it might be used.

In a demo, the technology, which is called GPT-2, analyzes a single paragraph. It can then create several additional paragraphs of text, essentially completing the story. This machine-generated text closely mimics the style of the original author. While my most immediate concern is obviously that of being put out of work by an AI, just imagine all of the ways that this sort of technology could potentially be abused. A dubious news outlet might use this AI engine to create a never-ending stream of fake news. A lazy employer might leave employee performance reviews to be written by AI. The technology could even take document forgery to the next level.

AI fakes could do real damage

Any one of the technologies that I have described could be abused to the point of doing irreparable harm to someone. In most cases, however, technology is not siloed. Multiple AI technologies could be brought together to create a very compelling fraud. Going forward, the IT industry will have to come up with a way of allowing people to distinguish between legitimate items and AI fakes. Digital cameras of the future might, for example, be designed to digitally sign photographs as a way of proving that a photograph has not been tampered with.
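To make the idea of a signed photograph a little more concrete, here is a minimal sketch in Python. It is only an illustration under some loud assumptions: the key, the function names, and the sample bytes are all hypothetical, and the example uses a keyed hash (HMAC) from the standard library as a stand-in. A real camera would more likely use an asymmetric signature, with the private key locked inside the device, so that anyone could verify a photo without being able to sign one.

```python
import hashlib
import hmac

# Hypothetical secret held inside the camera. This is an assumption for the
# sketch; a real design would more likely use an asymmetric key pair so that
# verifiers never hold the signing key.
CAMERA_KEY = b"example-camera-secret"


def sign_photo(image_bytes: bytes, key: bytes = CAMERA_KEY) -> str:
    """Return a hex tag computed over the exact bytes of the image."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify_photo(image_bytes: bytes, tag: str, key: bytes = CAMERA_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_photo(image_bytes, key)
    return hmac.compare_digest(expected, tag)


# Placeholder bytes standing in for a photo as captured by the camera.
original = b"raw image bytes as captured by the sensor"
tag = sign_photo(original)

# Any change to the bytes, however small, should make verification fail.
tampered = original + b" (edited)"
```

In this sketch, `verify_photo(original, tag)` succeeds and `verify_photo(tampered, tag)` fails, which is the property the article is pointing at: the tag vouches for the exact bytes that were signed. It says nothing about whether the scene in front of the lens was genuine.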

Featured image: Shutterstock