I write a lot of PowerShell scripts. Some of the scripts that I develop are for one-time tasks, while others get run on a daily basis. Whenever I build a script that is going to be run frequently, I like to build in a PowerShell script logging mechanism so that I can go back later on and make sure that the script has been doing what it is supposed to be doing. However, logging presents challenges of its own.

For one thing, there is the question of how best to log the data. Some people prefer to use the Start-Transcript cmdlet, while others prefer to use Out-File or something else. In my opinion, there is no one-size-fits-all logging solution. Every script is different, and so when it comes to PowerShell script logging, it’s best to output the data using whatever method makes the most sense for the task at hand.

The topic of which method should be used to log data has been hotly debated for some time now. However, one aspect of the PowerShell script logging discussion is often overlooked: log proliferation. The problem with building logging capabilities into frequently run scripts is that those scripts can, over time, generate an overwhelming volume of log data. If the logs are appended to an existing log file, you can eventually end up with a huge file. If you create a separate log file each time the script runs, you can end up with a large number of log files.

My advice is that, whenever possible, you should write each run's logging data to its own uniquely named file. Doing so makes it easy to automate the removal of old log files. Here’s how it works.

Creating unique file names

The first thing that you will need to do is to come up with a way of generating unique log file names. There are many different ways of accomplishing this. If there is a possibility that your script could run more than once a day, then you might consider basing the output file name on a random number. For scripts that run once a day, however, the easiest thing to do is to base the filename on the date.
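For scripts that may run several times a day, the random-number idea could be sketched roughly as follows. The function name New-RandomLogName, its parameters, and the six-digit range are illustrative assumptions rather than anything PowerShell defines. Because random numbers can repeat, the sketch checks the target folder and draws again if the name is already taken.

```powershell
# Hypothetical helper: returns a log file name built from a random number.
function New-RandomLogName {
    param(
        [string]$RootName = 'Log',   # placeholder; use your script's name
        [string]$Folder = '.'        # folder in which the name must be unused
    )
    do {
        # -Maximum is exclusive, so this always yields a six-digit number.
        $number = Get-Random -Minimum 100000 -Maximum 1000000
        $name = '{0}-{1}.txt' -f $RootName, $number
        # Draw again in the unlikely case that the name already exists.
    } while (Test-Path -Path (Join-Path -Path $Folder -ChildPath $name))
    return $name
}

# Example: a name such as Log-483920.txt (the digits differ on every call).
$FileName = New-RandomLogName -RootName 'Log' -Folder '.'
$FileName
```

If collisions are a real concern, a GUID from [guid]::NewGuid() is a common alternative to a random number.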

For the purposes of this example, let’s pretend that I want to create a daily log for a particular PowerShell script. If this were a real-life situation, I would probably create output files that reflected the script name (so that I would know which script created the log), and the current date. For the sake of example, I am going to use a short form version of the date, but if you are concerned that there is a chance that your script could be run more than once a day, you can incorporate the time into the filename.

Here is how I would create such a filename:

$RootName = 'Log '

$LogDate = Get-Date -Format d

$FileName = $RootName + $LogDate + '.txt'

The first line of code sets up a variable named $RootName, and assigns it a string of text that simply says Log. This is where you might use the name of your script if you were running this for real.

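The second line of code assigns the current date to a variable named $LogDate. Two things are worth mentioning here. First, you will notice that I have used the -Format parameter, followed by a lowercase d. This tells PowerShell to use the short date pattern of the current culture. On systems where that pattern contains slashes (M/d/yyyy in en-US, for example), the result is not a valid Windows file name, so a custom format string such as yyyyMMdd is the safer choice there. The available formatting options are listed in the documentation for the Get-Date cmdlet.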

The other thing worth mentioning is that it is also possible to use the $LogDate variable to write the date to your log file contents.

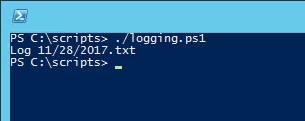

The last line of code creates a variable named $Filename that is based on the $RootName and $LogDate variables. You will also notice that I have appended a .txt extension to the filename. I have added these three lines of code to a script, along with a line that outputs the filename. You can see the results in the figure below.
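If the script might run more than once a day, the time can be folded into the name as well. The following is a minimal sketch, not a definitive implementation: the function name Get-LogFileName and its -IncludeTime switch are hypothetical, and it uses a custom format string instead of -Format d so that the name never contains slashes or colons.

```powershell
# Hypothetical helper: builds a log file name from a root name and a date.
function Get-LogFileName {
    param(
        [string]$RootName = 'Log',      # placeholder; use your script's name
        [datetime]$Date = (Get-Date),   # defaults to the current date and time
        [switch]$IncludeTime            # add HHmmss for scripts run several times a day
    )
    # Custom format strings avoid the "/" and ":" characters that
    # culture-specific patterns can contain and that file names cannot.
    $format = if ($IncludeTime) { 'yyyyMMdd_HHmmss' } else { 'yyyyMMdd' }
    $stamp = $Date.ToString($format, [cultureinfo]::InvariantCulture)
    return '{0}_{1}.txt' -f $RootName, $stamp
}

$FileName = Get-LogFileName -RootName 'Log' -IncludeTime

# The same timestamp idea can be used inside the log itself.
$entry = 'Run started ' + (Get-Date -Format s)
# Add-Content -Path $FileName -Value $entry   # uncomment to write the entry
```

Names built this way also sort chronologically when sorted alphabetically.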

Deleting old logs

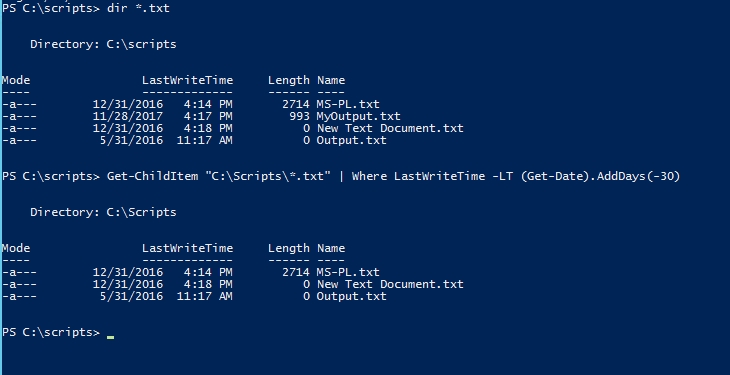

As you can see, it is relatively easy to create log files whose filenames are based on the date, but what about deleting old log files? If your script is running on a regular basis anyway, then you can add a few lines of code to delete old log files. Let’s assume, for example, that your log files reside in a folder named C:\Logs and that you want to delete those log files after 30 days. You could identify the old log files by using this command:

Get-ChildItem "C:\Logs\*.txt" | Where LastWriteTime -LT (Get-Date).AddDays(-30)

The Get-ChildItem portion of the command retrieves the contents of a particular file folder. In this case, it is retrieving the .TXT files in the C:\Logs folder. The Where LastWriteTime portion of the command instructs PowerShell to filter the list of files based on when each file was last modified. For this situation, we are using a date filter. The Get-Date cmdlet retrieves the current date and time. AddDays is normally used to calculate a date in the future, but since I specified a negative number (-30), the command is looking at a day in the past. In other words, the command returns a list of the .TXT files in the C:\Logs folder that were last written more than 30 days ago (the -LT operator means “less than,” so it matches files whose last write time is earlier than the specified date).

If you look at the figure above, you can see that I have used the Dir command to retrieve a list of all of the text files in the current folder. I then used the command listed above to show which of these text files are more than 30 days old. If I wanted to automate the deletion of these files, I could pipe the results to the Remove-Item cmdlet.
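Piping the filtered files to Remove-Item might look something like the sketch below. The function name Remove-OldLog and its parameters are hypothetical. The defaults simply mirror the C:\Logs folder and the 30-day cutoff used in this example. The function supports -WhatIf, so the deletion can be previewed before anything is actually removed.

```powershell
# Hypothetical helper: deletes log files that were last written more than
# $Days days ago. Supports -WhatIf and -Confirm through ShouldProcess.
function Remove-OldLog {
    [CmdletBinding(SupportsShouldProcess = $true)]
    param(
        [string]$Path = 'C:\Logs',    # folder from the example above
        [string]$Filter = '*.txt',    # only touch the log files
        [int]$Days = 30               # retention period in days
    )
    # Anything last written before this moment counts as old.
    $cutoff = (Get-Date).AddDays(-$Days)

    Get-ChildItem -Path $Path -Filter $Filter -File |
        Where-Object { $_.LastWriteTime -lt $cutoff } |
        Remove-Item    # honors -WhatIf passed to Remove-OldLog
}

# Preview the deletion first; drop -WhatIf once the list looks right.
if (Test-Path -Path 'C:\Logs') {
    Remove-OldLog -Path 'C:\Logs' -Days 30 -WhatIf
}
```

Running the preview first is a cheap safeguard, because a wrong path or filter would otherwise delete files that were never meant to be logs.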

Staying organized

A big part of PowerShell script logging is creating log files in an organized way, and deleting old log files that are no longer needed. Fortunately, PowerShell makes it very easy to do both. In fact, you may have even noticed that when I identified the old log files, the command that I used was based on the file’s LastWriteTime attribute, not the filename. This method will work, even if the filename was not based on the creation date.

My Windows 10 system does NOT like the slashes in the date (MM/dd/yyyy) when I try to create a file based on ‘-Format d’. I prefer to use something like

$LogDate = get-date -Format yyyyMMdd

which would set $LogDate to 20180213 – this would create files where an alphabetical sorting on the names would also be a chronological sort.

Good article.

My preferred approach is to build a logger as a filter so that I can pipe stuff through it if needed:

Filter Do-Log {(Get-Date).ToString("yyyyMMdd_hhmmss_fff") + ": " + $_ | Tee-Object -FilePath $logFile -Append}

(where $logFile is a global variable set at the beginning of the script).

Usage:

"Some stuff to be logged" | Do-Log

Hey Piotr, that’s a cool idea! Thanks for sharing.

Piotr.. Yes thank you for sharing.

I added the | Out-Null in case you do not want the string to show on the screen:

Filter Do-Log {(Get-Date).ToString("yyyyMMdd_hhmmss_fff") + ": " + $_ | Tee-Object -FilePath $logFile -Append | Out-Null}

I get this error. How come?

Do-Log : The term ‘Do-Log’ is not recognized as the name of a cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

At C:\Users\seng\Desktop\teams_script\TeamsChecker.ps1:71 char:20

+ StartProcess | Do-Log {(Get-Date).ToString(“yyyyMMdd_hhmmss_fff”) …

+ ~~~~~~

+ CategoryInfo : ObjectNotFound: (Do-Log:String) [], CommandNotFoundException

+ FullyQualifiedErrorId : CommandNotFoundException
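Judging only from the error text, line 71 of that script appears to put the filter's body after the name Do-Log instead of defining the filter first, so PowerShell has no command called Do-Log when the line runs. A minimal sketch of the order that should work is shown below. The log path is a placeholder, and the sketch uses HH (24-hour clock) where the original comment used hh, which is a deliberate change so that afternoon entries are unambiguous.

```powershell
# Assumption: $logFile is set before the first call. This path is a placeholder.
$logFile = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'Do-Log-example.log'

# 1. Define the filter first. Until this definition has run, PowerShell has
#    no command named Do-Log and reports CommandNotFoundException.
filter Do-Log {
    (Get-Date).ToString('yyyyMMdd_HHmmss_fff') + ': ' + $_ |
        Tee-Object -FilePath $logFile -Append
}

# 2. Then pipe into it by name only, with no script block after the name.
'Some stuff to be logged' | Do-Log
```

In a script file, the filter definition has to appear above the first line that calls it, because PowerShell runs scripts from top to bottom.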