If you would like to read the other parts of this article series, please go to:

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 1)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 2)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 3)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 4)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 5)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 6)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 7)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 8)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 9)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 10)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 11)

- Planning, Deploying, and Testing an Exchange 2010 Site-Resilient Solution sized for a Medium Organization (Part 13)

Introduction

In Part 11 of this multi-part article, we simulated a Client Access server failure and saw how Exchange clients were affected by this type of failover. Then we initiated a database switchover of all databases on EX01 to a server in the failover datacenter and observed how this affected the Exchange clients.

In this Part 12, I’ll simulate a datacenter failure and take you through the steps necessary to activate the Exchange services in the failover datacenter. We’ll also look at how a datacenter switchover affects our Exchange clients.

Simulating a Complete Site Failure

Let’s begin with the easy part, which is failing the primary datacenter. Since all the servers in our test environment are Hyper-V based virtual machines, I can simulate the datacenter failure by turning off all the servers in the primary datacenter. Before we take down the primary datacenter, though, let’s have a quick look at the servers we have in each datacenter.

We have the following servers in the primary datacenter:

- DC01: The domain controller (yes, there should be at least two in a production environment)

- EX01: An Exchange 2010 multi-role server that participates in the stretched DAG

- EX03: An Exchange 2010 multi-role server that participates in the stretched DAG

- FS01: A file server that also acts as the primary witness server for the stretched DAG

In the failover datacenter:

- DC02: The domain controller (yes, there should be at least two in a production environment)

- EX02: An Exchange 2010 multi-role server that participates in the stretched DAG

- EX04: An Exchange 2010 multi-role server that participates in the stretched DAG

- FS02: A file server that also acts as the alternate witness server for the stretched DAG

As you may also recall, we have 12 databases in the DAG, and the active copies are distributed across EX01 and EX03 in the primary datacenter, as shown in Figure 1.

Figure 1: Databases in our DAG

We have a dedicated load balancing solution in each datacenter. The load balancer in the primary datacenter balances Client Access and inbound SMTP traffic across servers “EX01” and “EX03”. The load balancer in the failover datacenter balances the same traffic across servers “EX02” and “EX04”.

To fail the primary datacenter, I simply select all the servers in the primary datacenter in Hyper-V Manager and right-click to bring up the context menu. Here, I select “Turn Off”, and after a few seconds our primary datacenter is totally dead.

Figure 2: Turning off virtual machines in the primary datacenter

To simulate a failure of the load balancer in the primary datacenter, I’ll disable all the Exchange-related virtual services (Figure 3).

Figure 3: Disabling the virtual services on the load balancer in the primary datacenter

Okay, so what happens now? Outlook clients are disconnected, and the other Exchange services become inaccessible to end users.

Figure 4: Outlook clients disconnected

From the Exchange administrator’s perspective, the databases go into the status “Service Down” for servers “EX01” and “EX03” and into the status “Disconnected and Healthy” for servers “EX02” and “EX04”. They will not be automatically activated on “EX02” and “EX04” in the failover datacenter. Why is that? Two of the four DAG member servers are down in the primary datacenter, and so is the witness server. The two DAG member servers in the failover datacenter therefore hold only two of the five quorum votes and cannot achieve quorum.

Figure 5: All databases are dismounted because quorum cannot be achieved in the failover datacenter
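
The quorum arithmetic behind this outage can be sketched in a few lines of Python. This is a simplified model and not an Exchange API. It assumes the Exchange 2010 rule that a DAG with an even number of members uses the Node and File Share Majority quorum model, where the witness adds one vote. A DAG with an odd number of members uses Node Majority. The function names are hypothetical.

```python
def total_votes(member_count: int) -> int:
    """Total quorum votes in a simplified model of an Exchange 2010 DAG.

    Assumption: an even number of members adds one file share witness vote
    (Node and File Share Majority); an odd number uses Node Majority.
    """
    witness_vote = 1 if member_count % 2 == 0 else 0
    return member_count + witness_vote


def has_quorum(member_count: int, votes_online: int) -> bool:
    """The surviving voters keep quorum only with a strict majority."""
    return votes_online > total_votes(member_count) // 2


# DAG01 has four members (EX01 to EX04) plus the witness on FS01.
print(total_votes(4))    # 5
print(has_quorum(4, 2))  # False: only EX02 and EX04 are online
print(has_quorum(4, 3))  # True: a hypothetical third surviving voter
```

With only EX02 and EX04 online, the failover datacenter holds two of five votes. That is not a majority, which is why the databases stay dismounted until the DAG is restored.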

Preparing for Restoring Service in the Failover Datacenter

So how do we restore service in the failover datacenter? Well, the procedure is actually pretty straightforward. The very first thing we need to do is make sure the DAG member servers in the primary datacenter (EX01 and EX03) are marked as stopped in the DAG. This is achieved using the Stop-DatabaseAvailabilityGroup cmdlet. It can be run against each individual DAG member server (using the “MailboxServer” parameter) or against all DAG member servers in the primary datacenter (using the “ActiveDirectorySite” parameter).

Now, because all servers in the primary datacenter are down, we obviously cannot run the cmdlet there. However, suppose we were dealing with a partially failed primary datacenter where both the domain controllers and the DAG member servers were still available to some degree. In that case, we would need to run the following command in the primary datacenter:

Stop-DatabaseAvailabilityGroup DAG01 -ActiveDirectorySite Datacenter-1

The above command should be used if both the DAG member servers and the domain controllers are available. You could also stop each server individually using Stop-DatabaseAvailabilityGroup DAG01 -MailboxServer EX01 and Stop-DatabaseAvailabilityGroup DAG01 -MailboxServer EX03.

If only the domain controllers are available in the primary datacenter, we would need to run the cmdlet with the “ConfigurationOnly” parameter:

Stop-DatabaseAvailabilityGroup DAG01 -ActiveDirectorySite Datacenter-1 -ConfigurationOnly

When all servers in the primary datacenter are down (which is the case here), we can skip the above step and move on to the next one, which is to run the same cmdlet in the failover datacenter (again we use the “ConfigurationOnly” parameter since the servers in the primary datacenter aren’t available):

Stop-DatabaseAvailabilityGroup DAG01 -ActiveDirectorySite Datacenter-1 -ConfigurationOnly

Figure 6: Stopping the DAG member Servers in the primary datacenter

After we have run the above command, we can use the following command to verify that the DAG member servers in the primary datacenter are in a stopped state:

Get-DatabaseAvailabilityGroup DAG01 | fl Name,StoppedMailboxServers,StartedMailboxServers

Figure 7: Verifying the DAG member servers in the primary datacenter are marked as stopped

That was the preparation step we needed to go through before activating the DAG member servers in the failover datacenter. It’s this simple because the DAG is running in Datacenter Activation Coordination (DAC) mode. If DAC mode hadn’t been enabled on the DAG, we would also have had to go through a set of Windows Failover Cluster-specific commands. These are, however, outside the scope of this multi-part article.
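
The preparation step comes down to two decisions: which variant of the Stop-DatabaseAvailabilityGroup command to run, and where to run it. The following Python sketch summarizes them. The helper name and its parameters are hypothetical. The sketch follows the cases described in this section, including the assumption that the -ConfigurationOnly step in the failover datacenter always follows. It only builds the command strings you would then run in the Exchange Management Shell. It does not execute anything.

```python
def stop_dag_plan(dag, site, dcs_available, members_available):
    """Return (where, command) pairs for marking a failed site's DAG members as stopped.

    Hypothetical helper encoding the cases described in this article; it only
    builds command strings and does not run them.
    """
    base = f"Stop-DatabaseAvailabilityGroup {dag} -ActiveDirectorySite {site}"
    plan = []
    if dcs_available and members_available:
        # Partially failed site: DCs and DAG members are still reachable.
        plan.append(("primary", base))
    elif dcs_available:
        # Only the DCs survive: update the Active Directory configuration only.
        plan.append(("primary", base + " -ConfigurationOnly"))
    elif members_available:
        raise ValueError("DAG members without DCs is not covered in this article")
    # In every covered case, record the stopped servers from the failover datacenter.
    plan.append(("failover", base + " -ConfigurationOnly"))
    return plan


# Our scenario: everything in Datacenter-1 is down.
for where, command in stop_dag_plan("DAG01", "Datacenter-1", False, False):
    print(f"{where}: {command}")
# failover: Stop-DatabaseAvailabilityGroup DAG01 -ActiveDirectorySite Datacenter-1 -ConfigurationOnly
```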

Restoring Service in the Failover Datacenter

We’re now ready to restore service in the failover datacenter.

The first thing we must do to reach this goal is stop the Cluster service on each DAG member server in the failover datacenter. We can do so using the “Services” snap-in or the Stop-Service cmdlet. In this article, we’ll use the cmdlet:

Stop-Service ClusSvc

Figure 8: Stopping the Cluster Service on each DAG Member Server in the failover datacenter

With the Cluster service stopped, we can restore the DAG using the Restore-DatabaseAvailabilityGroup cmdlet. To do so, run the following command in the failover datacenter:

Restore-DatabaseAvailabilityGroup DAG01 -ActiveDirectorySite Datacenter-2

Figure 9: Restoring the DAG in the failover datacenter

The DAG will now be restored in the failover datacenter. More specifically, the DAG quorum mode will be updated (Figure 10) and the DAG member servers in the primary datacenter will be evicted from the DAG (Figure 11).

Figure 10: DAG Quorum mode is updated

Figure 11: DAG Member Servers in primary datacenter are evicted

Finally, the DAG is updated to point to the alternate witness server so that the failover datacenter can achieve quorum.

Figure 12: DAG is re-pointed to the file share witness share on the alternate witness server
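
The following conceptual Python model makes the three effects of the restore concrete. DagModel, quorum_mode and restore_dag are hypothetical names that do not correspond to any Exchange API. The model evicts the members marked as stopped, swaps in the alternate witness, and recomputes the quorum mode using the assumed rule: Node and File Share Majority for an even number of members, Node Majority for an odd number.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DagModel:
    """Hypothetical, simplified view of a DAG's cluster configuration."""
    members: List[str]
    stopped: List[str]
    witness: str
    alternate_witness: str


def quorum_mode(member_count: int) -> str:
    # Even member count: the file share witness supplies the tie-breaking vote.
    if member_count % 2 == 0:
        return "Node and File Share Majority"
    return "Node Majority"


def restore_dag(dag: DagModel) -> str:
    """Mimic the effects of the restore shown in Figures 10 to 12."""
    # Evict the members marked as stopped (EX01 and EX03) from the cluster.
    dag.members = [m for m in dag.members if m not in dag.stopped]
    # Re-point the DAG to the alternate witness server in the failover datacenter.
    dag.witness = dag.alternate_witness
    # Recalculate the quorum mode for the remaining members.
    return quorum_mode(len(dag.members))


dag01 = DagModel(["EX01", "EX02", "EX03", "EX04"], ["EX01", "EX03"], "FS01", "FS02")
mode = restore_dag(dag01)
print(dag01.members, dag01.witness, mode)
# ['EX02', 'EX04'] FS02 Node and File Share Majority
```

After the restore, the model counts two members plus the FS02 witness, which gives three votes. Two online members are therefore a majority.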

The mailbox databases will now be activated on server EX02, as the database copies on this server have a lower activation preference number (that is, a higher preference) than the copies on EX04. If one or more of the database copies on EX02 are in an unhealthy state, Exchange will instead try to activate them on EX04. As can be seen in Figure 13, ten databases were activated on EX02 and two were activated on EX04.

Figure 13: Mailbox databases are activated in the Failover Datacenter
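
The following Python sketch models the selection rule described above. The preference numbers in the example are hypothetical, because the article doesn’t list the actual values. The model is also a deliberate simplification of Exchange 2010’s best copy selection, which takes factors such as copy queue length, replay queue length and content index state into account as well as activation preference.

```python
from typing import List, Optional, Tuple

# (server, activation preference number, copy is healthy)
Copy = Tuple[str, int, bool]


def pick_activation_target(copies: List[Copy]) -> Optional[str]:
    """Return the server whose healthy copy has the lowest preference number.

    Simplified model only: real best copy selection also weighs queue lengths
    and content index state.
    """
    healthy = [c for c in copies if c[2]]
    if not healthy:
        return None  # no healthy copy, so nothing can be activated
    return min(healthy, key=lambda c: c[1])[0]


# Hypothetical preference numbers for one database's copies in the failover site.
print(pick_activation_target([("EX02", 3, True), ("EX04", 4, True)]))   # EX02
print(pick_activation_target([("EX02", 3, False), ("EX04", 4, True)]))  # EX04
```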

If we open the Windows Failover Cluster (WFC) console and expand Cluster Core Resources, we can see that these resources are now online via the IP address on the subnet associated with the failover datacenter. The witness server is now FS02, the file server in the failover datacenter that was configured as the alternate witness server.

Figure 14: Cluster core resources in the Windows Failover Cluster console

Since the two DAG member servers in the primary datacenter were evicted as part of the DAG restore, only two DAG member servers are now listed in the WFC console.

Figure 15: EX02 and EX04 listed in the WFC Console

Pointing DNS Records to the CAS Array in the Failover Datacenter

It’s time to update the Exchange-specific DNS records so that they point to the CAS array in the failover datacenter instead of the CAS array in the primary datacenter. The following internal DNS records currently point to the CAS array in the primary datacenter:

- mail.exchangeonline.dk (endpoint used by Exchange clients and services)

- smtp.exchangeonline.dk (used for inbound SMTP)

- outlook-1.exchangeonline.dk (FQDN configured on the CAS array object in the primary datacenter)

We need to update the “mail”, “smtp” and “outlook-1” DNS records so that they point to the virtual services on the load balancer in the failover datacenter. In this example, we must change them from 192.168.2.190 to 192.168.6.190.

Figure 16: Virtual services on Load Balancer in the Failover Datacenter

To update the internal DNS records in Active Directory, launch the DNS Manager console and update each of the above listed records so they point to 192.168.6.190.

Figure 17: Updating internal DNS records

The following external DNS records are currently pointing to the firewall in front of the primary datacenter:

- mail.exchangeonline.dk (endpoint used by Exchange clients and services)

- autodiscover.exchangeonline.dk (used for automatic Outlook 2007+ and Exchange ActiveSync device profile creation, as well as for Outlook 2007+ features that rely on the Availability service)

- smtp.exchangeonline.dk (used for inbound SMTP)

Since organizations use different external DNS providers, I won’t go through the steps for updating these records.

So now that the internal and external DNS records have been updated, end users will once again be able to connect to their mailboxes using the various Exchange clients, right? Well, that really depends. For the external DNS records, there will be a delay before other DNS servers pick up the change, since they keep serving the cached records until their time-to-live (TTL) expires.

For internal DNS records in Active Directory, it depends on the Active Directory topology used within the organization. For instance, end-user machines may be located in a different Active Directory site than the Exchange 2010 servers. In that case, it can take up to 180 minutes for the change to reach them, as this is the default replication interval between Active Directory sites (Figure 18).

Note:

If you have a replication interval of 180 minutes, it obviously makes sense to force replication between the respective Active Directory sites.

Figure 18: Default Replication interval for Windows 2008 Active Directory Sites

In addition to the Active Directory site replication interval, you should also factor in DNS client cache delays. If you ping any of the DNS records from a client machine right after they were updated, you will still get the old IP address, as shown in Figure 19.

Figure 19: Pinging the updated DNS records from a client machine still resolves to the old IP address
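
The two delays above add up. The following Python sketch gives a rough estimate of how long a client might wait. It assumes the change has to cross one site link on the default 180-minute interval. It also assumes the client cached the old record just before the change, with a 60-minute record TTL. Treat that TTL as an assumption and check the value on your own zones. The function name is hypothetical. The sketch ignores intra-site replication and DNS server polling, and it treats forced replication and cache flushing as instantaneous.

```python
def estimated_delay_minutes(site_replication_interval=180, record_ttl=60,
                            force_replication=False, flush_client_cache=False):
    """Rough upper bound (minutes) before a client sees an updated internal record.

    Assumptions: one AD site link with the given replication interval, and an
    old record cached by the client just before the change with the given TTL.
    """
    replication = 0 if force_replication else site_replication_interval
    client_cache = 0 if flush_client_cache else record_ttl
    return replication + client_cache


print(estimated_delay_minutes())                        # 240
print(estimated_delay_minutes(force_replication=True))  # 60
```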

If you want to force the DNS updates to a client machine manually, you can do so by clearing the DNS cache using the following command:

ipconfig /flushdns

Figure 20: Flushing the client DNS cache
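
After flushing the cache, you can check from a client which internal records still resolve to the old address. The following Python sketch does this. The record names and the 192.168.6.190 address come from this article, while the stale_records helper is hypothetical. By default it uses the operating system resolver via socket.gethostbyname, so the result should reflect what the client itself sees, including its DNS cache. The resolve parameter lets you substitute another lookup function.

```python
import socket

# Internal records from this article and the failover load balancer's address.
RECORDS = [
    "mail.exchangeonline.dk",
    "smtp.exchangeonline.dk",
    "outlook-1.exchangeonline.dk",
]
EXPECTED_IP = "192.168.6.190"


def stale_records(names, expected_ip, resolve=socket.gethostbyname):
    """Map each name that does not yet resolve to expected_ip to what it returned.

    None means the name could not be resolved at all.
    """
    stale = {}
    for name in names:
        try:
            address = resolve(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            address = None
        if address != expected_ip:
            stale[name] = address
    return stale
```

On a client, print(stale_records(RECORDS, EXPECTED_IP)) shows an empty dictionary once every record points to the failover datacenter.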

When the DNS updates have made it to the client machines, any open Outlook instances will pick up the change and reconnect to the mailbox via the CAS array in the failover datacenter. Outlook clients launched after the DNS update has reached the client machine will also connect to the mailbox without issues.

Outlook 2007 and Outlook 2010 clients will have OWA, ECP, EWS, OAB, OOF and availability service URLs updated so they now point to “failover.exchangeonline.dk”.

Figure 21: Exchange RPC (MAPI) URLs

Figure 22: Exchange HTTP (RPC over HTTP) URLs

The web access URL in the Outlook 2010 Backstage view will also be updated to point to “failover.exchangeonline.dk”.

Figure 23: Web Access URL in Outlook 2010

Note:

During my testing, Outlook MAPI clients were prompted for credentials when trying to connect to their mailbox in the failover datacenter. However, Outlook Anywhere clients weren’t prompted for credentials. I’ve seen this behaviour in several environments.

Figure 24: Open Outlook clients pick up the DNS update automatically and reconnect to the mailbox

Some of you might wonder how Outlook can connect to the CAS array in the failover datacenter, which has the outlook-2.exchangeonline.dk FQDN associated with it. After all, Outlook is using the outlook-1.exchangeonline.dk FQDN, which is associated with the CAS array in the primary datacenter. There’s no magic involved here. The outlook-1 DNS record now points to the load balancer in the failover datacenter, and the Exchange 2010 CAS array object isn’t very strict when it comes to this FQDN.

Users should also be able to connect to OWA and ECP using mail.exchangeonline.dk, which now points to the CAS array in the failover datacenter. They will not get any certificate warnings, since the common name on the certificate is the same in both datacenters.

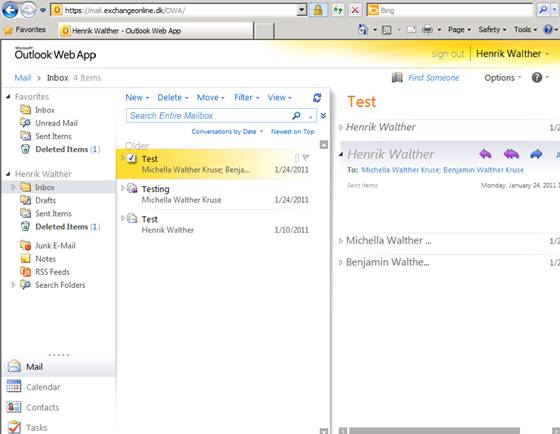

Figure 25: Accessing OWA using mail.exchangeonline.dk

When logged into OWA (Figure 26), we can verify that we’re connected to the mailbox via one of the servers in the CAS array in the failover datacenter. We can do so by opening the “About” page in OWA.

Figure 26: Connected to OWA via server in the CAS array in the failover datacenter

On the “About” page, we can see that we are connected to OWA using EX02, which in fact is a server in the failover datacenter.

Figure 27: Connected to OWA via EX02 in the failover datacenter

Exchange ActiveSync clients that support Autodiscover will be updated to point to “failover.exchangeonline.dk” and start synchronizing using this connection endpoint.

Inbound SMTP traffic will also go through the load balancer in the failover datacenter.

We have reached the end of Part 12, but it’s not over yet. The last part in this multi-part article will be released soon here on MSExchange.org. In that article, I’ll show you how to switch back to the primary datacenter once it’s available again.
