When Microsoft brought Cortana to Windows Phone several years ago, it quickly became the source of endless amusement. Sure, Cortana can do plenty of really useful things, but I quickly discovered that Cortana had a bit of an attitude and would often answer questions in a sarcastic or humorous way.

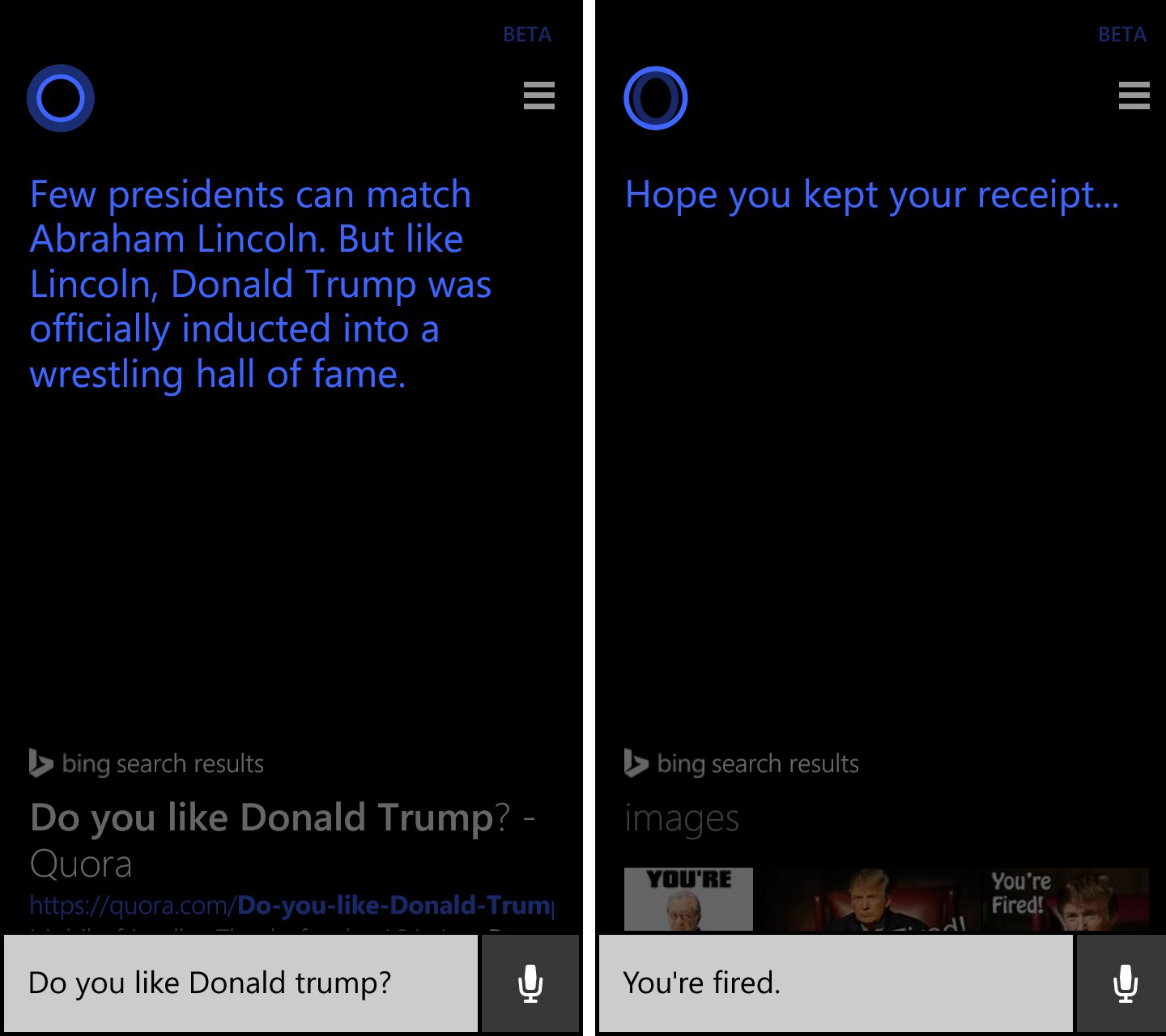

Admittedly, I spent way too much time playing with Cortana, trying to discover what it was capable of and what questions I could ask that would result in a funny answer. You can see a couple of examples below.

Apparently, I wasn’t the only one who was doing this. Websites such as this one began compiling lists of questions to try asking Cortana.

Of course, Microsoft isn’t the only company to add a personal digital assistant to its smartphones. Google has Google Now, Apple has Siri, and even Samsung is getting in on the trend with its new Bixby assistant.

Siri, Cortana, and Google Now are probably the best-known examples of artificial intelligence that have been integrated into a consumer electronic device, but these personal digital assistants were really just the beginning. Fast-forward a few years, and consumer artificial intelligence (AI) is everywhere. Devices such as Amazon Echo and Google Home have brought personal digital assistants into our living rooms.

In the back of my mind, I have often wondered if there was a way for consumer AI technology to go horribly wrong. No, I’m not talking about those tin-foil-hat conspiracy theories that the feds are using smart devices to eavesdrop on us. I’m talking about smart devices that aren’t quite smart enough to correctly interpret our intentions, resulting in confusion and maybe even utter chaos.

Certainly the potential for chaos is there. When people speak to one another, words are occasionally misunderstood, so it’s easy to see how an AI device could also misinterpret a spoken command. Microsoft CEO Satya Nadella found that out the hard way.

Cortana chaos

Nadella experienced an epic fail when he tried performing a natural language query using Cortana during a live demo in front of a huge crowd. The Microsoft CEO told Cortana to “show me my most at-risk opportunities.” Much to his dismay, Cortana interpreted the command as “Show me to buy milk at this opportunity.” After multiple attempts at performing the query, a clearly embarrassed Nadella eventually had no choice but to acknowledge that the demo wasn’t going to work.

Even if an AI device understands every word perfectly, however, the device has to make sense of the command given to it, so that it can take the appropriate course of action. In this regard, AI is sometimes lacking. Google, for instance, uses one flavor of AI to provide search suggestions as you type. Once in a while, however, Google’s search suggestions go completely off the rails. So if AI can screw up something as simple as a search suggestion, just imagine what it could do with a more complex command.

Dirty mind

As it turns out, AI-induced chaos can indeed become the order of the day. Consider, for example, what happened when a small child asked Amazon Alexa to play Digger Digger (at least I think that’s what the kid was saying). The result was the discovery that Alexa has quite a mouth on her. In this video, Alexa begins spewing a stream of porn-related search phrases that are as diverse as they are vulgar.

In a widely publicized incident, a 6-year-old child asked Alexa, “Can you play dollhouse with me and get me a dollhouse?” So what could possibly go wrong here? Well, Alexa placed an Amazon order for a dollhouse and for some cookies. When the child’s parents discovered what had happened, they put a security code in place to prevent Alexa from making any more unauthorized purchases. The family also donated the dollhouse to charity.

Now you would think that this would be the end of the story, but remember my original question? I wanted to know if consumer AI could cause chaos. An unexpected Amazon purchase is certainly an example of AI gone wrong, but I would hardly call it chaos. However, I think that what happened next definitely qualifies.

The story of the little girl who accidentally ordered a dollhouse was covered by the national media. Among the media outlets that covered the story was CW6 in San Diego. At the conclusion of the segment, one of the news anchors said, “I love the little girl saying, ‘Alexa ordered me a dollhouse.’ ” Unfortunately, “Alexa” is the activation phrase that makes the Alexa device listen for a command. In homes all over San Diego, Alexa heard the phrase “Alexa ordered me a dollhouse” spoken on the television and interpreted it as “Alexa, order me a dollhouse.” The end result was, you guessed it, mass ordering of dollhouses by unsuspecting CW6 viewers (or at least by their Alexas).

This brings up another point. AI-related chaos isn’t always the AI device’s fault. In the case of the news story on CW6, Alexa was controlled by something that it heard on television. Even before this incident, however, there had been documented examples of what I like to call “AI hijacking.”

Amazon isn’t the only company whose consumer AI product is designed to listen for an activation phrase. Microsoft, for example, has designed Cortana-enabled devices to listen for the phrase “Hey Cortana.” Similarly, Google Home devices listen for the phrase, “OK Google.”
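To make the idea concrete, here is a minimal sketch of how a naive activation-phrase check might behave. It is not how Alexa, Cortana, or Google Home actually work internally; it operates on already-transcribed text, and the names `WAKE_PHRASES`, `normalize`, and `extract_command` are hypothetical. The point is simply that nothing in the check knows who is speaking.

```python
import re

# Hypothetical list of activation phrases, taken from the examples in this article.
WAKE_PHRASES = ("alexa", "hey cortana", "ok google", "ok glass")


def normalize(transcript):
    """Lowercase the transcript, drop punctuation, and split it into words."""
    return re.sub(r"[^a-z0-9 ]+", " ", transcript.lower()).split()


def extract_command(transcript):
    """Return the words that follow an activation phrase, or None if none is heard.

    Assumption: the device treats whatever follows the phrase as a command,
    no matter who (or what) said it.
    """
    words = normalize(transcript)
    for phrase in WAKE_PHRASES:
        phrase_words = phrase.split()
        last_start = len(words) - len(phrase_words)
        for start in range(last_start + 1):
            end = start + len(phrase_words)
            if words[start:end] == phrase_words:
                return " ".join(words[end:])
    return None


# A sentence spoken on television triggers the check just as a direct command would.
print(extract_command("I love the little girl saying, 'Alexa ordered me a dollhouse.'"))
print(extract_command("The weather in San Diego is lovely today."))
```

In this sketch, a voice from the television and a voice from the sofa are indistinguishable, which is exactly the weakness the dollhouse story exposed.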

As the CW6 news story demonstrated, these simple activation phrases can be exploited. In fact, the exploitation of activation phrases was going on long before the Alexa incident.

In 2013 a writer for Esquire magazine set out to review the now-defunct Google Glass. It didn’t take the reviewer’s friends long to figure out that the device’s activation phrase was “OK, Glass,” and that Google Glass did not seem to care who actually said that phrase. The reviewer said that his friends would sneak up behind him and “shout inappropriate Google searches” in an effort to populate the browser’s history with embarrassing searches. I will leave the actual search phrases to your imagination (or you can check out the article for yourself).

No laughing matter

Consumer-grade, voice-controlled artificial intelligence devices are still relatively new. Like any other new technology, AI devices are bound to occasionally behave in an unexpected manner. My hope is that companies such as Google, Amazon, and Microsoft will eventually incorporate voice-print technology into their devices so that they are able to determine whether a command was issued by an authorized user.

In the meantime, the best defense against unauthorized or unexpected device usage is to take advantage of any security controls that are built into the device. Amazon Alexa, for instance, can be configured to require a code prior to making a purchase. Another way of protecting yourself is to simply unplug the device whenever you have company.
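As a rough illustration of why a purchase code helps, the sketch below gates an order behind a spoken confirmation code. This is an assumption-laden toy, not Amazon's implementation: the `PurchaseGate` class, the `handle_order` function, and the code `"4821"` are all made up for the example.

```python
import hmac


class PurchaseGate:
    """Hypothetical gate that holds the confirmation code set by the device owner."""

    def __init__(self, code):
        self._code = code

    def confirm(self, spoken_code):
        # compare_digest avoids leaking information through comparison timing.
        return hmac.compare_digest(self._code.encode(), spoken_code.encode())


def handle_order(item, gate, spoken_code=None):
    """Place the order only if the correct confirmation code was supplied."""
    if spoken_code is None or not gate.confirm(spoken_code):
        return f"Order for {item} blocked: confirmation code required."
    return f"Order placed: {item}."


gate = PurchaseGate("4821")  # made-up code for illustration
print(handle_order("dollhouse", gate))          # no code given, so the order is blocked
print(handle_order("dollhouse", gate, "4821"))  # correct code, so the order goes through
```

With a gate like this in place, a news anchor (or a 6-year-old) who says the activation phrase still cannot complete a purchase without also knowing the code.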

Photo credit: Universal Pictures