Building security into a new cloud application or service from its initial design, before you release it to internal or external users, is an excellent idea. However, the ability to log the activities of those resources and search them afterward may be equally important. Looking into your resources’ activities helps you improve your security posture, understand what happens behind the scenes during regular operations, and much more. This first article of our series on improving Azure security with Kusto Query Language (KQL) covers the steps required to build and secure a log analytics workspace.

Creating the log analytics workspace

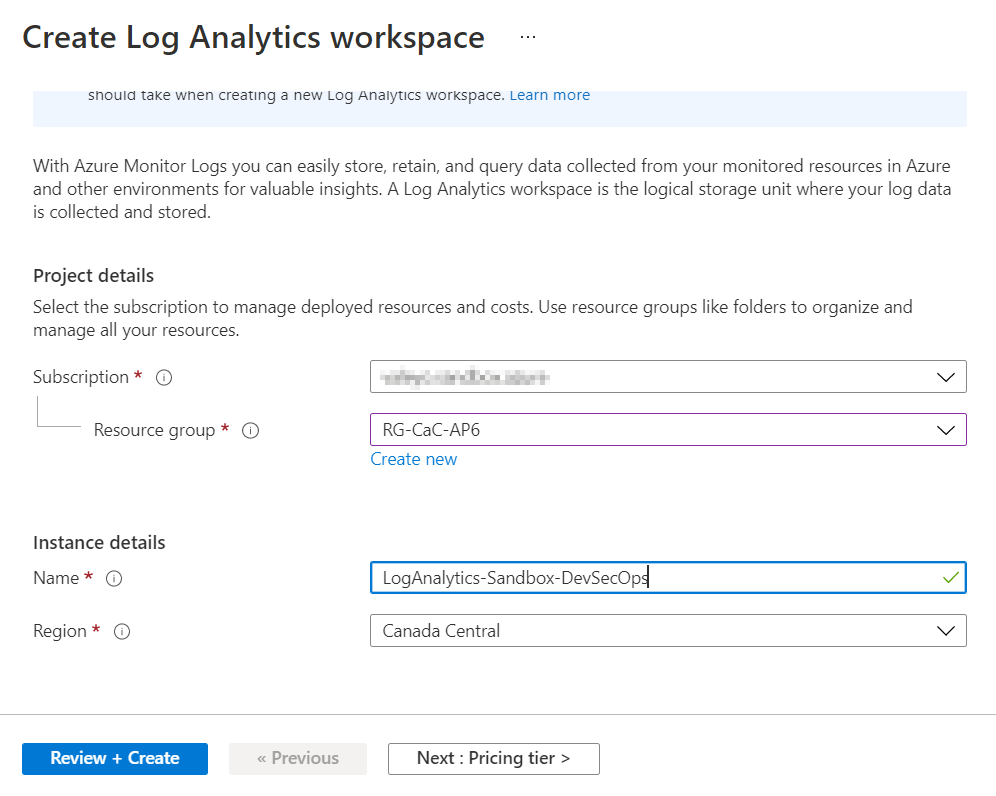

In this section, we focus on creating the log analytics workspace using the Azure Portal. We will also cover how to create the entire supporting infrastructure using PowerShell and Azure Resource Manager (ARM) templates.

You may be wondering about the nagging design questions: how many log analytics workspaces are required, and where should they be placed? We will discuss some possible scenarios and list the advantages and disadvantages of each. Stay tuned!

Creating a log analytics workspace is one of the simplest tasks you can accomplish, whether you use the Azure Portal, PowerShell, the Azure CLI, or ARM templates. In the Azure Portal, search for Log Analytics workspaces in the search bar, and on the new blade, click Create.

Throughout this article series, we use LogAnalytics-Sandbox-DevSecOps as the workspace name. On the Create Log Analytics workspace page, define the subscription, resource group, region, and name. Click Review + Create and wait for the provisioning process to complete. It is that simple!
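If you prefer to script this step, the same workspace can be described in an ARM template. The Python sketch below builds a minimal template as a dictionary and prints it as JSON. The helper name `build_workspace_template`, the `2022-10-01` API version, the `eastus` region, and the 30-day retention are assumptions to adjust for your environment; `PerGB2018` is the resource-level name of the pay-as-you-go pricing tier.

```python
import json


def build_workspace_template(name: str, location: str = "eastus") -> dict:
    """Return a minimal ARM template that deploys one Log Analytics workspace.

    Assumptions: apiVersion 2022-10-01 and the PerGB2018 (pay-as-you-go) SKU.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.OperationalInsights/workspaces",
                "apiVersion": "2022-10-01",
                "name": name,
                "location": location,
                "properties": {
                    "sku": {"name": "PerGB2018"},
                    "retentionInDays": 30,
                },
            }
        ],
    }


if __name__ == "__main__":
    template = build_workspace_template("LogAnalytics-Sandbox-DevSecOps")
    print(json.dumps(template, indent=2))
```

You could save the printed JSON to a file and deploy it with `az deployment group create --resource-group <resource-group> --template-file <file>.json`, or with the PowerShell cmdlet `New-AzResourceGroupDeployment`.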

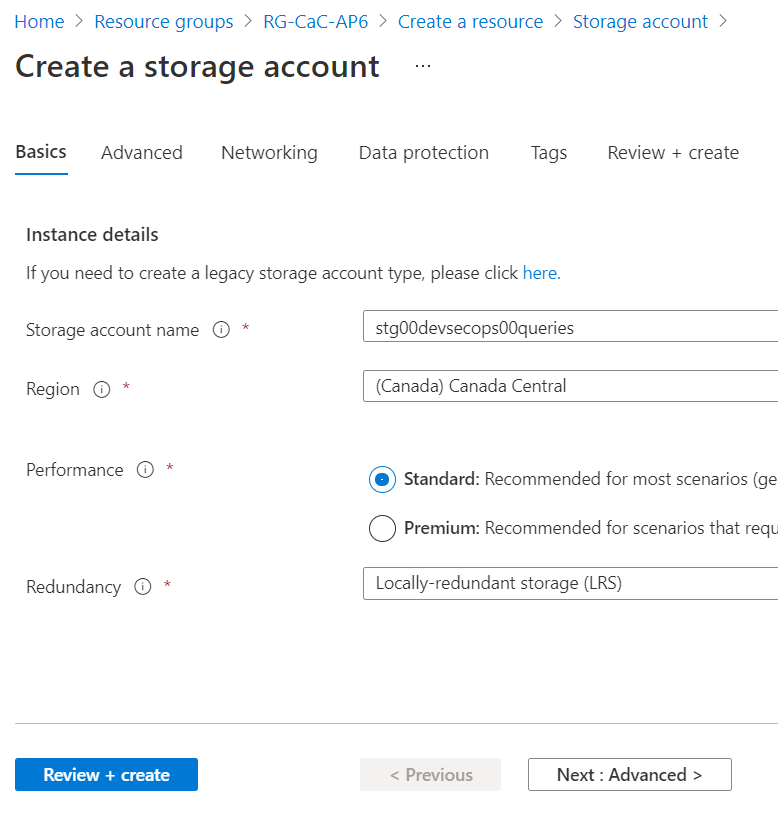

We will need some additional resources, starting with a storage account to store saved queries. In the search bar, look for Storage accounts and create a new one in the same resource group where we provisioned the log analytics workspace.
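Storage account names are stricter than most Azure resource names: 3 to 24 characters, lowercase letters and digits only, and globally unique. The small Python sketch below checks the naming rules before you try to create the account; the helper name is hypothetical, and global uniqueness can only be confirmed by Azure itself at creation time.

```python
import re

# Azure storage account names: 3-24 characters, lowercase letters and digits only.
# Global uniqueness cannot be checked offline; Azure enforces it at creation time.
_STORAGE_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Check the naming rules (not availability) for a storage account name."""
    return _STORAGE_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["stg00devsecops00queries", "Stg-DevSecOps", "ab"]:
        print(candidate, is_valid_storage_account_name(candidate))
```

The name used later in this article, stg00devsecops00queries, passes the check; the other two candidates fail because of uppercase letters and a hyphen, and because they are too short.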

Preparing the Azure log analytics workspace for prime time

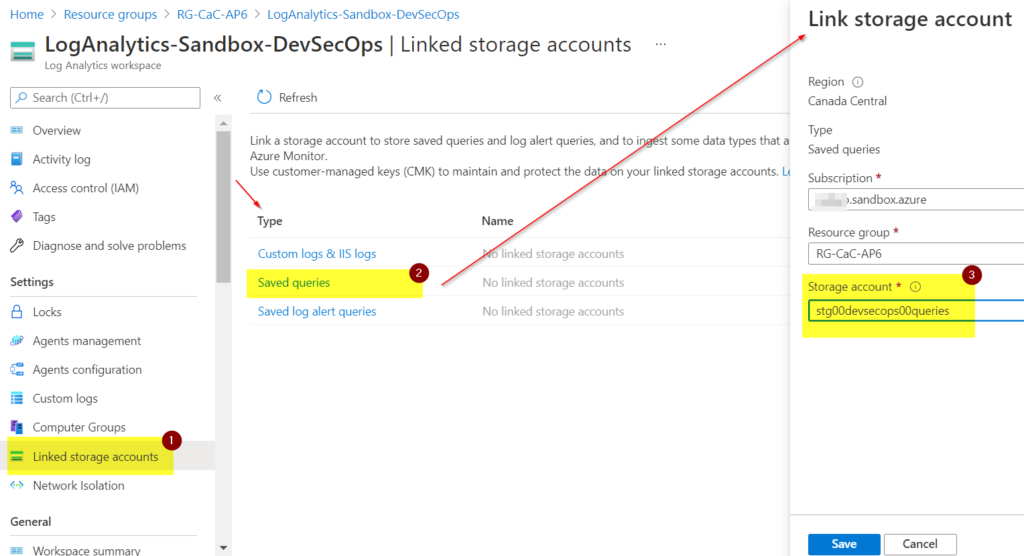

Now that both resources exist, our first configuration step is to associate the storage account that will hold all saved queries. In the log analytics workspace, click Linked storage accounts (Item 1), then click Saved queries (Item 2), and on the new blade, select the storage account that we created in the previous step (Item 3, stg00devsecops00queries).
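In an ARM template, this link is a child resource of the workspace of type `linkedStorageAccounts`, and the child name `Query` selects the saved-queries data source. The Python sketch below builds that resource; the `2020-08-01` API version is an assumption, the helper name is hypothetical, and the subscription and resource group in the storage account ID are placeholders.

```python
import json


def build_saved_queries_link(workspace_name: str, storage_account_id: str) -> dict:
    """Return an ARM resource linking a storage account to a workspace for saved queries.

    The child name "Query" selects the saved-queries data source type.
    Assumption: apiVersion 2020-08-01.
    """
    return {
        "type": "Microsoft.OperationalInsights/workspaces/linkedStorageAccounts",
        "apiVersion": "2020-08-01",
        "name": f"{workspace_name}/Query",
        "properties": {"storageAccountIds": [storage_account_id]},
    }


if __name__ == "__main__":
    # Placeholder resource ID: replace <subscription-id> and <resource-group>.
    storage_id = (
        "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
        "/providers/Microsoft.Storage/storageAccounts/stg00devsecops00queries"
    )
    link = build_saved_queries_link("LogAnalytics-Sandbox-DevSecOps", storage_id)
    print(json.dumps(link, indent=2))
```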

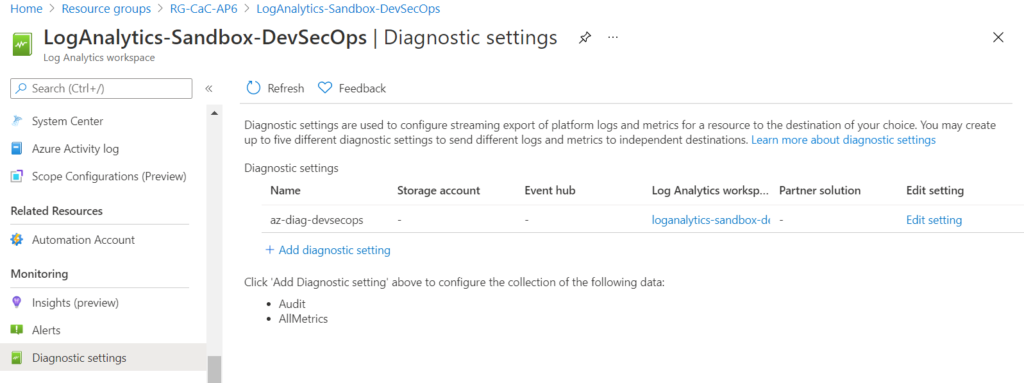

We can audit activity in the log analytics workspace, such as the queries run against it, and since this workspace is dedicated to our DevSecOps team, we should make sure auditing is configured before we start sending logs to it.

In the log analytics workspace, click Diagnostic settings, then Add diagnostic setting. Select the Audit category, choose Send to Log Analytics workspace as the destination, and select this same workspace. Throughout this article series, we use az-diag-devsecops as the diagnostic setting name on every resource for consistency.
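The same diagnostic setting can be expressed as an ARM extension resource whose `scope` points at the workspace. The Python sketch below builds it with the az-diag-devsecops name used in this series; the `2021-05-01-preview` API version and the helper name are assumptions, so check them against the current template reference before deploying.

```python
import json


def build_audit_diagnostic_setting(workspace_name: str,
                                   setting_name: str = "az-diag-devsecops") -> dict:
    """Return an ARM diagnostic setting that sends a workspace's Audit logs to itself.

    Assumptions: apiVersion 2021-05-01-preview and the "Audit" log category.
    """
    return {
        "type": "Microsoft.Insights/diagnosticSettings",
        "apiVersion": "2021-05-01-preview",
        "name": setting_name,
        # Extension resource: "scope" attaches the setting to the workspace.
        "scope": f"Microsoft.OperationalInsights/workspaces/{workspace_name}",
        "properties": {
            # ARM expression that resolves to the same workspace's resource ID.
            "workspaceId": f"[resourceId('Microsoft.OperationalInsights/workspaces', '{workspace_name}')]",
            "logs": [{"category": "Audit", "enabled": True}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_audit_diagnostic_setting("LogAnalytics-Sandbox-DevSecOps"), indent=2))
```

If you deploy this resource in the same template as the workspace, add a `dependsOn` entry for the workspace so the setting is created after it.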

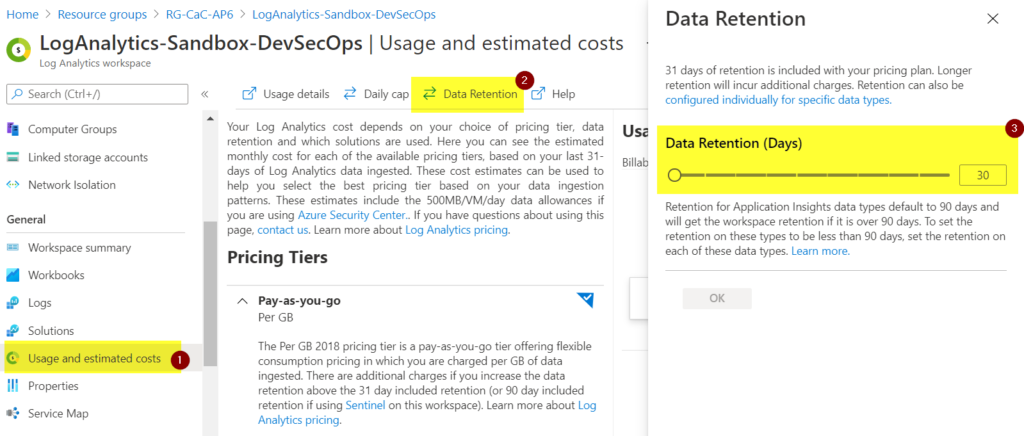

A recurring design question when using log analytics is how long to keep the data. With the pay-as-you-go pricing tier (the default), the first 31 days of retention are included in the price, and you can increase retention to meet your security requirements.

Want to increase the retention period? That’s easy. In the log analytics workspace, click Usage and estimated costs (Item 1), click Data Retention (Item 2), and then define how long you want to keep the data (Item 3). Click OK to confirm.
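In an ARM template, the retention period is the workspace's `retentionInDays` property. The Python sketch below updates that property on a copy of a workspace resource and computes how many of the retained days fall outside the included 31 days. The helper names are hypothetical, and the sketch assumes the 31-day included period described above; some offers, such as Microsoft Sentinel, include a longer period.

```python
import copy

# Retention included with pay-as-you-go, as described in this article.
INCLUDED_RETENTION_DAYS = 31


def billable_retention_days(retention_days: int) -> int:
    """Return how many retention days exceed the included period."""
    if retention_days < 0:
        raise ValueError("retention_days must be non-negative")
    return max(0, retention_days - INCLUDED_RETENTION_DAYS)


def set_retention(workspace_resource: dict, retention_days: int) -> dict:
    """Return a copy of an ARM workspace resource with a new retentionInDays value."""
    updated = copy.deepcopy(workspace_resource)
    updated.setdefault("properties", {})["retentionInDays"] = retention_days
    return updated


if __name__ == "__main__":
    for days in (30, 90, 365):
        print(days, "days retained ->", billable_retention_days(days), "billable days")
```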

You can also take advantage of commitment tier pricing offered by Microsoft Azure: depending on the tier and the volume of data you commit to ingesting daily, you may get a discount of up to 30% compared with pay-as-you-go.
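Whether a commitment tier pays off depends on how your daily ingestion compares with the tier. With a commitment tier, you are billed for the full tier even on days you ingest less, and usage above the tier is billed at the tier's effective per-GB rate. The Python sketch below compares both models; all prices in it are hypothetical round numbers chosen for illustration, not Azure list prices.

```python
# Hypothetical prices for illustration only; check the Azure pricing page for real values.
PAYG_PRICE_PER_GB = 2.00
TIER_GB_PER_DAY = 100
TIER_PRICE_PER_DAY = 150.00


def payg_daily_cost(ingested_gb: float, price_per_gb: float = PAYG_PRICE_PER_GB) -> float:
    """Pay-as-you-go: every ingested GB is billed at the same rate."""
    return ingested_gb * price_per_gb


def commitment_daily_cost(ingested_gb: float,
                          tier_gb: float = TIER_GB_PER_DAY,
                          tier_price: float = TIER_PRICE_PER_DAY) -> float:
    """Commitment tier: the full tier is billed even when ingestion is lower;
    usage above the tier is billed at the tier's effective per-GB rate."""
    effective_rate = tier_price / tier_gb
    overage_gb = max(0.0, ingested_gb - tier_gb)
    return tier_price + overage_gb * effective_rate


if __name__ == "__main__":
    for gb in (60, 75, 100, 120):
        print(f"{gb} GB/day: pay-as-you-go {payg_daily_cost(gb):.2f}, "
              f"commitment {commitment_daily_cost(gb):.2f}")
```

With these made-up numbers, the tier breaks even at 75 GB per day and saves 25% at exactly 100 GB; below 75 GB, pay-as-you-go is cheaper. The takeaway is to size the commitment to your steady daily ingestion rather than to your peaks.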

The same Usage and estimated costs page shows two useful charts for understanding the workspace's current consumption. The first, on the right, shows daily usage broken down by the solution that provides the data. The second shows the total data volume over the last 90 days.
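A similar per-solution breakdown can be computed from the workspace's Usage table, which records ingested volume in megabytes in its Quantity column, along with Solution and IsBillable columns. In KQL, that is roughly `Usage | where IsBillable == true | summarize BillableGB = sum(Quantity) / 1000 by Solution`. The Python sketch below mirrors the same aggregation over a few hypothetical rows so the arithmetic is explicit; the function name is hypothetical.

```python
from collections import defaultdict


def billable_gb_by_solution(records):
    """Sum billable Quantity (MB) per Solution and convert to GB.

    Divides by 1000 (decimal GB); use 1024 instead if you prefer binary units.
    """
    totals = defaultdict(float)
    for record in records:
        if record["IsBillable"]:
            totals[record["Solution"]] += record["Quantity"] / 1000
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical rows shaped like the Usage table.
    sample = [
        {"Solution": "LogManagement", "Quantity": 1500.0, "IsBillable": True},
        {"Solution": "Security", "Quantity": 500.0, "IsBillable": True},
        {"Solution": "LogManagement", "Quantity": 200.0, "IsBillable": False},
    ]
    print(billable_gb_by_solution(sample))
```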

Last but not least, we can configure a daily cap by defining the maximum number of GB ingested per day. Although it is a useful cost-control feature, it may be risky in a production environment: once the cap is reached, data collection stops until the next daily reset, so security-relevant events can be lost. You may prefer to save money in areas other than your logs.
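To see why a cap can hurt in production, the Python sketch below models one day of hourly ingestion against a cap and reports how much data would be dropped. It is a simplified model with a hypothetical function name: in practice, collection may stop with some delay after the cap is reached, so real numbers will differ somewhat.

```python
def apply_daily_cap(hourly_gb, daily_cap_gb):
    """Simplified model of a daily cap over one day of hourly ingestion.

    Returns (ingested_gb, dropped_gb). Real collection may stop with some
    delay after the cap is reached, so this is only an approximation.
    """
    ingested = 0.0
    dropped = 0.0
    for gb in hourly_gb:
        room = max(0.0, daily_cap_gb - ingested)  # capacity left before the cap
        accepted = min(gb, room)
        ingested += accepted
        dropped += gb - accepted  # everything beyond the cap is lost for the day
    return ingested, dropped


if __name__ == "__main__":
    print(apply_daily_cap([2, 2, 2, 2], daily_cap_gb=5))
```

Here, four hours of 2 GB each against a 5 GB cap yield `(5.0, 3.0)`: the last 3 GB, possibly including the events you most need during an incident, never reach the workspace. In an ARM template, the cap is the workspace's `workspaceCapping.dailyQuotaGb` property, where -1 means no cap.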

Managing access

Log analytics is a powerful tool and, in theory, can hold the diagnostic logs of every resource in a given subscription. Controlling access to that data is therefore crucial in your security design, and there are two access control modes, which we will call workspace mode and resource mode.

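In workspace mode (Require workspace permissions), users need permissions on the workspace itself, and workspace access grants access to all logs in it. In resource mode (Use resource or workspace permissions), users who have access only to specific Azure resources can query the log records of those resources without needing workspace permissions. To further scope access to specific tables for users or groups, we will use custom RBAC roles.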
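The difference between the two modes can be summarized as a small decision model. The Python sketch below is a deliberately simplified illustration, not the Azure authorization engine: it ignores table-level restrictions and only answers whose log records a user could query, given the mode, whether the user can read the workspace, and which resources the user can read. The names and sample resources are hypothetical.

```python
from enum import Enum


class AccessControlMode(Enum):
    """The two workspace access control modes (portal labels as values)."""
    WORKSPACE = "Require workspace permissions"
    RESOURCE_OR_WORKSPACE = "Use resource or workspace permissions"


def queryable_resources(mode, has_workspace_read, readable_resources, logged_resources):
    """Simplified model: whose log records can a user query in the workspace?

    - Workspace read permission exposes every resource's records in either mode.
    - In resource mode, resource permissions alone expose those resources' records.
    - Table-level restrictions are ignored in this model.
    """
    logged = set(logged_resources)
    if has_workspace_read:
        return logged
    if mode is AccessControlMode.RESOURCE_OR_WORKSPACE:
        return logged & set(readable_resources)
    return set()


if __name__ == "__main__":
    logged = {"vm-app", "vm-db", "kv-secrets"}  # hypothetical resources
    for mode in AccessControlMode:
        print(mode.value, "->", queryable_resources(mode, False, {"vm-app"}, logged))
```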

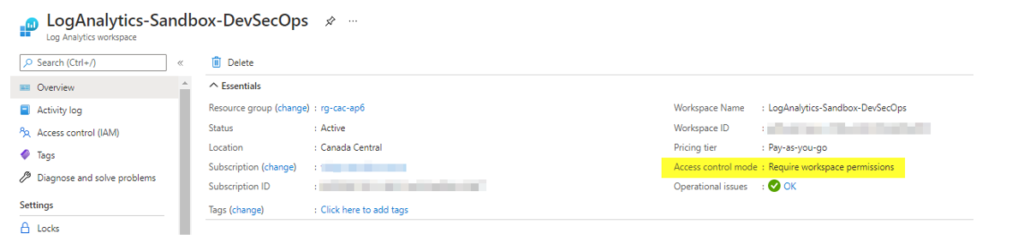

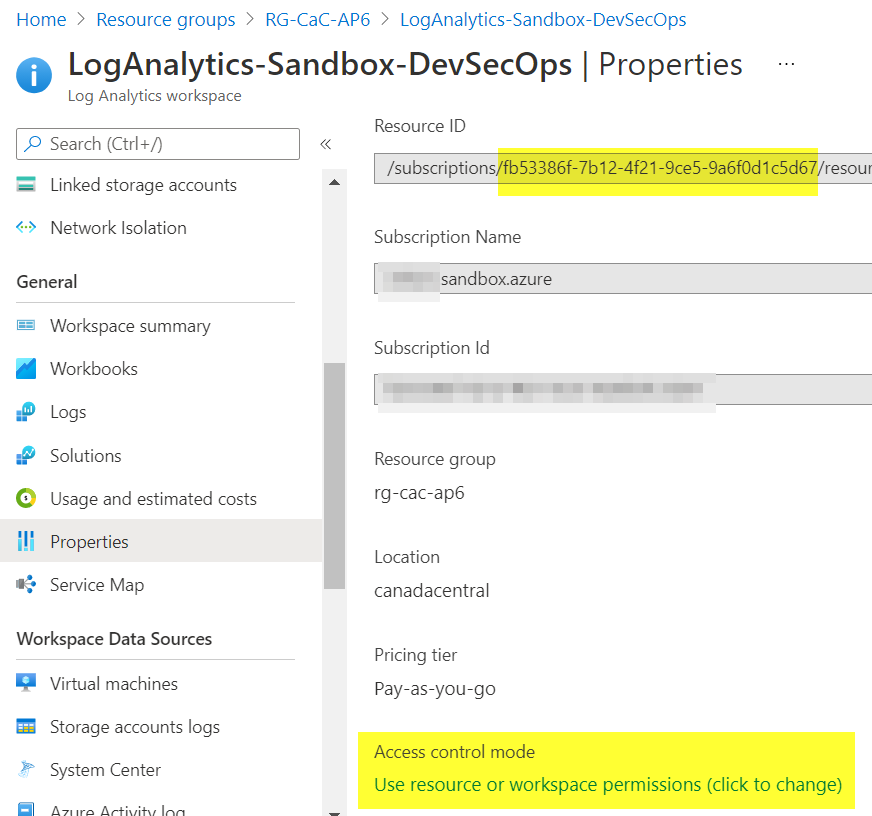

To configure permissions in our log analytics workspace, we can first check the current access control mode on the Overview page, as depicted in the image.

To change it, go to Properties and click the Access control mode link; a single click switches the mode.
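If you manage the workspace as code, the access control mode maps to the workspace property `features.enableLogAccessUsingOnlyResourcePermissions`, whose name is easy to misread: `true` corresponds to Use resource or workspace permissions, and `false` to Require workspace permissions. The Python sketch below sets it on a copy of the workspace's ARM properties; the helper name is hypothetical.

```python
import copy


def with_access_control_mode(workspace_properties: dict, use_resource_permissions: bool) -> dict:
    """Return a copy of workspace ARM properties with the access control mode set.

    enableLogAccessUsingOnlyResourcePermissions:
      True  -> "Use resource or workspace permissions"
      False -> "Require workspace permissions"
    """
    updated = copy.deepcopy(workspace_properties)
    updated.setdefault("features", {})[
        "enableLogAccessUsingOnlyResourcePermissions"
    ] = use_resource_permissions
    return updated


if __name__ == "__main__":
    props = {"sku": {"name": "PerGB2018"}}
    print(with_access_control_mode(props, use_resource_permissions=True))
```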

Keep in mind that table-level Azure RBAC requires custom RBAC roles to be effective.
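As an illustration, the Python sketch below builds a custom role definition that grants read access to specific tables only, using the per-table action format `Microsoft.OperationalInsights/workspaces/query/<TableName>/read` that Microsoft has documented for table-level access. Microsoft also documents newer approaches, so check the current guidance before relying on this format. The role name, the table list, and the subscription scope are hypothetical placeholders.

```python
import json


def build_table_reader_role(role_name: str, tables: list, assignable_scope: str) -> dict:
    """Return a custom role definition granting read access to specific tables only.

    Uses the per-table action format
    Microsoft.OperationalInsights/workspaces/query/<TableName>/read.
    """
    actions = [
        "Microsoft.OperationalInsights/workspaces/read",
        "Microsoft.OperationalInsights/workspaces/query/read",
    ]
    actions += [f"Microsoft.OperationalInsights/workspaces/query/{table}/read" for table in tables]
    return {
        "Name": role_name,
        "IsCustom": True,
        "Description": "Read access to these tables only: " + ", ".join(tables),
        "Actions": actions,
        "NotActions": [],
        "AssignableScopes": [assignable_scope],
    }


if __name__ == "__main__":
    role = build_table_reader_role(
        "DevSecOps Table Reader",              # hypothetical role name
        ["SecurityEvent", "AzureActivity"],
        "/subscriptions/<subscription-id>",    # placeholder scope
    )
    print(json.dumps(role, indent=2))
```

Saved as role.json, such a definition could be created with `az role definition create --role-definition @role.json` and then assigned to a user or group.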

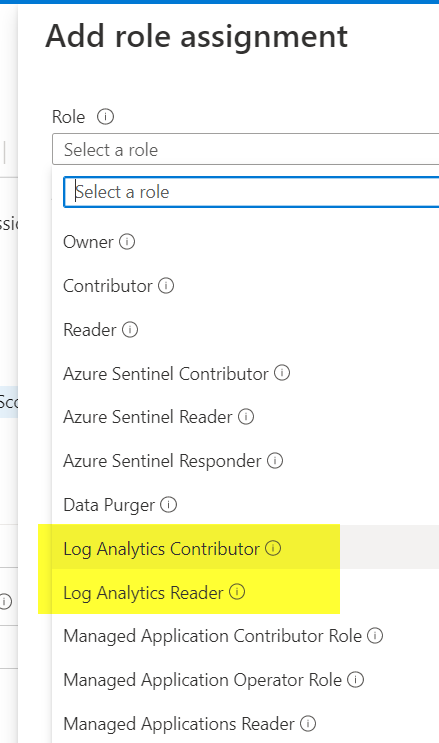

When assigning built-in RBAC roles on a log analytics workspace, two roles are specific to it: Log Analytics Contributor and Log Analytics Reader. They are available when assigning permissions, as depicted in the image.
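To script these assignments, the Python sketch below builds the workspace's resource ID and the argument list for `az role assignment create`, choosing between the two built-in roles. It prints the command rather than running it; the helper names, the "read"/"manage" labels, and the subscription, resource group, and assignee values are hypothetical placeholders.

```python
import shlex

# Built-in roles named in this article, keyed by a hypothetical "need" label.
WORKSPACE_ROLES = {
    "read": "Log Analytics Reader",
    "manage": "Log Analytics Contributor",
}


def workspace_scope(subscription_id: str, resource_group: str, workspace_name: str) -> str:
    """Build the Azure resource ID of a Log Analytics workspace."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.OperationalInsights/workspaces/{workspace_name}")


def role_assignment_args(assignee: str, need: str, scope: str) -> list:
    """Return argv for `az role assignment create` with a built-in workspace role."""
    return ["az", "role", "assignment", "create",
            "--assignee", assignee,
            "--role", WORKSPACE_ROLES[need],
            "--scope", scope]


if __name__ == "__main__":
    scope = workspace_scope("<subscription-id>", "<resource-group>", "LogAnalytics-Sandbox-DevSecOps")
    # Print the command instead of running it; pass the list to subprocess.run to execute.
    print(shlex.join(role_assignment_args("<group-object-id>", "read", scope)))
```

Scoping the assignment to the workspace, rather than to the resource group or subscription, keeps the permission limited to this workspace.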

Stay tuned for Parts 2 and 3

This first article of our series covered the basic steps to create our log analytics workspace using the Azure Portal, along with the additional services that support it. We also tackled some critical security features of the Azure log analytics workspace, including auditing, a linked storage account for saved queries, a brief overview of how to switch the access control mode for the logged data, and RBAC permissions.

Featured image: Shutterstock