In a previous TechGenix article, Building a custom VDI stack for Windows 10, I interviewed expert James Rankin about the custom stack of tools and services he uses to implement VDI solutions for customers (his blog can be found here). At the end of that interview, I asked James whether he could show us how he would use his stack to implement a Windows 10 VDI solution for a typical fictitious company. James said he would be happy to, but that it would require a second interview and some preparatory work. This article is the result of that second interview and of the hard work James did behind the scenes to prepare for it. Let’s now listen and learn from James as he implements his custom VDI stack with Windows 10 for a fictitious company named Contoso Ltd.

MITCH: James, let’s say you’ve been tasked with using your VDI stack of Cloudhouse, FSLogix Profile Containers, AppSense DataNow, a custom monitoring service, and UniPrint to implement a VDI solution for a fictitious customer like this one:

Contoso Ltd. is a training provider that has many distributed offices throughout the country, with around 2,000 permanent staff and 10,000 seats dedicated to providing training. Their current infrastructure is very “traditional”, comprising physical Windows 7 endpoints with software installed manually (via Software Installation Policies) and managed by GPOs and logon scripts. What they find particularly challenging is the lack of flexibility: some of their training courses require software that doesn’t sit well with other software installed on the machines, so they end up doing a lot of reimaging between courses to prepare the machines for their next usage scenario. VDI would make them much more flexible: machines could be reimaged quickly, software could be “abstracted” away so that it doesn’t clash with other installed products, their classrooms could be used for different courses without any preparation, and performance could be improved. At the same time, their “backoffice” staff could also benefit, receiving a solution that not only lets them roam around the different offices with a minimum of disruption but also provides a ready-made mobile working platform. There is also the possibility that they could move towards providing remote rather than classroom-based training, using VDI as the core for this.

Given the above scenario, can you walk us through, step by step, how you might approach implementing a VDI solution for this customer using your stack of tools and services? You can keep your walkthrough at a bird’s-eye level, i.e., there’s no need to get caught up in nitty-gritty details. What we’d like to understand is how you would approach such a project and what general series of steps would bring it to a successful conclusion.

JAMES: The key part of this is always going to be the applications. Our first job would be to assess the application estate and begin the packaging process. At this point, we’d be looking out for applications that could cause problems (old browser apps, add-ons, 16-bit apps, and the like) so that we can get them remediated and tested quickly. It is usually a very good idea at this stage to introduce some of the client’s staff to the packaging process and let them start doing it themselves so that they build up familiarity with it. We usually find that they take to it very quickly and help us accelerate this whole stage. At the same time, we’d start putting in some SCCM infrastructure and work on building the base Windows 10 image.

Once the applications are in place, everything starts to come together for the client and for us. As soon as this particular customer can see the applications being delivered “on-demand” to users rather than devices, they can instantly start to appreciate the benefits it brings. In fact, in this particular situation, I’d be tempted to also allow them to run the virtualized applications on the existing estate during the migration process because it gives them so much flexibility. At this point, though, we’d start to implement the thin clients (which could be bought new or could simply be their existing endpoints repurposed) and begin running the Windows 10 VDI solution in some specific test areas.

Within the Windows 10 image we would have the relevant agents installed (FSLogix, Cloudhouse, UniPrint, DataNow, and our monitoring daemon), and we would also need to extend at least one of these (the monitoring one) to the existing estate so we can start scanning for non-standard data areas. So the “pilot” phase would involve application testing on the new Windows 10 platform and data gathering on the old estate. Once this phase is finished, we will have the information on data, profiles, and printing that we need to build the Group Policy Objects that enable the migration to the live environment.

Then we can begin the actual migration. We would extend the DataNow client onto the existing estate at this point and start to synchronize user data in the background. Once this is finished, the user will be marked ready for migration and we can add them to a group that triggers the process (of course, the “classroom” areas can be done on a phased basis, as there will be no user data to be managed). At this point, they will have their machines replaced or repurposed and at next logon they will fire up a virtual Windows 10 desktop. When they log in, their applications will be virtualized onto the endpoint, their profile will be mounted on a VHD across the network, their data will be represented by overlays which will sync down either on-demand or in the background, and their printer(s) should be mapped as expected.

In this sort of environment, I’d expect the “classroom” areas to be much simpler than the “backoffice” areas, so it could possibly be done in two distinct phases if resources on the client end were tight. There will, of course, always be a “mop-up” phase. Anyone who claims that they have a technology that makes migrations of this sort seamless is clearly lying! But with technologies of this type, mop-up becomes easier. If you have to reimage, the user’s applications, data, and profile are ready to be re-injected as soon as the SCCM build finishes, and because we wouldn’t have many applications in the image (probably just Office and a browser, along with the required agents), the Task Sequence is much simpler and quicker. Additionally, because we have “hands-off” monitoring, the IT department can simply react to alerts of problems instead of having to spend time actively scanning for them.

It’s all about simplicity and sustainability. In this situation, once this migration finishes they can easily slot into Microsoft’s two-tiered upgrade channel and keep up-to-date without worrying about impact — they just need to test their apps as new versions come along. And that’s a good thing, because instead of constantly firefighting issues IT departments should be free to do what they’re supposed to — find new ways of enhancing the business and adding value. In this case, I’d expect this sort of solution to allow them to explore remote working and “distance learning” as new revenue streams using their Windows 10 VDI to support it.
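Editor’s sketch: the migration gate James describes above (sync each user’s data in the background, mark the user ready once the sync completes, then add them to the group that triggers the move) can be illustrated in a few lines of Python. This is only a minimal illustration under stated assumptions. The `UserState` record, its field names, and the two functions are hypothetical and are not part of DataNow, SCCM, or Active Directory; a real project would read sync status from the product’s own reporting and update group membership through the directory.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UserState:
    """Hypothetical per-user migration record (not a DataNow or AD object)."""
    name: str
    is_classroom: bool = False  # classroom seats carry no user data to manage
    files_total: int = 0        # files discovered on the old endpoint
    files_synced: int = 0       # files already copied in the background


def ready_for_migration(user: UserState) -> bool:
    """A user is ready once every discovered file has been synchronized."""
    if user.is_classroom:
        return True  # nothing to sync, so these can be phased in at any time
    return user.files_synced >= user.files_total


def select_migration_group(users: list[UserState]) -> list[str]:
    """Return the names that could be added to the group that triggers migration."""
    return [u.name for u in users if ready_for_migration(u)]


if __name__ == "__main__":
    estate = [
        UserState("classroom-seat-01", is_classroom=True),
        UserState("alice", files_total=1200, files_synced=1200),
        UserState("bob", files_total=800, files_synced=350),
    ]
    # bob stays on the old estate until his background sync completes
    print(select_migration_group(estate))  # ['classroom-seat-01', 'alice']
```

The point of the gate is that nobody is moved to a virtual desktop until their data is already waiting for them, which is what keeps the first logon uneventful.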

MITCH: Can you show us a bit of how all this works?

JAMES: Don’t see why not. Here, let me fire up the company lab…OK here goes.

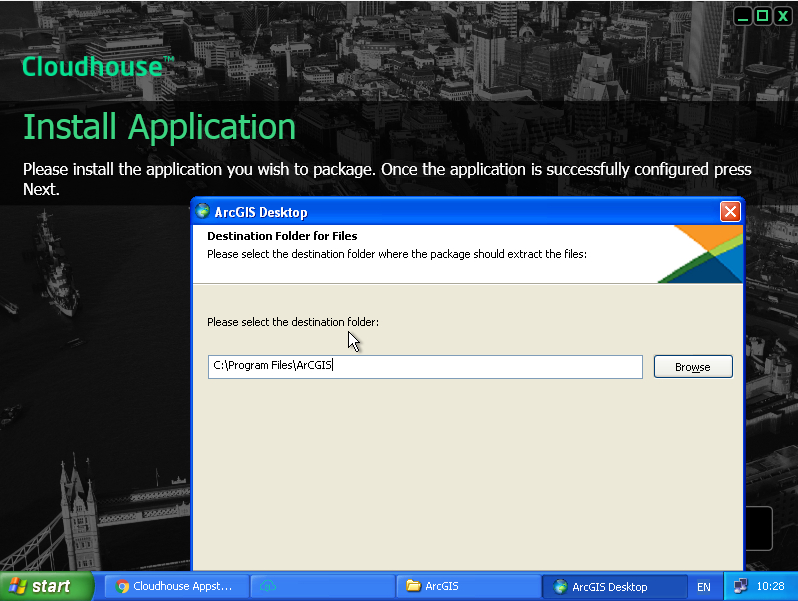

Here we are packaging up an application, using a Windows XP x86 endpoint as our base because that makes legacy apps easier (and even hard apps like ArcGIS!):

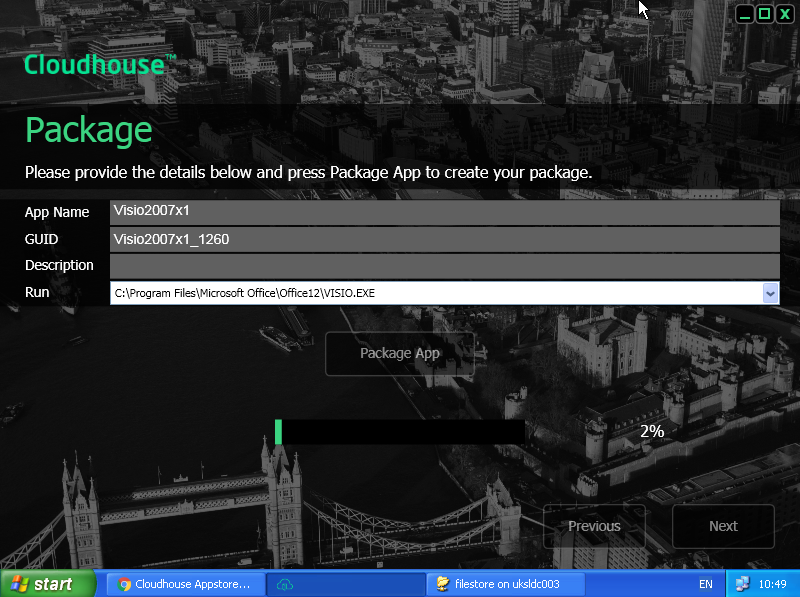

Here’s the actual application packaging up into a self-contained file:

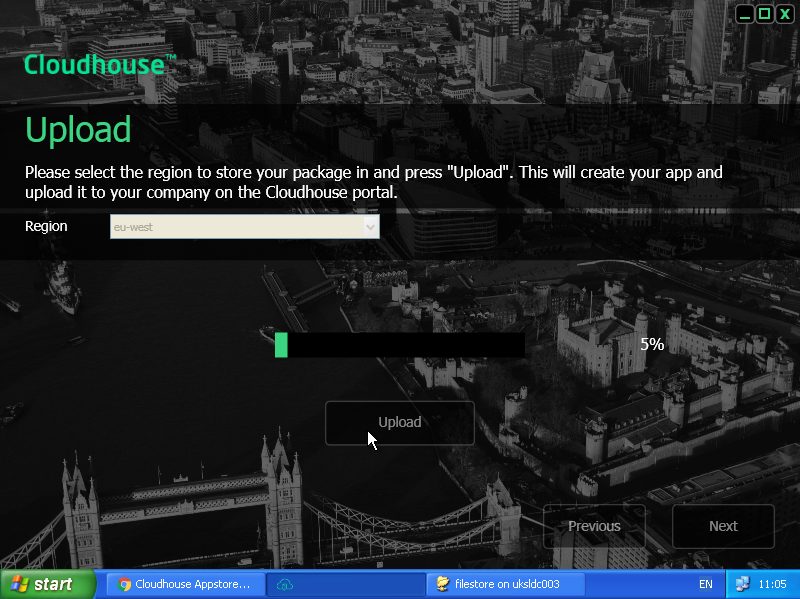

And here is the package uploading to the Azure portal for distribution to remote clients:

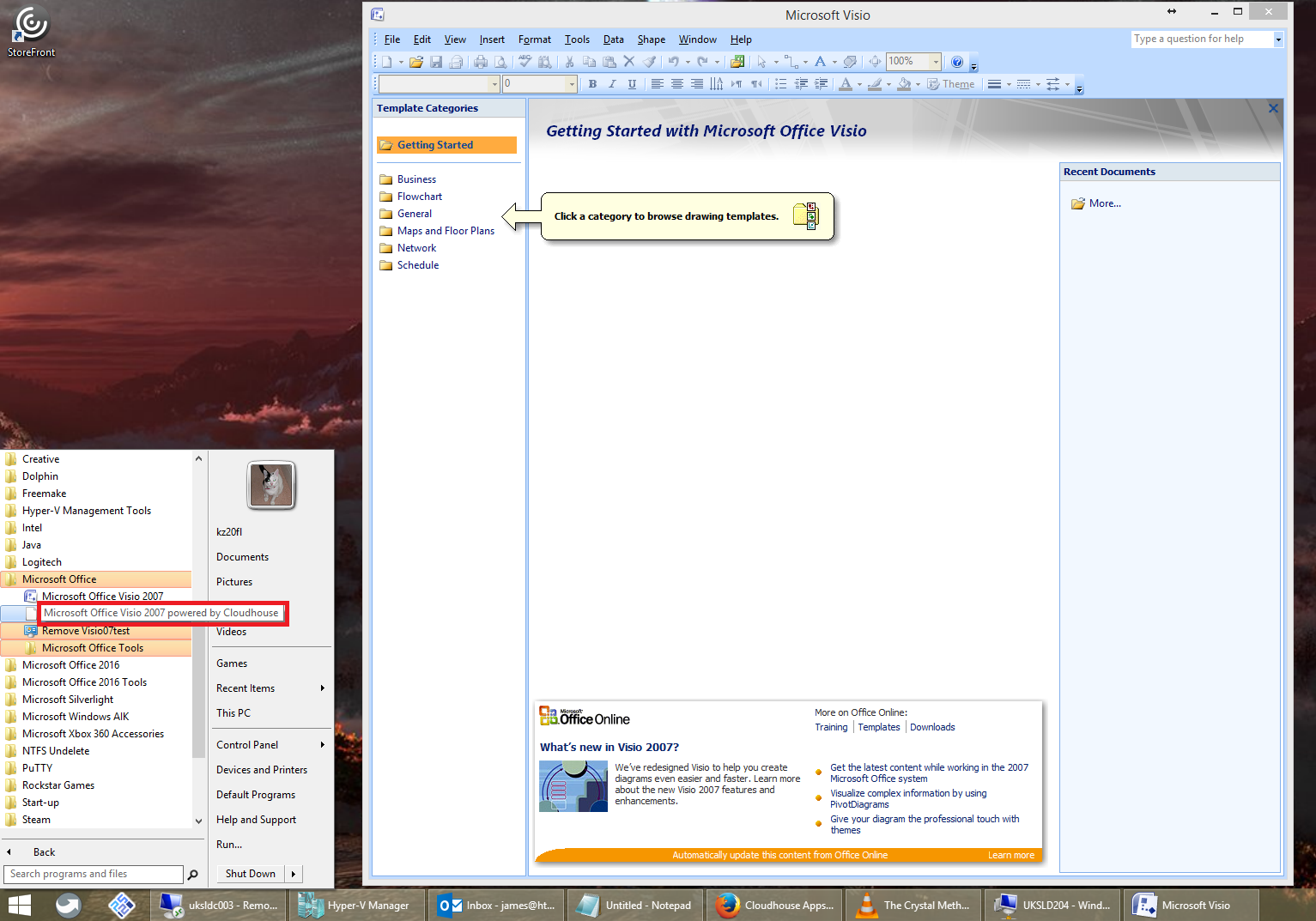

Here is a packaged application running from a remote endpoint, which can run straight from the Start Menu or direct from the Azure portal:

Here the user gets their profile personalized by FSLogix Profile Containers on an internal Windows 10 instance, with AppSense DataNow delivering files (denoted by the green overlays):

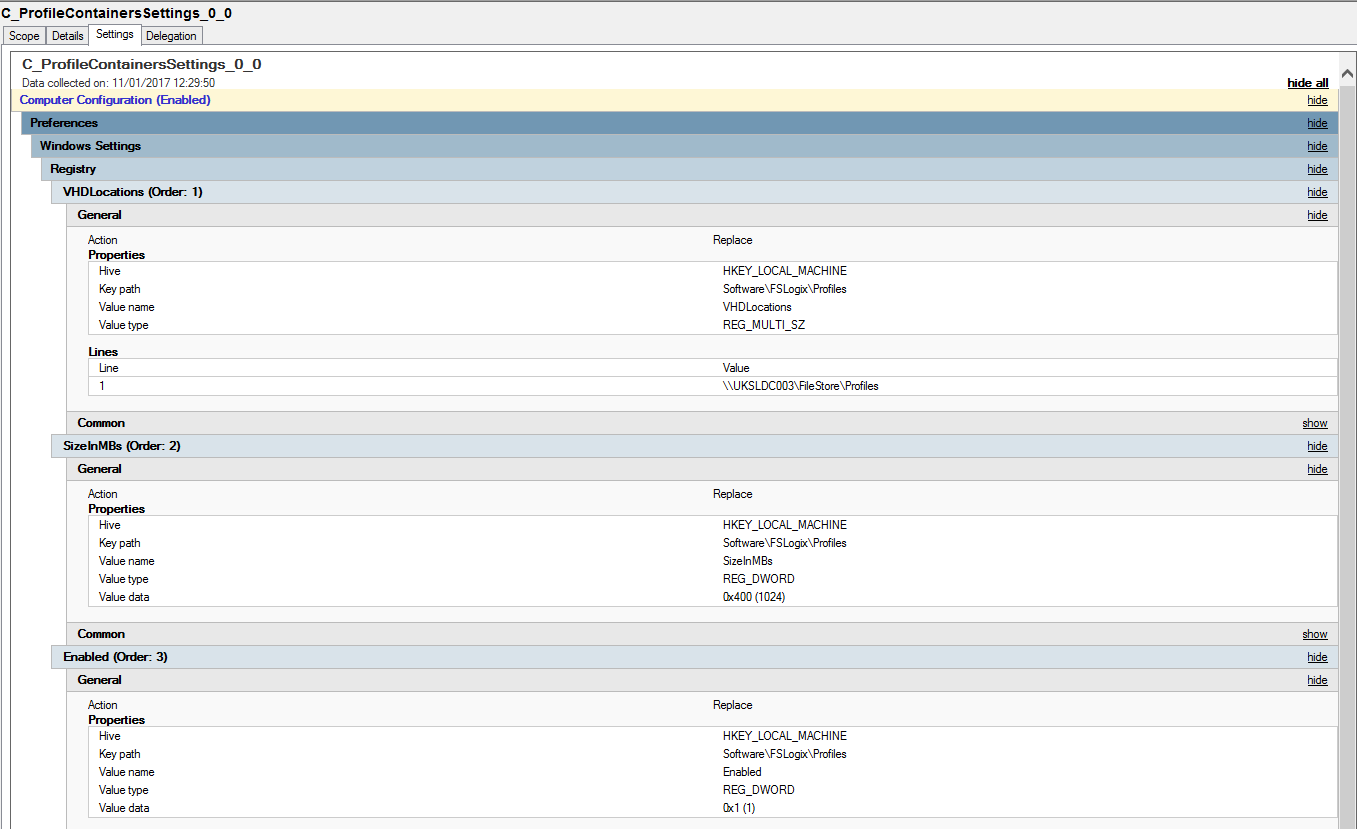

Here we see how easy it is to configure FSLogix (and DataNow, for that matter), simply by using a straightforward GPO setting:

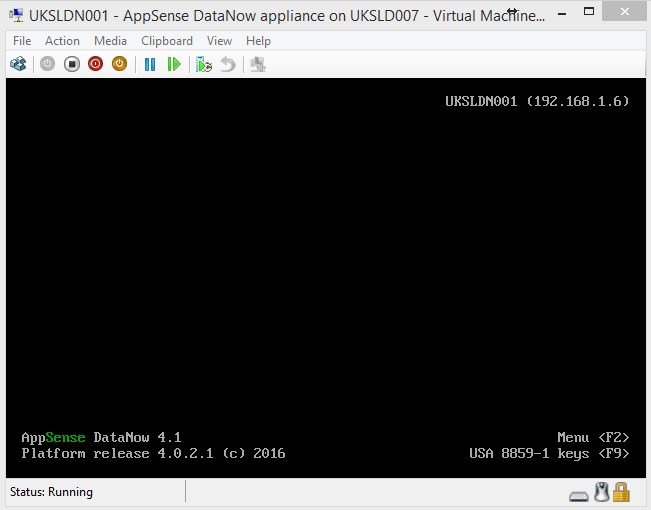
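Editor’s sketch: for readers who have not used FSLogix, the Profile Containers policy comes down to a small number of registry values under `HKLM\SOFTWARE\FSLogix\Profiles`, chiefly `Enabled` and `VHDLocations`. The Python below renders those two values as `.reg` text so the shape of the configuration is visible. It is a rough illustration only: the function name and the share path are placeholders, it does not reproduce the settings in James’s lab, and in practice the values would normally be delivered by the GPO rather than by a file like this.

```python
def fslogix_profile_reg(vhd_location: str) -> str:
    """Render a minimal .reg fragment that enables FSLogix Profile Containers.

    vhd_location is a UNC path to the profile share (placeholder value here).
    """
    # .reg string values need each backslash doubled
    escaped = vhd_location.replace("\\", "\\\\")
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        r"[HKEY_LOCAL_MACHINE\SOFTWARE\FSLogix\Profiles]",
        '"Enabled"=dword:00000001',          # 1 turns Profile Containers on
        f'"VHDLocations"="{escaped}"',       # where the profile VHDs are stored
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical file share; substitute the real profile store
    print(fslogix_profile_reg(r"\\fileserver\profiles"))
```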

Here’s the Linux appliance that runs the DataNow infrastructure, easily importable into virtual infrastructure:

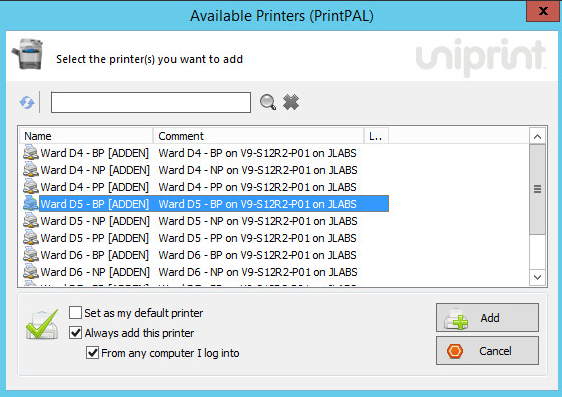

The user can easily take advantage of secure printing via UniPrint as well:

And can easily select printers to add to their session without browsing or searching:

MITCH: Fantastic, thanks, James. Moving on now, what are some of the biggest challenges you’ve had to face in your experiences with migrating customers to Windows 10 VDI solutions? I’m sure you must have encountered a few headaches that have left you either scratching your chin or pulling out your hair in frustration.

JAMES: It’s not just Windows 10 VDI. We also encounter challenges with physical estates when migrating to Windows 10. VDI does have challenges in that storage and networks need to be far more up to scratch, and monitoring the environment becomes a case of concentrating as much on the client end as on the server, network, and storage. You’ve got to be careful not to introduce too many complicated technologies to support a VDI stack. Take a “traditional” customer and lump them with Citrix XenDesktop, Provisioning Services, something like AppSense or RES, and maybe even App-V, and you’ve created a monster in terms of the high-end technical skills required. This is something we try to avoid. If you need some of the “complex” bits to fit the solution, either keep the number of complex bits down or use something that you already have skills in or familiarity with. Our preferred stack is all about keeping it simple.

I think the main challenge is getting people to buy into a different way of thinking with regard to management, deployment, and testing. Virtualizing most (or all) of your applications is something people traditionally push back against. They are afraid of the unknown and worry about ending up with an estate where there is a mish-mash of technologies that all require specialist skills. But I think that is changing, because people always seem to be impressed by how easy it is to virtualize even the difficult applications. And trying to convince people to implement a monitoring tool before they perform a migration project still drives me mad. I can’t understand why monitoring and baselining always seem to be last on everyone’s radar.

Of course, in an ideal world, everyone would run their applications through some sort of cloud-based provider and our lives would be so much easier. But again, people have a gut reaction to it, an innate distrust of cloud services. We struggle to convince them that they would probably save money by hosting their apps in Azure rather than on-premises. And of course there are industries where regulation and security make them especially sensitive to data location and sovereignty, so we do sometimes end up going down on-premises routes.

And there are always the real PITA apps that mean you do have to layer a bit of complexity into the solution. IE6-based browser apps are a particular bugbear. For these IE6-reliant applications, we will often end up standing up legacy infrastructure either through Citrix XenApp or a VM Hosted Apps farm. This isn’t an ideal solution, but luckily these applications that rely exclusively on IE6 are becoming much rarer. IE7 onwards is simple enough for tech like Cloudhouse — it’s anything prior that gives us the issue.

Medical software can also be particularly problematic, mainly because it is tied to exotic, unusual, and expensive pieces of equipment, and the vendor will only ever issue an update when the hardware fails. We’ve seen applications running on very old Microsoft operating systems (Windows 2000 and NT, in recent memory) simply because that was the main OS when the software was written, and the vendor sees no reason to change it because the hardware will last for years and years. Fortunately, these cases are becoming rarer, but they still call for some real innovation and head-scratching when we bump into them.

And finally, we need to get support teams out of the habit of dealing solely with day-to-day issues and into the habit of concentrating on application testing and remediation. The “flights” of Windows 10 Insider builds make this somewhat easier when we get to Windows 10, but it still involves a sea change in how they approach their day-to-day workloads. Support teams need to change their approach slightly. Maybe they’re not quite DevOps, but more TestOps, in a Windows 10-based world.

MITCH: Thanks, James. How about if we finish off by letting you spout off for a bit about anything you want concerning technology and the IT profession e.g. future trends, biggest worries, your favorite smartphone app, or whatever takes your fancy.

JAMES: I love trying to predict future trends (usually quite badly). I thought “app refactoring” (software that allows you to re-skin applications to run on form factors like mobiles and tablets) was going to be a big thing, but it looks like it’s fading away, so what do I know?

But I think that containerization and virtualization of applications will finally start going mainstream this year, to the extent that traditional deployment really won’t matter for anything except the commonest of applications (browsers, office software, etc.). Containerizing applications and services will be big. I think that’s where Windows is headed and it’s where we need to be.

I also think that IT support staff will become more focused on “delivery” than on merely “keeping the lights on”. I’ve seen some amazing stuff done by people who’ve used Amazon’s Echo together with Octoblu to build servers and appliances simply by asking for them. The old notion of the “support team” building things and fighting fires is over. We’ve now got to be doing testing, remediation, and vendor engagement as part of the “delivery” stream.

I think it’s all exciting though. We’re getting back to being concerned with the development of new features, with dealing with orchestration and automation rather than just fixing things that don’t work, and that means I think my job may be getting more interesting. Interesting is always good!

MITCH: Thank you, James, for taking the time to answer my questions at such a level of depth!

JAMES: You’re welcome!