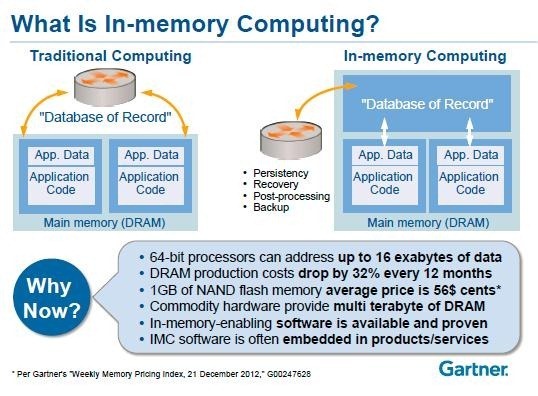

Digital transformation is no longer just a buzzword at futuristic technology conferences; it’s the reality staring enterprises in the face today. Organizations need to innovate much faster than they ever have, and that is why in-memory computing is seeing increasing adoption.

By keeping working data in RAM rather than fetching it from disk, in-memory computing delivers fast, responsive results and can significantly improve the way organizations experience and use technology. In-memory solutions scale across multiple servers, support disk and flash storage, and can be deployed in the cloud too. No, not overseas, in the cloud!

And if you want to learn more about the cloud, do not ask Rocky Balboa (“What cloud! What cloud!” he says in the movie “Creed”). He knows the restaurant business, boxing, and how to punch frozen meat, though!

In spite of all the potential that in-memory computing brings to the enterprise technology ecosystem, a few myths remain as prevalent as ever. If you’re a technology enthusiast, IT decision maker, or software innovator, it’s important to know better than to believe them.

Myth 1: In-memory solutions don’t retain data persistently

This is not true. In-memory databases that integrate solid-state drives (SSDs) and disks use part of that storage to retain a copy of the data. If a server fails or restarts, wiping the contents of memory, the data can be recovered from disk.

Also, advanced in-memory solutions can replicate data across multiple nodes, delivering high availability. With recovery and high availability in place, in-memory solutions can be deployed reliably in operational environments, where they deliver high-speed operations without compromising data availability or accuracy.
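
To make the recovery idea concrete, here is a minimal sketch of an in-memory key-value store that appends every write to a log file and replays that log on startup. It is a toy under stated assumptions, not how any particular product works: the `DurableDict` class, the JSON log format, and the `demo_recovery` helper are all hypothetical names invented for illustration, and replication across nodes is left out.

```python
import json
import os
import tempfile


class DurableDict:
    """Toy in-memory key-value store that also logs every write to disk.

    Hypothetical illustration only; real in-memory databases use far more
    sophisticated snapshotting and logging schemes.
    """

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}      # the in-memory copy that serves all reads
        self._replay()      # rebuild memory from the on-disk log, if one exists
        self._log = open(log_path, "a", encoding="utf-8")

    def _replay(self):
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                self.data[record["key"]] = record["value"]

    def put(self, key, value):
        # Persist first, then update memory, so an acknowledged write
        # is never present only in RAM.
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        self.data[key] = value

    def get(self, key):
        return self.data.get(key)   # served from memory, no disk access

    def close(self):
        self._log.close()


def demo_recovery():
    path = os.path.join(tempfile.mkdtemp(), "store.log")
    store = DurableDict(path)
    store.put("order:1", {"total": 42})
    store.close()                   # simulate a restart: the in-memory dict is gone

    recovered = DurableDict(path)   # a fresh instance replays the log
    value = recovered.get("order:1")
    recovered.close()
    return value
```

Calling `demo_recovery()` writes one record, discards the first instance, and reads the record back from a second instance that has only the log file to go on. Reads never touch the disk; only writes pay the persistence cost.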

Myth 2: We don’t need it

The one thing that’s always true about technological transformations is this: you’re either on board or you risk being run over. In-memory computing is no different. However, organizations are often myopic about technologies they don’t fully understand. Remember Xerox’s colossal mistake? Its PARC lab pioneered the graphical user interface and refined the mouse, but the company did not grasp what it had, and Steve Jobs and his Apple colleagues came over, took a look, and walked away with the ideas for a ridiculously low price!

Arguments such as these are being voiced in corporate boardrooms and vendor negotiations around the globe:

- We are not looking to increase processing speed.

- When we need fast processing, we will buy more RAM.

- In a few years, we will replace HDDs with SSDs.

Buying more RAM is not a substitute for in-memory computing, because the two are not alternatives to each other: extra RAM helps only if the software is designed to keep and process its data there. Likewise, solid-state drives offer faster reads and writes than hard disk drives, but replacing HDDs with SSDs will, at best, deliver only marginal improvement to your applications.

In-memory computing is about significantly transforming the speed of application operations. With more organizational functions performed via applications, massive and ever-expanding databases, and growing dependence on cloud applications, there are more and more business cases where high speed brings greater efficiency and better business results.

This calls for a technology that delivers 10x to 100x speed improvements, enabling applications to deliver business benefits that were never feasible before.

Myth 3: In-memory computing and in-memory databases – they’re the same

Well, we’d say software vendors are to blame for this misconception, because the marketing of IT solutions has increasingly been reduced to tactics such as overwhelming organizations with information they don’t understand! As a result, much of the industry is blinded by the belief that in-memory computing is all about SAP HANA, Oracle Exalytics, and QlikView.

In-memory computing is a technology, not a product. It’s something that technology innovators can use to create powerful products. Because enterprises only hear about in-memory computing in the context of ERP and peripheral tools such as the ones mentioned above, they believe it is equivalent to databases that enable faster data retrieval.

In reality, in-memory databases are among the first (and rather simplistic) applications of in-memory computing.

The long-term growth and business benefits that in-memory computing can deliver are centered on streaming use cases. A streaming process typically involves events entering a system at massive rates, each of which must be indexed accurately and processed continuously in real time.

Conventional disk I/O can be overwhelmed by these workloads, which means in-memory computing will become a necessity for business applications that handle such massive event inflow.
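
As a rough illustration of a streaming workload, the sketch below keeps a per-key index of recent events entirely in memory and updates a running count as each event arrives. The `SlidingWindowCounter` class and the sensor names are hypothetical, and the code assumes timestamps arrive in non-decreasing order for each key; a production stream processor would also handle out-of-order events, partitioning, and fault tolerance.

```python
from collections import defaultdict, deque


class SlidingWindowCounter:
    """Counts events per key over the last `window` seconds, all in memory.

    Hypothetical sketch; assumes timestamps for a given key never go backwards.
    """

    def __init__(self, window):
        self.window = window
        self.events = defaultdict(deque)    # key -> timestamps, oldest first

    def record(self, key, timestamp):
        """Index one event and return the current in-window count for its key."""
        timestamps = self.events[key]
        timestamps.append(timestamp)
        # Drop events that have fallen out of the window.
        while timestamps and timestamps[0] <= timestamp - self.window:
            timestamps.popleft()
        return len(timestamps)


def demo_stream():
    counter = SlidingWindowCounter(window=10)
    stream = [("sensor-a", 0), ("sensor-a", 4), ("sensor-b", 5), ("sensor-a", 12)]
    # Each event is processed as it arrives; nothing is written to disk.
    return [counter.record(key, ts) for key, ts in stream]
```

Every event costs a couple of in-memory operations, which is what lets this pattern keep up with high event rates. Here `demo_stream()` returns `[1, 2, 1, 2]`: by the time the event at second 12 arrives, the one from second 0 has aged out of the 10-second window.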

Myth 4: With in-memory computing, the entire dataset must be stored in memory

That’s not true of today’s advanced in-memory computing solutions, although some early versions may have required the entire dataset to be held in memory, with a memory footprint equal to the size of the dataset. Now, however, in-memory databases can retain data on SSDs or hard disks in conjunction with memory. For instance, the data can reside on disk while memory serves as a cache. This enables better analytics and retention, particularly for large datasets.
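
The disk-plus-cache arrangement can be sketched in a few lines. In the hypothetical `CachedStore` below, the full dataset lives in a SQLite file on disk, while a small least-recently-used (LRU) cache in memory serves the hottest keys. The class name, the `kv` table, and the `disk_reads` counter are assumptions made for illustration, not the design of any specific product.

```python
import os
import sqlite3
import tempfile
from collections import OrderedDict


class CachedStore:
    """Full dataset on disk (SQLite); only the most recently used keys in memory."""

    def __init__(self, db_path, cache_size):
        self.db = sqlite3.connect(db_path)
        self.db.execute("CREATE TABLE IF NOT EXISTS kv (k TEXT PRIMARY KEY, v TEXT)")
        self.cache = OrderedDict()      # in-memory LRU cache, oldest entry first
        self.cache_size = cache_size
        self.disk_reads = 0             # counts cache misses that went to disk

    def put(self, key, value):
        self.db.execute("INSERT OR REPLACE INTO kv VALUES (?, ?)", (key, value))
        self.db.commit()
        self._remember(key, value)

    def get(self, key):
        if key in self.cache:
            self.cache.move_to_end(key)     # mark as most recently used
            return self.cache[key]
        self.disk_reads += 1
        row = self.db.execute("SELECT v FROM kv WHERE k = ?", (key,)).fetchone()
        if row is None:
            return None
        self._remember(key, row[0])
        return row[0]

    def _remember(self, key, value):
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used key


def demo_cache():
    store = CachedStore(os.path.join(tempfile.mkdtemp(), "data.db"), cache_size=2)
    for i in range(5):
        store.put(f"k{i}", f"v{i}")     # five rows on disk, only two kept in memory
    first = store.get("k0")             # miss: fetched from disk, then cached
    second = store.get("k0")            # hit: served from memory
    result = (first, second, store.disk_reads, len(store.cache))
    store.db.close()
    return result
```

The memory footprint is capped by `cache_size` no matter how large the table on disk grows, which is the point of the myth-buster above: memory holds the working set, not the whole dataset.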

When in-memory solutions use multiple media types, extract, transform, and load (ETL) operations also become more efficient and take less time. Plus, long-term as well as short-term data can be stored in one system. Mixing real-time data with historical data greatly improves the quality of the predictive analyses that systems can perform. No, this does not mean you can predict the future. And no, this has nothing to do with the Flux Capacitor!

Myth 5: In-memory computing solutions are too expensive for most enterprises

DRAM prices are at all-time lows and are expected to drop further in the coming years. Server and cloud vendors are competing hard on price. Forces such as these make now the best time for enterprises to float requests for proposals for technology solutions that use in-memory computing.

Whether your enterprise wants an on-premises tool or prefers a cloud deployment, in-memory computing is affordable. For performance-driven workloads in particular, the pricing proves cost-effective because the work can be distributed across several low-cost servers. With use cases increasing and prices decreasing, the proliferation of in-memory computing in enterprises of all sizes and verticals is a matter of time.

Reap the benefits

It’s time for enterprise IT decision makers to look beyond the marketing hoopla created by IT vendors around expensive products that now use in-memory databases, and instead focus on in-memory computing in its entirety. Only then can they map the benefits of the technology to their organization’s tech-driven business goals.

By digging past the superfluous layers, you can help your enterprise benefit from the tremendous potential of in-memory computing.

Photo credit: Freerange Stock