How We Got Here

The last official update to the protocol that underlies the Web, HTTP/1.1, was made back in 1999. Needless to say, 13 years later the web is a radically different place. HTTP has served well but its age has become apparent with the rise in complexity of modern web pages. HTTP’s document-centric fetch model, for example, is under strain in a world where a typical web page is assembled from dozens of images, scripts, and social widgets. Each resource retrieved typically requires a separate HTTP GET request, with all of its associated protocol overhead. Nor is HTTP’s unidirectional pull model a good fit for the modern real-time web with its near constant updates – think of the typical stock quote, news feed or multi-player game.

These and other limitations have led to three major initiatives to modernize the Web. One, Google’s SPDY, consists of several novel optimizations to the existing HTTP protocol. Another, WebSocket, is a brand new standards-track protocol that replaces HTTP for certain use cases. Both SPDY and WebSocket run over TCP and use the same ports, 80 and 443, as the existing HTTP and HTTPS protocols, so firewalls are generally not an issue, though proxy servers introduce some complications (not unlike IPv6). Both are deployed in significant corners of today’s Web and their use (and likely standardization) is growing rapidly. Finally, Microsoft has proposed combining pieces of SPDY and WebSocket in what it calls HTTP Speed+Mobility.

In addition to these three initiatives at the application layer, much research has been done on possible Web-friendly optimizations in the TCP layer. TCP’s “Slow Start” mechanism, for example, is a significant problem on today’s Web. We discuss it further at the end but mention it here because Slow Start is one of the principal underlying causes of HTTP’s current limitations.

This article discusses SPDY and WebSocket, as they are the furthest along, with several years of real-world practice behind them. HTTP Speed+Mobility should be watched closely as well, especially since it’s backed by Microsoft, but at the time of writing no working implementation is publicly available.

SPDY

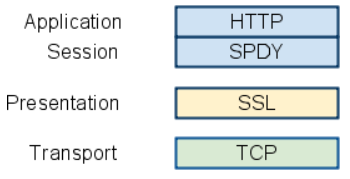

SPDY (“SPeeDY”) is a set of Google optimizations to web browsers and servers that aims to reduce the latency of page load times. SPDY keeps the semantics of the HTTP protocol, for example GET/POST/HEAD, but modifies its connection management strategies. SPDY’s core innovation is to layer a new framing protocol beneath HTTP that allows multiple requests to run over a single TCP connection:

Figure 1: SPDY protocol layering (Source: http://www.chromium.org/spdy/spdy-whitepaper)
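To make the framing idea concrete, here is a minimal sketch of how several responses can share one connection. It assumes the data-frame layout from the SPDY draft 3 specification (a control bit of 0, a 31-bit stream ID, 8 bits of flags and a 24-bit length) and ignores control frames, header compression and flow control entirely; the function names are hypothetical.

```python
import struct

FLAG_FIN = 0x01  # marks the last data frame of a stream

def encode_data_frame(stream_id: int, payload: bytes, fin: bool = False) -> bytes:
    """Pack a SPDY-style data frame: control bit 0 + 31-bit stream ID,
    then 8-bit flags + 24-bit length, then the payload."""
    if not 0 < stream_id < 2**31:
        raise ValueError("stream ID must fit in 31 bits")
    if len(payload) >= 2**24:
        raise ValueError("payload too large for a 24-bit length field")
    flags = FLAG_FIN if fin else 0
    header = struct.pack("!II", stream_id & 0x7FFFFFFF, (flags << 24) | len(payload))
    return header + payload

def decode_frames(buf: bytes):
    """Yield (stream_id, flags, payload) for each data frame in buf."""
    offset = 0
    while offset < len(buf):
        word1, word2 = struct.unpack_from("!II", buf, offset)
        if word1 & 0x80000000:
            raise ValueError("control frames are not handled in this sketch")
        stream_id = word1 & 0x7FFFFFFF
        flags, length = word2 >> 24, word2 & 0xFFFFFF
        offset += 8
        yield stream_id, flags, buf[offset:offset + length]
        offset += length

# Three responses interleaved on one "connection" (a byte string here).
# Client-initiated SPDY streams use odd IDs.
wire = b"".join([
    encode_data_frame(1, b"<html>..."),
    encode_data_frame(3, b"body { }", fin=True),
    encode_data_frame(1, b"</html>", fin=True),
    encode_data_frame(5, b"\x89PNG...", fin=True),
])

# The receiver reassembles each stream from its frames, in arrival order.
streams = {}
for sid, flags, data in decode_frames(wire):
    streams[sid] = streams.get(sid, b"") + data
print(streams)
```

The point of the stream ID is that frames from different responses can be interleaved freely on the wire; nothing forces stream 1 to finish before stream 3 starts.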

The motivation behind this idea is the rising complexity of modern web pages to which we just alluded. Because HTTP/1.1 serializes the requests on each connection, a web browser that needs to pull in dozens of resources quickly runs into a bottleneck. The HTTP/1.1 specification recommends that a client maintain no more than 2 persistent TCP connections per server, which gives browsers a semblance of parallelism, but even this hasn’t solved the problem and, in practice, most mainstream browsers just ignore the spec and routinely open 4-6 TCP connections per server.

For example, I browsed Microsoft’s website, ran “netstat -ab” from a command prompt and found 6 connections to one of Microsoft’s CDN properties:

    Active Connections

    Proto  Local Address    Foreign Address            State
    [iexplore.exe]
    TCP    localhost:3797   cds237.ewr9.msecn.net:80   ESTABLISHED
    TCP    localhost:3798   cds237.ewr9.msecn.net:80   ESTABLISHED
    TCP    localhost:3799   cds237.ewr9.msecn.net:80   ESTABLISHED
    TCP    localhost:3800   cds237.ewr9.msecn.net:80   ESTABLISHED
    TCP    localhost:3801   cds237.ewr9.msecn.net:80   ESTABLISHED
    TCP    localhost:3802   cds237.ewr9.msecn.net:80   ESTABLISHED
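Tallying connections per server from a listing like the one above takes only a few lines. This is a minimal sketch, assuming the whitespace-separated four-column layout shown (real `netstat -ab` output varies by Windows version and locale); the function name and the comparison against the HTTP/1.1 two-connection recommendation are illustrative additions.

```python
from collections import Counter

# Lines in the same layout as the listing above.
SAMPLE = """\
Proto Local Address Foreign Address State
[iexplore.exe]
TCP localhost:3797 cds237.ewr9.msecn.net:80 ESTABLISHED
TCP localhost:3798 cds237.ewr9.msecn.net:80 ESTABLISHED
TCP localhost:3799 cds237.ewr9.msecn.net:80 ESTABLISHED
TCP localhost:3800 cds237.ewr9.msecn.net:80 ESTABLISHED
TCP localhost:3801 cds237.ewr9.msecn.net:80 ESTABLISHED
TCP localhost:3802 cds237.ewr9.msecn.net:80 ESTABLISHED
"""

# RFC 2616, section 8.1.4: a client SHOULD NOT maintain more than 2 connections per server.
HTTP11_RECOMMENDED_MAX = 2

def connections_per_server(netstat_text: str) -> Counter:
    """Count ESTABLISHED TCP connections per foreign host:port.
    Header and [process] lines are skipped: they lack the 4-field TCP shape."""
    counts = Counter()
    for line in netstat_text.splitlines():
        fields = line.split()
        if len(fields) == 4 and fields[0] == "TCP" and fields[3] == "ESTABLISHED":
            counts[fields[2]] += 1
    return counts

for server, n in connections_per_server(SAMPLE).most_common():
    note = "above HTTP/1.1 recommendation" if n > HTTP11_RECOMMENDED_MAX else "ok"
    print(server, n, note)
```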

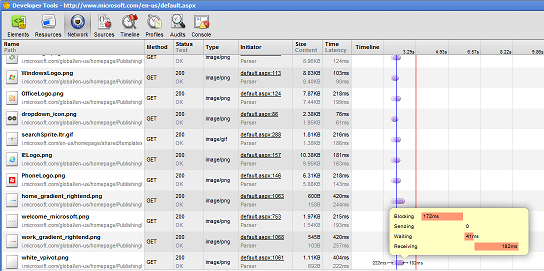

Even with 6 connections per server, browsers can quickly block if they’re downloading multiple images. Here’s another example using http://microsoft.com as viewed in a Google Chrome waterfall diagram (in Chrome, right-click to bring up Developer Tools > Network):

Figure 2: Chrome developer tools network waterfall diagram

Note the large number of image files being requested from http://i.microsoft.com and how requests start to block because of the high number of HTTP GETs.
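The blocking in Figure 2 follows from simple arithmetic. Below is a deliberately crude model, assuming each connection carries one request at a time (no pipelining), every request costs exactly one round trip, and bandwidth, handshakes and Slow Start are ignored. The 60-resource page and 100 ms round-trip time are hypothetical, and the last case treats multiplexing as putting every request in flight at once, which is an upper bound on the benefit rather than a prediction.

```python
import math

def request_rounds(num_resources: int, connections: int) -> int:
    """Round trips needed if each connection handles one request at a time."""
    return math.ceil(num_resources / connections)

def estimated_fetch_ms(num_resources: int, connections: int, rtt_ms: float) -> float:
    """Crude latency estimate: one RTT per round, nothing else modeled."""
    return request_rounds(num_resources, connections) * rtt_ms

PAGE_RESOURCES = 60  # hypothetical image-heavy page
RTT_MS = 100         # hypothetical round-trip time

for label, conns in [("2 connections (HTTP/1.1 recommendation)", 2),
                     ("6 connections (typical browser)", 6),
                     ("all requests in flight (ideal multiplexing)", PAGE_RESOURCES)]:
    print(f"{label}: {estimated_fetch_ms(PAGE_RESOURCES, conns, RTT_MS):.0f} ms")
```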

The irony of browsers violating the HTTP spec to do an end run around its limitations has been an open secret in the web and networking community for years. SPDY attempts to finally address this issue in a proper way by multiplexing numerous requests in parallel over a single TCP connection. This has the added benefit that a single connection quickly achieves its optimal TCP congestion window, even in the absence of tweaks to a server’s TCP stack of the kind discussed below under TCP Slow Start.

Another key innovation, related to request multiplexing, is request prioritization: SPDY allows browsers to assign a priority to each request. Many resources in web pages, such as images, are desirable but not essential to begin displaying a page and providing crucial visual feedback to the user. It might make more sense to prioritize a JavaScript download if it’s necessary to render the page and leave subsidiary images for later. Web page designers have used HTML code-ordering tricks for years to try to effect prioritization; now SPDY codifies the ability of browsers to do so natively.
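On the sending side, prioritization boils down to choosing which queued frame goes out next. A minimal sketch, assuming SPDY draft 3’s 3-bit priority (0 is highest, 7 is lowest) and a simple policy of sending the most urgent frame first, first-in-first-out within a level. The class name and file names are hypothetical; real servers treat priority as advisory and may interleave more cleverly.

```python
import heapq
import itertools

class PriorityScheduler:
    """Pick the next frame to send: lowest priority number first,
    first-in-first-out among frames with the same priority."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order

    def enqueue(self, priority: int, stream_id: int, chunk: bytes) -> None:
        if not 0 <= priority <= 7:
            raise ValueError("priority must fit in 3 bits")
        heapq.heappush(self._heap, (priority, next(self._seq), stream_id, chunk))

    def next_frame(self):
        """Return (stream_id, chunk) for the most urgent frame, or None."""
        if not self._heap:
            return None
        _, _, stream_id, chunk = heapq.heappop(self._heap)
        return stream_id, chunk

sched = PriorityScheduler()
sched.enqueue(7, 5, b"hero-image.png chunk")  # decorative image: lowest priority
sched.enqueue(0, 3, b"app.js chunk")          # script needed to render: highest
sched.enqueue(7, 7, b"thumbnail.png chunk")
sched.enqueue(2, 1, b"style.css chunk")

order = []
while (frame := sched.next_frame()) is not None:
    order.append(frame[0])
print(order)  # stream IDs in send order
```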

Note in Figure 1 above that SPDY rides over SSL. This design decision by SPDY’s developers had several motivations but perhaps the chief one was that many proxy servers won’t pass traffic over port 80 unless it’s pure HTTP.

A third core element of SPDY is HTTP header compression. Many headers (cookies, user agents, accept strings, etc.) are bloated and largely redundant after the first request, yet HTTP requires them to be resent with every request. A typical example is given below in Figure 4. While gzip compression of response bodies is a well-known web optimization technique, it is not deployed nearly to the extent it could be, and HTTP/1.1 offers no way to compress headers at all. SPDY mandates header compression: every header block is compressed with zlib.
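Header compression pays off on a persistent connection because the compressor’s history spans requests: after the first request, a repeated cookie or user-agent string costs only a short back-reference. A minimal sketch using Python’s zlib module with one compression context shared across header blocks, as SPDY does; SPDY additionally primes zlib with a preset dictionary of common header strings, which is omitted here, and the header values are made up.

```python
import zlib

# Made-up request headers; the cookie stands in for the bulky, repeated kind.
COMMON = (
    b"host: i.microsoft.com\r\n"
    b"user-agent: Mozilla/5.0 (Windows NT 6.1; WOW64) ExampleBrowser/1.0\r\n"
    b"accept: image/png,image/*;q=0.8,*/*;q=0.5\r\n"
    b"accept-language: en-us,en;q=0.5\r\n"
    b"cookie: session=0123456789abcdef0123456789abcdef; prefs=lang%3Den-us\r\n"
)

def compress_blocks(blocks):
    """Compress each header block with ONE zlib stream shared by the whole
    connection, sync-flushing after each block so it can be sent right away."""
    comp = zlib.compressobj()
    return [comp.compress(b) + comp.flush(zlib.Z_SYNC_FLUSH) for b in blocks]

def decompress_blocks(chunks):
    """The receiver keeps a matching shared decompression context."""
    decomp = zlib.decompressobj()
    return [decomp.decompress(c) for c in chunks]

blocks = [b"path: /images/a.png\r\n" + COMMON,
          b"path: /images/b.png\r\n" + COMMON]
compressed = compress_blocks(blocks)
print("raw sizes:", [len(b) for b in blocks])
print("compressed sizes:", [len(c) for c in compressed])
```

The second block shrinks to a handful of bytes because nearly all of it matches the first; compressing each request independently would forfeit that gain.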

SPDY requires code changes on both clients and servers. To date, the largest production deployment involves Google’s Chrome browser and Google’s own web properties, such as Gmail and Google search. Billions of pages have been served up in production. The SPDY landscape is evolving rapidly, so the following snapshot may already be out of date, but here is a sampling of what’s currently available:

| Category | Support |
| --- | --- |
| Browsers | Google Chrome, Firefox, Kindle Fire |
| Websites & Servers | Google.com (various), Twitter.com, Apache (mod_spdy), nginx, node.js, Amazon Kindle Fire, F5 BIG-IP |

Table 1: SPDY support as of June 2012

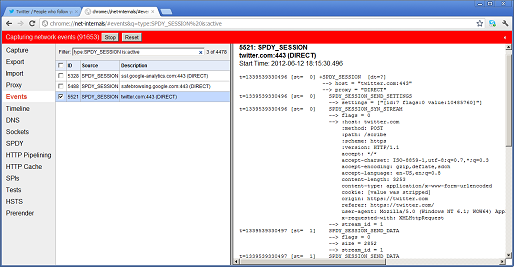

Because SPDY is still evolving and it runs over SSL it can be difficult to debug using tools such as Wireshark or Microsoft Network Monitor. Within Chrome, there is a somewhat human-readable view into SPDY at the magic URL chrome://net-internals/#spdy. After logging into Twitter from Chrome, for instance, several SPDY sessions were created:

Figure 3: Twitter SPDY sessions as seen in chrome://net-internals

WebSocket

WebSocket is an IETF standards-track protocol that is currently supported by Chrome and Firefox and promised in Microsoft’s upcoming Internet Explorer 10. The WebSocket protocol attempts to address two key shortcomings in HTTP: its unidirectional request-response model and the fact that, since each HTTP request operation is independent, there is a substantial protocol overhead due to the inclusion of the same HTTP headers in every request.

HTTP was originally conceived of as a protocol for retrieving full documents from arbitrary locations on the nascent Internet. In 1991 this was quite an accomplishment, but it simply doesn’t describe today’s Web. Full-duplex, bidirectional communication was a common feature of full-blown desktop apps years ago, but on the Web it has never been a first-class citizen.

Until recently, browsers have had trouble doing real-time, bidirectional communication, e.g. server-to-client push. Ingenious as they are, techniques such as AJAX (client-side “asynchronous JavaScript”) and Comet (server-managed long-polling) are ultimately hacks to get around HTTP’s design limitations. Browser plug-ins such as Java and Flash can open a TCP socket independently of the browser, but they have limitations, among them that they don’t always operate over ports 80 or 443 (running into firewall issues) and are not universally deployed.

WebSocket revolutionizes HTTP by embedding the ability to open what is in effect a full-duplex socket directly into the web browser itself. I say “in effect” because WebSockets are more limited than the classic TCP socket:

They are designed to run over TCP ports 80 and 443, the standard web ports

Data is chunked into WebSocket protocol frames rather than streamed

For security reasons, data is tied to a web “origin” (which you can see in the example below)

In practice, these “limitations” might actually be an advantage in that they provide WebSockets with most of the benefits of genuine sockets but without their drawbacks (e.g. firewall blockage, insecure web endpoints). WebSocket requires browsers and servers, and in certain cases proxies, to understand the protocol, so its deployment is still spotty, but this state of affairs is likely to be temporary.

Here’s how a WebSocket connection is set up. The client initiates a standard HTTP connection but includes an Upgrade header in the request. RFC 6455 gives this example WebSocket handshake:

The handshake from the client looks as follows:

    GET /chat HTTP/1.1
    Host: server.example.com
    Upgrade: websocket
    Connection: Upgrade
    Sec-WebSocket-Key: dGhlIHNhbXBsZSBub25jZQ==
    Origin: http://example.com
    Sec-WebSocket-Protocol: chat, superchat
    Sec-WebSocket-Version: 13

The handshake from the server looks as follows:

    HTTP/1.1 101 Switching Protocols
    Upgrade: websocket
    Connection: Upgrade
    Sec-WebSocket-Accept: s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
    Sec-WebSocket-Protocol: chat

The key things to note in this example are the client requesting that the server “upgrade” HTTP to WebSocket and the server’s reply that it is, in fact, switching protocols. At the end of this handshake, HTTP is actually no longer being used and the WebSocket protocol is in effect instead. (WebSocket URLs even have their own scheme: ws:// and wss:// replace, respectively, http:// and https://).
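The Sec-WebSocket-Accept value in the server’s reply is not arbitrary. RFC 6455 has the server append a fixed GUID to the client’s Sec-WebSocket-Key, hash the result with SHA-1 and base64-encode the digest, which proves the server actually understood the upgrade request. A minimal sketch; the helper names are hypothetical, the header dictionary is a simplification (real HTTP header names are case-insensitive), but the GUID and the expected value come straight from RFC 6455’s example handshake.

```python
import base64
import hashlib
import os

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

def make_client_key() -> str:
    """A fresh Sec-WebSocket-Key: 16 random bytes, base64-encoded."""
    return base64.b64encode(os.urandom(16)).decode("ascii")

def accept_for(key: str) -> str:
    """The value a server must send back in Sec-WebSocket-Accept."""
    digest = hashlib.sha1((key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")

def build_handshake(host: str, path: str, origin: str, key: str) -> str:
    """Client upgrade request, in the same shape as the RFC example."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Upgrade: websocket",
        "Connection: Upgrade",
        f"Sec-WebSocket-Key: {key}",
        f"Origin: {origin}",
        "Sec-WebSocket-Version: 13",
    ]
    return "\r\n".join(lines) + "\r\n\r\n"

def server_accepted(status_line: str, headers: dict, key: str) -> bool:
    """Client-side check of the server's 101 reply (simplified header lookup)."""
    return (status_line.startswith("HTTP/1.1 101")
            and headers.get("Upgrade", "").lower() == "websocket"
            and headers.get("Sec-WebSocket-Accept") == accept_for(key))

print(accept_for("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```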

As a historical footnote, HTTPS was also originally conceived of as a similar “upgrade” in which the Layer 4 TCP session would remain on port 80 but the Layer 7 application protocol would morph from HTTP to HTTP-over-TLS. In practice this didn’t pan out and a separate TCP port 443 is used for HTTPS. WebSocket revives this dormant protocol-switching capability that has always existed within HTTP.

Let’s take a brief look at the two big wins of WebSocket: server-to-client push and reduced protocol overhead.

For a typical news feed, stock ticker or multiplayer game, the classic pre-WebSocket trick for giving the appearance of real-time updates (server push) was some kind of polling. For example, the client might use a JavaScript timer to contact the web server once every 5 seconds. This gives the illusion of real-time communication but is in fact a hack. Worse, it’s a hack that comes with a cost: HTTP is a stateless protocol and each request stands on its own, so every request carries the same headers over and over again.

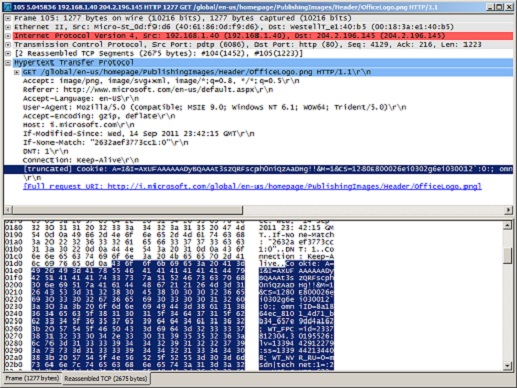

Take the Microsoft home page. Like most websites these days, the main page includes numerous resources, such as images, scripts, etc. One of the many resource requests on the Microsoft home page is for an image:

<img src=”http://i.microsoft.com/global/en-us/homepage/

PublishingImages/Header/OfficeLogo.png”

alt=”Office” width=”100%” height=”100%” class=”hpImage_Img”/>

This doesn’t look so bad, yet on the wire this simple URL, whose length is 85 bytes, travels with over 1,200 bytes of HTTP header bloat (much of which is used for a cookie):

Figure 4: HTTP header bloat

These headers are sent with every HTTP request to the i.microsoft.com domain (presumably where Microsoft stores its images). While the Microsoft home page is not a real-time app, picture a news feed in which thousands of clients are polling every few seconds: the network traffic overhead could quickly become overwhelming. The WebSocket protocol eliminates all this unnecessary overhead, firstly because clients are no longer communicating in HTTP and secondly because servers can push messages to clients over the already-open connection without the need for polling. A typical WebSocket “push” message from the server to the client might carry just 5 bytes of data; apart from TCP/IP framing, the WebSocket protocol itself adds as little as 2 bytes.
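Both halves of that comparison can be checked with a few lines of code. The sketch below encodes a single unfragmented, unmasked text frame the way a server sends it, following RFC 6455 section 5.2 (client-to-server frames must also carry a 4-byte masking key, omitted here), and then estimates aggregate overhead. The 10,000 clients are a hypothetical figure; the 1,200 bytes of headers and the 5-second polling interval come from the examples above, and only request headers are counted, not responses or TCP/IP overhead.

```python
import struct

def encode_server_text_frame(text: str) -> bytes:
    """One unfragmented, unmasked text frame, as a server sends it."""
    payload = text.encode("utf-8")
    first_byte = 0x80 | 0x1  # FIN bit set + opcode 0x1 (text)
    n = len(payload)
    if n <= 125:         # length fits in the 7-bit field
        header = struct.pack("!BB", first_byte, n)
    elif n <= 0xFFFF:    # 126 signals a 16-bit extended length
        header = struct.pack("!BBH", first_byte, 126, n)
    else:                # 127 signals a 64-bit extended length
        header = struct.pack("!BBQ", first_byte, 127, n)
    return header + payload

def aggregate_bytes_per_sec(clients: int, bytes_each: int, interval_s: float) -> float:
    """Total bytes per second when every client sends or receives
    bytes_each once per interval_s seconds."""
    return clients * bytes_each / interval_s

frame = encode_server_text_frame("Hello")
print(frame.hex())                                            # 810548656c6c6f
framing_overhead = len(frame) - len("Hello")                  # 2 bytes
CLIENTS = 10_000                                              # hypothetical audience
print(aggregate_bytes_per_sec(CLIENTS, 1_200, 5))             # polling headers: 2400000.0
print(aggregate_bytes_per_sec(CLIENTS, framing_overhead, 5))  # WebSocket framing: 4000.0
```

The frame bytes match the single-frame unmasked “Hello” example given in RFC 6455.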

One important caveat: many proxy servers don’t yet understand the WebSocket protocol (for example, they fail to understand the “Upgrade: websocket” directive). While this is expected to change, at present most WebSocket implementations try to get around the issue by using TCP/443. The assumption is that since proxy servers don’t usually have the ability to inspect an encrypted HTTPS stream, they’ll just pass along any TCP/443 traffic unobstructed.

TCP Slow Start

Slow Start is a congestion control mechanism built into TCP in which a brand new connection starts off allowing only a small number (e.g. 2-3) of unacknowledged TCP segments in flight. As ACKs come back from the client, confirming that it is receiving the data successfully, the server grows the congestion window (roughly doubling it every round trip), with a concomitant improvement in throughput, since more segments can be sent before an acknowledgment is required. The prevailing default on most of today’s operating systems is an initial congestion window (init_cwnd) of 2-3 segments of roughly 1460 bytes each, which means that responses larger than about 3-4 KB cannot be delivered in the first round trip.
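The cost of a small initial window can be estimated with an idealized model. This minimal sketch assumes the 1460-byte segments used in the text, a window that starts at init_cwnd and doubles every round trip, and no losses, delayed ACKs or receive-window limits; it also ignores the TCP handshake and the request itself. The function name and the sample sizes are illustrative.

```python
import math

MSS = 1460  # approximate bytes per TCP segment, as in the text

def round_trips_to_deliver(response_bytes: int, init_cwnd: int) -> int:
    """Idealized Slow Start: send a full window each round trip, starting at
    init_cwnd segments and doubling the window after every round trip."""
    segments_left = math.ceil(response_bytes / MSS)
    cwnd = init_cwnd
    round_trips = 0
    while segments_left > 0:
        segments_left -= cwnd  # one window's worth goes out this round trip
        cwnd *= 2              # each ACKed segment grows cwnd by one segment
        round_trips += 1
    return round_trips

# Round trips for a few response sizes, with init_cwnd of 2, 3 and 10 segments.
for size in (4_380, 14_336, 65_536):
    print(size, [round_trips_to_deliver(size, w) for w in (2, 3, 10)])
```

Under these assumptions a 14 KB response needs three round trips with a 3-segment initial window but only one with 10 segments.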

For long-lived TCP connections, Slow Start isn’t a problem: the connection quickly reaches its “mature”, low-latency phase. But for the many tiny, short-lived HTTP requests that make up much of web traffic (reportedly, 90% of requests are for objects under 16 KB), a small init_cwnd translates into multiple round trips and sub-optimal performance.

Design features of both WebSocket and SPDY take this low TCP init_cwnd into account and try to work around it, but Google researchers advocate simply raising the default to 10 segments, which translates into about 15 KB that could be included in a web server’s initial reply. That would cover close to the 90% of web requests just mentioned and provide a very simple, across-the-board performance boost to the Web.
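The “about 15 KB” figure is simply the initial window multiplied by the segment size, using the approximate 1460-byte segment from above and assuming every segment is full-sized (1 kB = 1,000 bytes):

$$
\begin{aligned}
\text{init\_cwnd} = 3: &\quad 3 \times 1460 = 4{,}380\ \text{bytes} \approx 4.4\ \text{kB} \\
\text{init\_cwnd} = 10: &\quad 10 \times 1460 = 14{,}600\ \text{bytes} \approx 14.6\ \text{kB}
\end{aligned}
$$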

While Google’s suggestion has yet to be incorporated into an IETF standard, major real-world sites have reportedly been employing this TCP tuning trick for years. Windows doesn’t offer the same ease of TCP stack tuning as Linux, but an example of how to increase the init_cwnd on Windows Server 2008 R2 is explained here.

Final thoughts

Any major change has consequences, some of which can be foreseen and others that only emerge in the fullness of time. Here are some things to watch out for:

Will your organization’s web servers, proxies and load balancers accommodate the new protocols?

Web filtering technologies, whether used for good or ill, will need to adapt. On unencrypted WebSocket streams, for instance, new methods of deep packet inspection will need to be developed.

Do your security policies make assumptions about what kind of traffic is on TCP 80/443 that may no longer be warranted?

SPDY will accelerate the migration to SSL. Unencrypted HTTP traffic will increasingly look anachronistic.

Transparent proxy servers may become irrelevant with a future reduction in unencrypted HTTP/1.1 “legacy” traffic. Eventually they may just become anachronisms, serving only to increase latency and providing limited or no benefit.

Today’s web servers, load balancers and firewalls are tuned for short-lived HTTP requests. Any significant rise in WebSocket traffic, with its persistent, long-lived TCP connections, will likely change the calculus.

SPDY is still under development. Nevertheless, Google already claims that it offers substantial performance improvements over HTTP and HTTPS on many metrics, including page load time and the number of bytes and packets sent. WebSocket is already an IETF standard and is starting to appear in production. It is likely that part or all of SPDY will be incorporated into future IETF and/or W3C standards, possibly in conjunction with pieces of WebSocket or Microsoft’s HTTP Speed+Mobility as HTTP/2.0, especially since Microsoft and Google appear to be saying positive things in public about one another’s work.

HTTP has had an illustrious history, but little has happened in the past 13 years. A major new chapter is now being written, so stay tuned.